I.INTRODUCTION

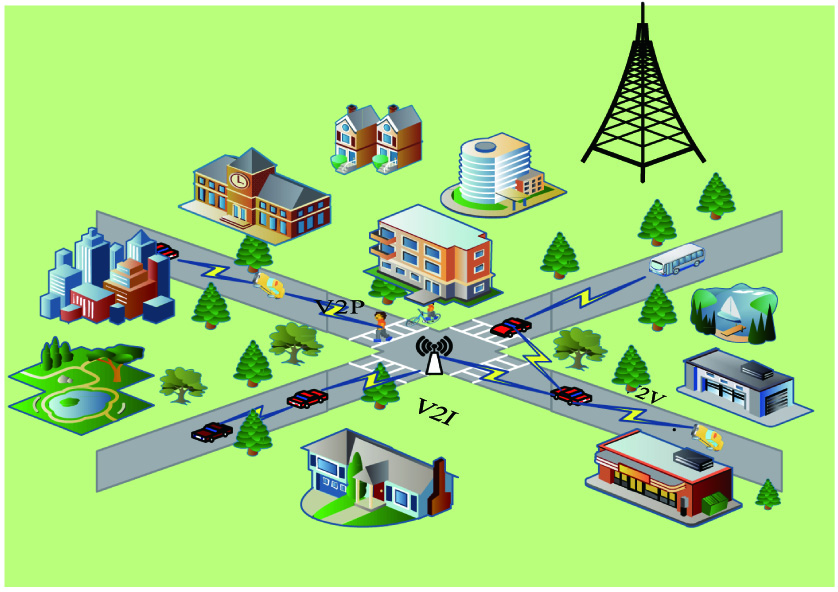

With the continuous development of information technologies such as wireless network communication and artificial intelligence (AI), vehicles have become increasingly interconnected in the era of the Internet of Everything, and vehicle applications have become more intelligent and diversified. The rapid evolution of the Internet of Vehicles (IoV) has led to various delay-sensitive vehicle applications, such as autonomous driving systems and high-precision maps. Automotive applications usually need to process large amounts of data, so they pose severe challenges to vehicles with limited computing resources [1]. To address this problem, various computation offloading strategies and communication infrastructures have been proposed. These approaches use vehicular communication capabilities to transfer computation-intensive tasks to a cloud computing center. Cloud computing satisfies the computing requirements of resource-intensive applications to a certain extent, but it also introduces propagation delay: the long transmission distance between the vehicle and the cloud center leads to long response times and unstable communication links [2]. Mobile edge computing is a new network paradigm in which computation is performed at the network edge, close to the terminals, which can effectively reduce the delay of delay-sensitive applications [3]. Vehicle Edge Computing (VEC) is the application of mobile edge computing to IoV. It enhances the computing capability available to vehicles and improves the performance of delay-sensitive onboard applications. As shown in Fig. 1, through information exchange with roadside units (RSUs) and cloud centers, vehicles can improve transportation efficiency and reduce traffic accident rates and energy consumption [4].

Computing offloading moves computation-intensive and delay-sensitive tasks to edge servers for execution, which significantly reduces task processing delay and energy consumption. At the same time, because offloaded data need not traverse the core network, computing offloading consumes less core network bandwidth while maintaining the data transmission rate, and it alleviates network congestion. Computing offloading is therefore the key technology of VEC. However, vehicles in the IoV environment are highly mobile and the network topology is dynamic, so computing offloading in VEC faces great challenges. In addition, unlike ordinary mobile terminals, each vehicular terminal in VEC can simultaneously be a task vehicle that requests service and a service vehicle that executes tasks. Computing offloading in VEC is thus an important research direction [5,6]. Based on an extensive survey of VEC research, we analyze and summarize computing offloading in VEC.

The rest of the paper is organized as follows. Section II outlines VEC. Section III introduces the application scenarios of VEC. Section IV summarizes existing studies on computing offloading for VEC. Section V discusses the challenges of computing offloading, and Section VI presents an outlook on future research directions.

II.THE OVERVIEW OF VEC

In a VEC network, each vehicle has its own communication, computing, and storage capabilities, while the RSUs near the vehicle act as edge servers for timely data collection, processing, and storage. Because of their limited capacity, vehicles can offload computation-intensive and delay-sensitive tasks to edge servers, shortening response times and reducing the burden on backhaul networks.

A.THE ARCHITECTURE OF THE VEC NETWORK

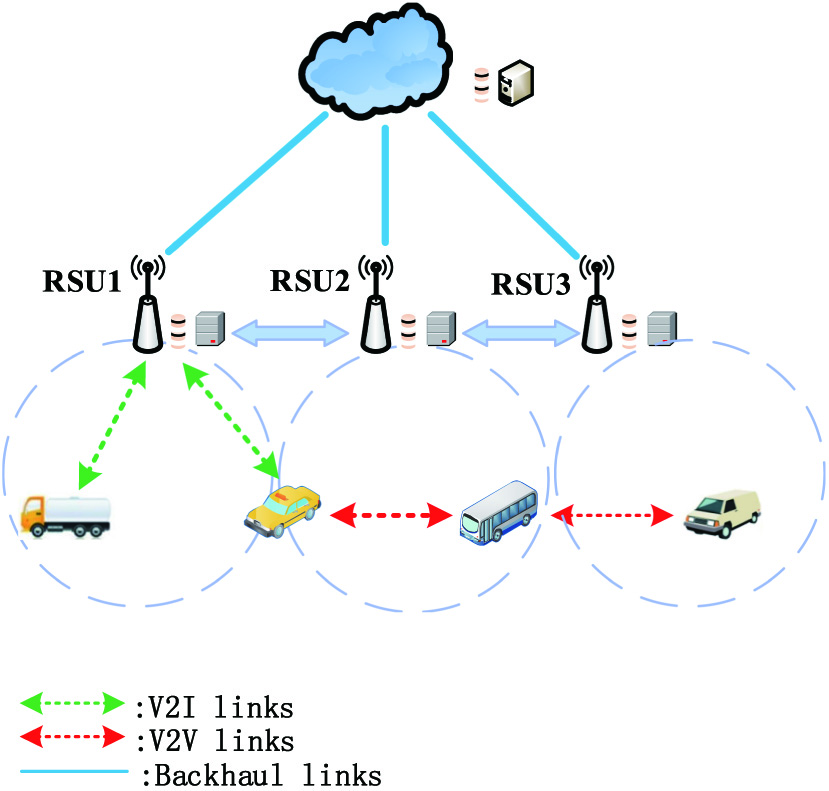

Fig. 2 shows the network structure of VEC. The architecture generally consists of three layers: the user layer (vehicle terminals), the multi-access edge computing (MEC) layer (RSUs), and the cloud layer (cloud servers).

B.COMPUTING OFFLOADING

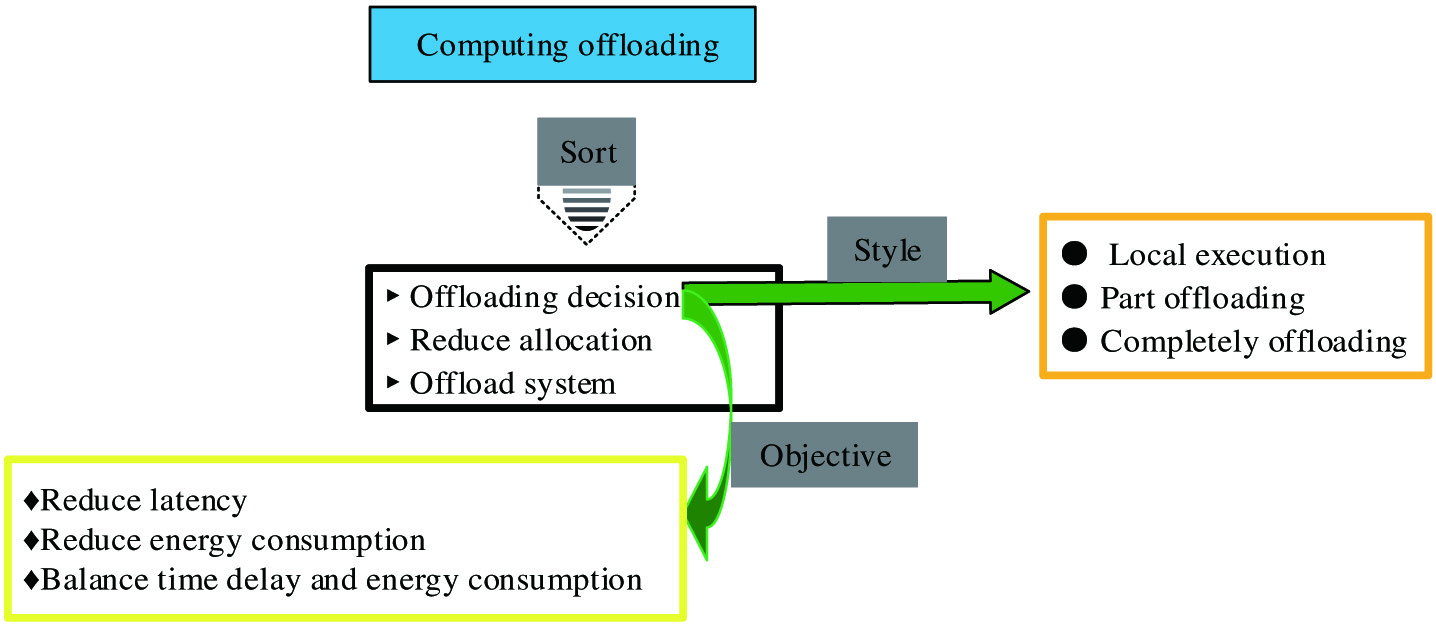

Computing offloading in VEC means that vehicles upload computing tasks to edge servers for processing, reducing the time and energy the vehicles spend. As shown in Fig. 3, computing offloading involves the offloading policy, resource allocation, and the implementation of the offloading system. The offloading process is as follows.

- (1)Request service. The task vehicle sends a service request to the infrastructure within its communication range (e.g., a base station or RSU).

- (2)Upload tasks. The task vehicle sends the computing tasks to edge servers through V2X (Vehicle to Everything) communication.

- (3)Execute tasks. According to the resource allocation policy, the edge server decides where the tasks are executed and completes the computation.

- (4)Return results. The edge server sends computing results back to the task vehicles.
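The four steps above can be traced in a small latency model. The sketch below is illustrative only: it assumes a one-dimensional road, circular RSU coverage, fixed uplink and downlink rates, and a hypothetical rule that the vehicle picks the in-range RSU with the fastest server. The names `RSU`, `Task`, and `offload` are introduced here for illustration and do not come from the surveyed works.

```python
from dataclasses import dataclass

@dataclass
class RSU:
    name: str
    x: float        # position along the road (m)
    radius: float   # communication range (m)
    cpu_hz: float   # CPU frequency of the attached edge server (cycles/s)

@dataclass
class Task:
    data_bits: float    # input data uploaded in step (2)
    cycles: float       # CPU cycles needed in step (3)
    result_bits: float  # result size returned in step (4)

def offload(vehicle_x, task, rsus, uplink_bps, downlink_bps):
    """Return (chosen RSU name, end-to-end delay in s), or None if no RSU is in range."""
    # (1) Request service: only RSUs whose coverage includes the vehicle can respond.
    in_range = [r for r in rsus if abs(r.x - vehicle_x) <= r.radius]
    if not in_range:
        return None  # no infrastructure reachable, so the task stays local
    # Hypothetical selection rule: pick the responding RSU with the fastest server.
    rsu = max(in_range, key=lambda r: r.cpu_hz)
    t_upload = task.data_bits / uplink_bps      # (2) upload over the V2X link
    t_execute = task.cycles / rsu.cpu_hz        # (3) execute on the edge server
    t_return = task.result_bits / downlink_bps  # (4) return the result
    return rsu.name, t_upload + t_execute + t_return
```

For instance, with RSUs at 0 m, 500 m, and 1200 m (300 m range each), a vehicle at 250 m can reach the first two, and the sketch returns the faster of them together with the sum of upload, execution, and return times.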

Fig. 3. The computing offloading.


Computing offloading can reduce network overload and communication delay. Content requesters can obtain content directly from edge service nodes without accessing the core network, which reduces end-to-end delay and improves network bandwidth utilization [7].

C.OFFLOADING POLICY

The offloading policy determines whether a vehicle offloads and how much it offloads. An offloading policy in VEC usually yields one of three outcomes: local execution, complete offloading, or partial offloading. The outcome for a task vehicle is determined by the vehicle's energy consumption and the delay of the computing task. The goals of an offloading policy can be summarized as reducing delay, reducing energy consumption, or balancing delay and energy consumption.

- (1)Local execution. The computing task is completed by the vehicle itself.

- (2)Complete offloading. The entire computing task is offloaded to and processed by the MEC server.

- (3)Partial offloading. Part of the computing task is processed locally, and the rest is offloaded to the MEC server for processing.
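To make the three outcomes concrete, the following sketch uses a common textbook-style model, which is an assumption rather than a model stated in this survey: local energy follows the dynamic CPU power model kappa * f^2 * C, offloading costs the vehicle only transmission energy p * D / r, and in partial offloading the local and offloaded parts run in parallel, so the delay is the larger of the two. All function and parameter names are hypothetical.

```python
def local_cost(cycles, f_local, kappa):
    """Local execution: delay C/f and dynamic CPU energy kappa*f^2*C (assumed model)."""
    delay = cycles / f_local
    energy = kappa * f_local ** 2 * cycles
    return delay, energy

def offload_cost(data_bits, cycles, rate_bps, f_edge, p_tx):
    """Complete offloading: upload time plus edge execution time; the vehicle
    only spends energy while transmitting (result download is ignored)."""
    t_tx = data_bits / rate_bps
    delay = t_tx + cycles / f_edge
    energy = p_tx * t_tx
    return delay, energy

def partial_cost(ratio, data_bits, cycles, f_local, kappa, rate_bps, f_edge, p_tx):
    """Partial offloading: `ratio` of the input bits and cycles goes to the MEC server."""
    t_loc, e_loc = local_cost((1 - ratio) * cycles, f_local, kappa)
    t_off, e_off = offload_cost(ratio * data_bits, ratio * cycles, rate_bps, f_edge, p_tx)
    # Both parts run in parallel, so the task finishes when the slower part does.
    return max(t_loc, t_off), e_loc + e_off
```

With C = 1e9 cycles, D = 1 Mbit, a 1 GHz onboard CPU, kappa = 1e-27, a 10 Mbit/s link, a 10 GHz edge server, and 0.5 W transmit power, the sketch gives about 1 s and 1 J for local execution and 0.2 s and 0.05 J for complete offloading.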

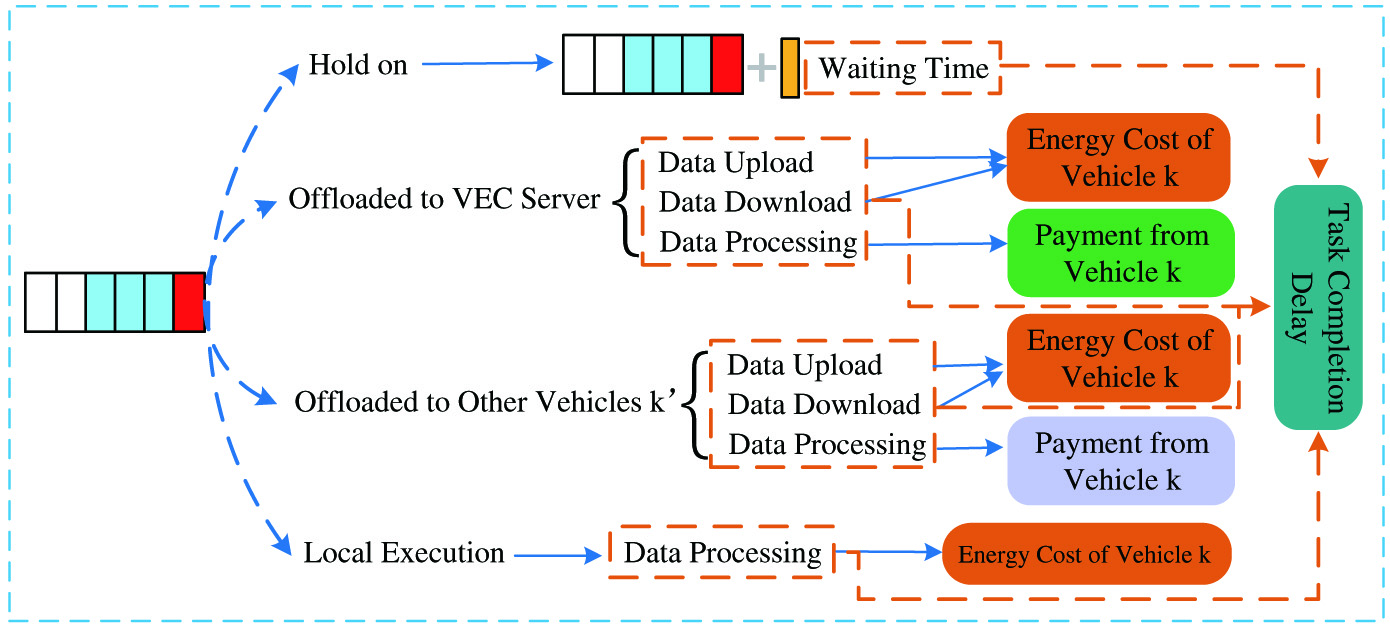

Fig. 4 lists the delay, energy cost, and delivery of task vehicles under the different offloading policies.

Fig. 4. Delay, energy cost, and delivery of different offloading policy.


An offloading policy must consider computing delay, since delay affects the quality of user experience. It must also consider energy consumption: if a device's energy consumption is too high, the battery of the mobile terminal will be drained.

D.OFFLOADING MODES

At any given time, a vehicle in VEC can act as a task vehicle, a service vehicle, or a relay node, so VEC supports various computing offloading modes. The main modes are as follows.

Vehicle-to-infrastructure offloading (V2I). The onboard equipment communicates with the RSU and offloads the computing task directly to the infrastructure.

Vehicle-to-vehicle offloading (V2V). Vehicles communicate through their onboard terminals, and the task is offloaded directly to an adjacent service vehicle that has spare computing resources.

Vehicle-to-vehicle-to-infrastructure offloading (V2V-I). Through communication among multiple vehicles, the task vehicle offloads its computing task to the infrastructure via other vehicles acting as relays.

Vehicle-to-vehicle-to-vehicle offloading (V2V-V). Through communication among multiple vehicles, the task vehicle offloads its computing task to a service vehicle via other vehicles acting as relays.
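The relay-based modes differ mainly in how many wireless hops the task crosses and which node finally executes it. Assuming store-and-forward relaying (each relay receives the whole task before forwarding it) and ignoring relay processing time and result return, the upload delay of a mode is the sum of its per-hop transmission times. The sketch below compares modes under that assumption; the function names, link rates, and CPU frequencies are hypothetical.

```python
def path_delay(data_bits, hop_rates_bps, exec_cycles, target_cpu_hz):
    """Store-and-forward upload over every hop, then execution at the final node."""
    transfer = sum(data_bits / r for r in hop_rates_bps)
    return transfer + exec_cycles / target_cpu_hz

def best_mode(data_bits, cycles, modes):
    """modes maps a mode name to (list of per-hop rates in bit/s, executor CPU in Hz).
    Returns the mode with the smallest delay and the delay of every mode."""
    delays = {m: path_delay(data_bits, hops, cycles, f) for m, (hops, f) in modes.items()}
    return min(delays, key=delays.get), delays
```

For example, a vehicle at the edge of RSU coverage with a poor 2 Mbit/s direct V2I link may do better relaying over 20 Mbit/s V2V hops through a vehicle closer to the RSU (V2V-I), which is the kind of trade-off the multi-hop modes exist to exploit.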

III.THE APPLICATION SCENARIOS OF VEC

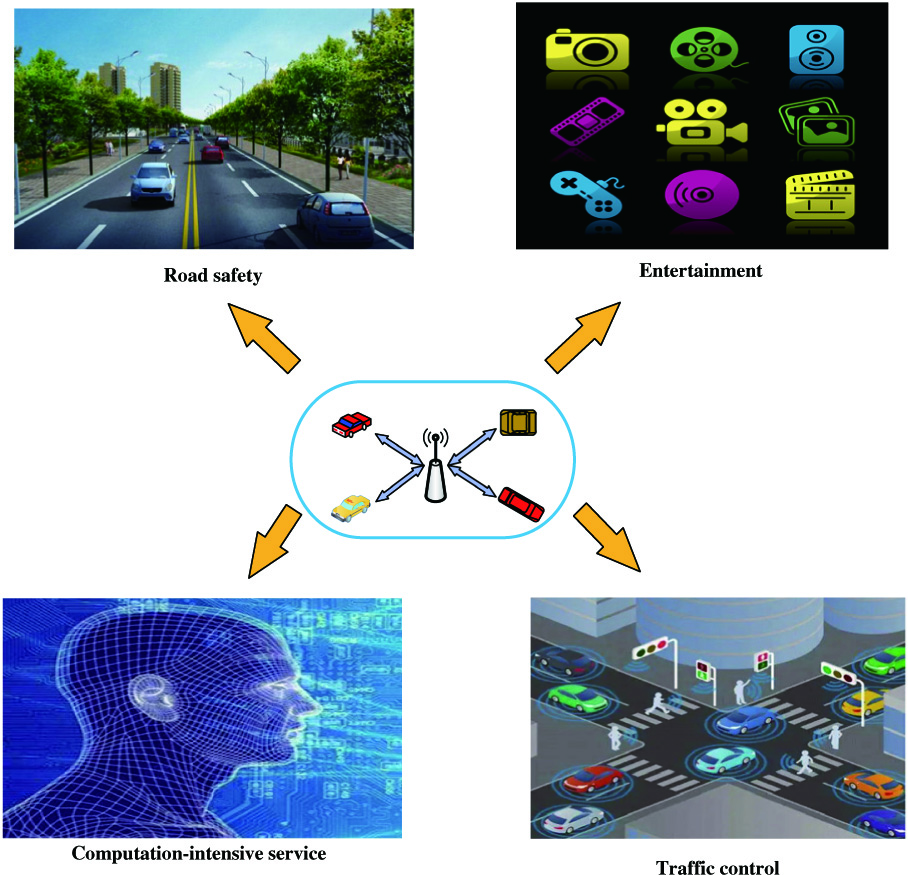

IoV applications usually fall into the categories of safety, efficiency, and entertainment. Because of their limited computing and energy resources, mobile vehicles tend to offload computation-intensive tasks to edge servers. Traditional cloud-based methods still work well for entertainment applications that are insensitive to delay and reliability; for example, offloading computation-centric tasks to a remote cloud center and returning the results from it can greatly increase the computing power available to in-vehicle entertainment applications. However, for tasks that are highly sensitive to delay and reliability, such as emergency obstacle avoidance, path planning, and road state recognition, this approach falls short: vehicles are usually far from the cloud center, so the backbone and core networks introduce high delay. VEC makes such applications feasible. Several typical VEC application scenarios are shown in Fig. 5.

Fig. 5. VEC application scenarios.


A.ROAD SAFETY

In road safety applications (such as emergency obstacle avoidance, path planning, and road state recognition), edge servers perform real-time analysis and processing of data from vehicles and from sensors deployed along the road, and they issue danger warnings when they detect unsafe factors. Surrounding vehicles can then brake, change lanes, or turn as appropriate to avoid possible hazards.

B.ENTERTAINMENT

Benefiting from VEC's flexible computing and storage resources, intelligent vehicles greatly simplify driving operations, allowing drivers to devote part of their attention to entertainment activities such as games, music, and videos [8]. Through content caching based on cooperation between vehicles and edge servers, vehicle applications obtain content without the help of a remote cloud, reducing delay and improving the user experience.

C.TRAFFIC CONTROL

In VEC, each edge server covers a certain communication area. Each vehicle sends its current state (position and speed) and the information it collects about its surroundings (weather and road conditions) to the relevant server. By collecting the information uploaded by vehicles, servers in VEC can obtain local traffic conditions in time and then control traffic flow to help avoid congestion [9].
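As an illustration of how an edge server might turn uploaded vehicle states into local traffic conditions, the sketch below groups (position, speed) reports into fixed-length road segments and flags segments whose mean speed falls below a fraction of the free-flow speed. The function name, segment length, free-flow speed, and 0.5 threshold are hypothetical choices, not part of the cited scheme [9].

```python
from collections import defaultdict

def congested_segments(reports, segment_len_m, free_flow_mps, threshold=0.5):
    """reports: iterable of (position_m, speed_mps) uploaded by vehicles in coverage.
    Returns the sorted indices of road segments judged to be congested."""
    speeds = defaultdict(list)
    for pos, speed in reports:
        speeds[int(pos // segment_len_m)].append(speed)
    # Flag a segment when its mean reported speed drops below threshold * free-flow speed.
    return sorted(seg for seg, v in speeds.items()
                  if sum(v) / len(v) < threshold * free_flow_mps)
```

A server could run such a check periodically over the latest reports and feed the flagged segments into its traffic-flow control decisions.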

D.COMPUTING-INTENSIVE SERVICES

Computing offloading in VEC can serve computation-intensive applications such as augmented reality and facial recognition. Because of their limited resources, vehicles usually cannot meet the computing requirements of these applications on their own; by offloading computing tasks to edge servers, they reduce latency and improve the user experience.

IV.COMPUTING OFFLOADING IN VEC

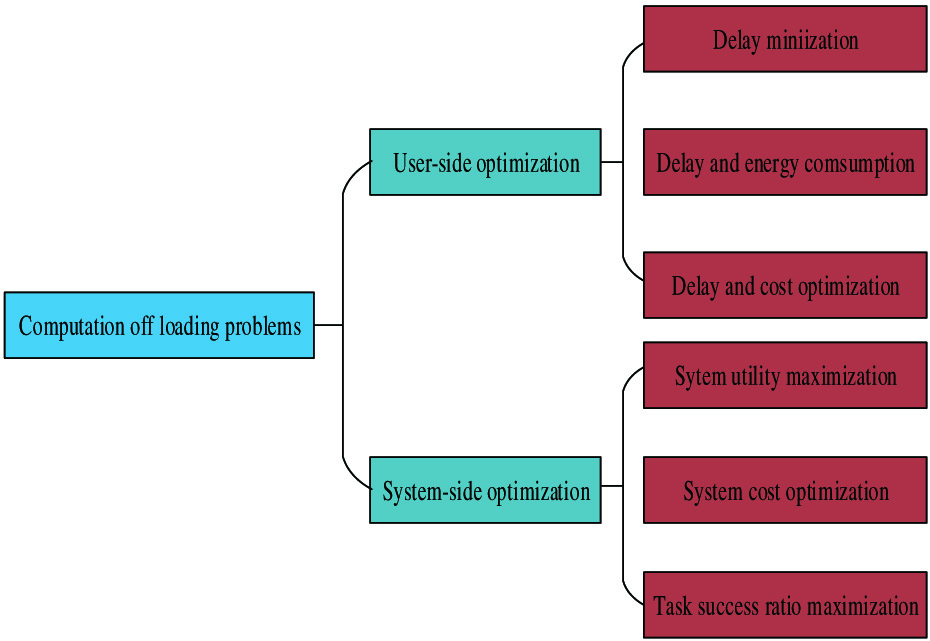

Existing research on computing offloading usually focuses on optimizing delay and energy consumption, and various solutions have been proposed for different objectives. As shown in Fig. 6, computing offloading in VEC can be divided into two categories.

Fig. 6. Categories of computing offloading in VEC.


A.USER-SIDE OPTIMIZATION

1)DELAY MINIMIZATION

The development of vehicle applications has greatly improved the user experience. However, these applications are usually real-time, complex, and computation-intensive [10]. If processing an application task takes too long, the data may become stale, which can even lead to traffic accidents. The delay in VEC includes transmission delay, computing delay, and communication delay, where the computing delay consists of offloading delay, queuing delay, and processing delay. Minimizing the latency of VEC applications is therefore of great significance. Zhao et al. proposed an edge caching and computing management scheme that optimizes service caching, request scheduling, and resource allocation strategies; the results show that this method achieves suboptimal delay performance [11]. Dai et al. proposed a hierarchical edge cloud computing architecture that minimizes the energy consumption and task execution delay of mobile terminals through computation offloading algorithms and resource allocation mechanisms [12].
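One way to make these delay components concrete is to model the edge server as an M/M/1 queue (Poisson task arrivals, exponentially distributed service times); this queueing model is an assumption for illustration. With service rate mu = f_edge / C tasks per second and arrival rate lam < mu, the mean waiting time is lam / (mu * (mu - lam)) and the mean processing time is 1 / mu, so queuing plus processing equals 1 / (mu - lam). The function name and parameters below are hypothetical.

```python
def edge_delay(data_bits, rate_bps, cycles, f_edge, arrival_rate):
    """Mean delay = transmission + queuing + processing, assuming an M/M/1 edge server."""
    t_tx = data_bits / rate_bps
    service_rate = f_edge / cycles   # tasks per second the server can complete
    if arrival_rate >= service_rate:
        return float("inf")          # unstable queue: waiting time grows without bound
    t_proc = 1.0 / service_rate      # mean service (processing) time
    # M/M/1 mean waiting time in the queue.
    t_queue = arrival_rate / (service_rate * (service_rate - arrival_rate))
    return t_tx + t_queue + t_proc
```

For a 1 Mbit task over a 10 Mbit/s link, needing 1e8 cycles on a 1 GHz server (10 tasks/s) with 5 tasks/s arriving, the sketch gives 0.1 s of transmission plus 0.2 s of queuing and processing. As the arrival rate approaches the service rate, the queuing term dominates, which is one reason load balancing across edge servers matters.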

2)DELAY AND ENERGY CONSUMPTION OPTIMIZATION

Computation-intensive applications with strict time constraints bring challenges to VEC and usually result in high energy consumption. When a vehicle's remaining energy is low, it is difficult to meet the needs of onboard applications. Therefore, energy consumption must be considered together with time constraints: VEC offloading needs to balance and jointly minimize delay and energy consumption, for example by exploiting larger channel gains and lower local computation energy. Sun et al. proposed an adaptive learning-based task offloading algorithm that learns the offloading delay performance of neighboring vehicles and minimizes the average offloading delay and energy consumption of each task vehicle [13]. Zhan et al. studied the computing offloading scheduling problem in VEC scenarios, modeled it as a carefully designed Markov decision process, and designed a deep reinforcement learning (DRL) algorithm based on proximal policy optimization (PPO) [14].
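A common way to balance the two objectives, assumed here rather than taken from [13] or [14], is to minimize a weighted sum w * T / T_local + (1 - w) * E / E_local, normalizing by the all-local delay and energy. The sketch below grid-searches the partial offloading ratio under a simple model in which the local and offloaded parts run concurrently, local energy is kappa * f^2 * C, and the vehicle spends only transmission energy on the offloaded part. The weight w, the function names, and all parameter values are hypothetical.

```python
def delay_energy(ratio, data_bits, cycles, f_local, kappa, rate_bps, f_edge, p_tx):
    """Delay and vehicle energy when a fraction `ratio` of the task is offloaded."""
    t_local = (1 - ratio) * cycles / f_local
    e_local = kappa * f_local ** 2 * (1 - ratio) * cycles  # dynamic CPU energy (assumed)
    t_tx = ratio * data_bits / rate_bps
    t_offload = t_tx + ratio * cycles / f_edge
    # Parts run concurrently; the vehicle pays only transmit energy for the offloaded part.
    return max(t_local, t_offload), e_local + p_tx * t_tx

def best_ratio(w, steps=1000, **params):
    """Grid-search the offloading ratio minimising w*T/T0 + (1-w)*E/E0."""
    t0, e0 = delay_energy(0.0, **params)  # all-local baseline used for normalization

    def cost(k):
        t, e = delay_energy(k / steps, **params)
        return w * t / t0 + (1 - w) * e / e0

    return min(range(steps + 1), key=cost) / steps
```

With a slow 2 Mbit/s link (D = 1 Mbit, C = 1e9 cycles, 1 GHz onboard CPU, 10 GHz edge server, 0.5 W), a delay-heavy weight w = 0.9 selects the ratio 0.625, at which both parts finish together, whereas an energy-heavy weight w = 0.1 offloads everything. A grid search is used only for transparency; in this piecewise-linear model the optimum lies at an endpoint or at the point where the two parts finish together.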

3)DELAY AND COST OPTIMIZATION

Another goal of VEC is to reduce the offloading cost, which includes communication and computing costs. Taking cellular communication as an example, vehicles may need to pay for transmitting and receiving data. To reduce such costs, cooperative distributed computing architectures that use vehicles as computing resources for VEC have been constructed. Qiao et al. proposed a cooperative task offloading and transmission mechanism to reduce the delay and energy consumption of the system [15]. Zhao et al. proposed a cooperative computing offloading and resource allocation optimization scheme and designed a distributed computing offloading and resource allocation algorithm. The scheme maximizes system utility (accounting for task processing delay and computing resource cost) by optimizing the offloading strategy, which is obtained using game theory, and the resource allocation [16]. Salman Raza et al. proposed a motion-aware partial task offloading algorithm to minimize the total offloading cost. The method considers the cost of the required communication and computing resources, divides a task into three parts, and determines the allocation ratio of the three parts according to the resources available to the vehicles. Simulation results show that the method not only reduces the communication cost of nearby vehicles but also relieves the burden on VEC servers, especially in dense urban environments [17].
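As a rough illustration of a three-part split, and not the algorithm of [17], one can assign cycles to the local vehicle, a nearby vehicle, and the VEC server in proportion to their available CPU frequency, so that all three parts finish computing at the same time when transmission delay is ignored. The function name, executor names, CPU frequencies, and per-gigacycle prices are hypothetical.

```python
def split_task(cycles, resources):
    """Split a task across executors in proportion to their available CPU frequency.

    resources maps an executor name to (available_cpu_hz, price_per_gigacycle).
    Returns (cycles assigned to each executor, common finish time in s, total price).
    Transmission delay and cost are ignored in this sketch.
    """
    total_hz = sum(hz for hz, _ in resources.values())
    shares = {name: cycles * hz / total_hz for name, (hz, _) in resources.items()}
    # Each part needs shares[n] / hz = cycles / total_hz seconds, so all finish together.
    finish_s = cycles / total_hz
    price = sum(shares[name] / 1e9 * p for name, (_, p) in resources.items())
    return shares, finish_s, price
```

For a 6-gigacycle task with 1, 2, and 3 GHz available locally, on a nearby vehicle, and on the VEC server, every part finishes after 1 s; the monetary cost then depends only on how the prices differ across executors, which is where a cost-aware scheme would shift the ratios.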

B.SYSTEM SERVER OPTIMIZATION

1)SYSTEM UTILITY MAXIMIZATION

In computing offloading for VEC, system resources need to be balanced: ideally, no device is overloaded and none is idle, so that VEC resources are used efficiently and system utility is maximized. For specific scenarios, Liu et al. proposed an offloading strategy and resource allocation scheme for vehicular edge servers and fixed edge servers. Considering the randomness and uncertainty of vehicle communication, they reformulated the total utility maximization problem of the VEC network as a semi-Markov decision process and proposed a Q-learning-based reinforcement learning method and a DRL method to find the optimal computing offloading and resource allocation strategy [18]. Dai et al. proposed an AI-enhanced VEC and caching scheme. The proposed architecture integrates edge computing with intelligent caching, realizes cross-layer offloading, multi-point caching, and delivery, and improves system utility using DRL and deep deterministic policy gradient (DDPG) algorithms [19].
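Liu et al. [18] apply Q-learning to a semi-Markov decision process; the toy below shows only the underlying tabular Q-learning update on a far simpler, hypothetical problem. The state is the channel quality (bad or good), the action is local execution or offloading, the reward is the negative delay with made-up values, and the channel evolves independently of the action. It is a sketch of the technique, not a reproduction of [18].

```python
import random

def train_q(steps=20000, alpha=0.1, gamma=0.5, eps=0.2, seed=42):
    """Toy tabular Q-learning for a binary offloading decision.
    State: 0 = bad channel, 1 = good channel. Action: 0 = local, 1 = offload.
    Returns (greedy policy per state, Q-table)."""
    rng = random.Random(seed)
    # Hypothetical delays in seconds for each (state, action) pair.
    delay = {(0, 0): 2.0, (0, 1): 4.0, (1, 0): 2.0, (1, 1): 1.0}
    q = {(s, a): 0.0 for s in (0, 1) for a in (0, 1)}
    state = rng.randint(0, 1)
    for _ in range(steps):
        if rng.random() < eps:
            action = rng.randint(0, 1)                         # explore
        else:
            action = max((0, 1), key=lambda a: q[(state, a)])  # exploit
        reward = -delay[(state, action)]
        next_state = rng.randint(0, 1)  # channel changes independently of the action
        # Q-learning update toward the bootstrapped target r + gamma * max_a' Q(s', a').
        target = reward + gamma * max(q[(next_state, a)] for a in (0, 1))
        q[(state, action)] += alpha * (target - q[(state, action)])
        state = next_state
    policy = {s: max((0, 1), key=lambda a: q[(s, a)]) for s in (0, 1)}
    return policy, q
```

With these made-up delays the learned policy executes locally on a bad channel and offloads on a good one. Replacing the table with a neural network that approximates Q is what turns this into the DQN-style methods cited throughout this section.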

2)SYSTEM COST MINIMIZATION

Tan et al. developed a joint optimal resource allocation framework for communication, caching, and computing in VEC networks. For the resource allocation problem, they proposed a multi-timescale framework based on deep reinforcement learning that optimizes resource allocation under the limited storage capacity, limited computing resources, and strict delay constraints of vehicles and RSUs [20]. Zhang et al. formulated a joint caching and computing allocation strategy to minimize system cost. They proposed a mobility-aware proactive caching technique whose core idea is to prefetch video content and store it in the base station cache, and they used a KNN algorithm to find the optimal joint view set that maximizes the total reward and minimizes the system cost [21].

3)MISSION SUCCESS RATE MAXIMIZATION

Qiao et al. focused on cooperative edge caching and auxiliary caching and proposed a cooperative edge caching scheme that optimizes content placement and delivery; the joint optimization problem is formulated as a two-timescale Markov decision process [22]. Liang et al. modeled spectrum sharing in vehicular networks as a multi-agent reinforcement learning problem and solved it with a deep Q-network (DQN)-based method. The resulting V2V spectrum and power allocation scheme improves the payload delivery reliability of V2V links for the periodic sharing of safety-critical messages [23].

V.CHALLENGES FOR VEC

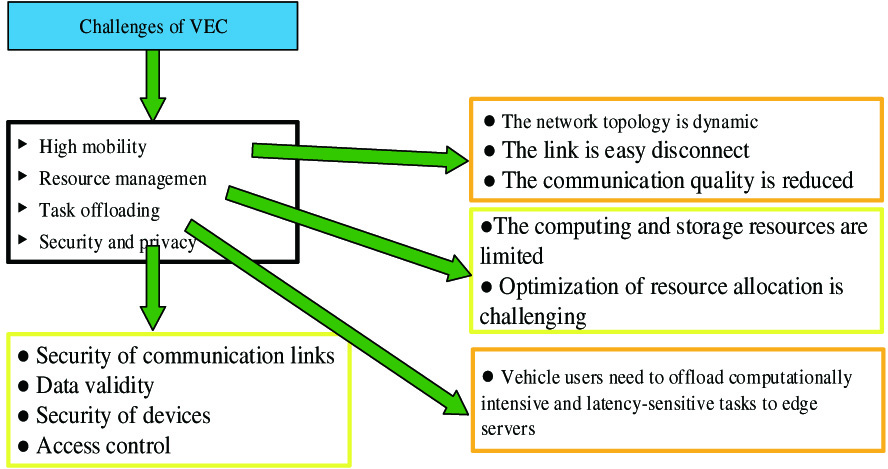

The task offloading environment of VEC is dynamic and random: its network topology, wireless channel state, and computing workload change rapidly. These uncertainties bring additional challenges to VEC. Fig. 7 shows the main challenges in VEC and their relationships.

A.HIGH MOBILITY

In IoV scenarios, the high mobility of vehicles and frequent handovers cause the network topology to change dynamically and communication links to break easily, which degrades communication quality. It is therefore imperative to develop mobility models that accurately identify vehicle mobility patterns in different practical environments, better perceive and learn vehicle information, and improve communication reliability and resource utilization. Junior et al. constructed a mobile offloading system (MOSys) that supports seamless offloading when users move between wireless networks, which effectively reduces offloading response time; the mobility management application of MOSys has been shown to be energy efficient [24]. Boukerche proposed a mobility-oriented predictive search protocol for offloading in VEC. The protocol uses position prediction to estimate the user's location and achieves more efficient search in terms of delay and retrieval rate [25].
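A simple way to use position prediction in offloading, in the spirit of (but not identical to) the protocol in [25], is to predict where the vehicle will be when its result is ready and deliver the result through the RSU that will cover it then. The sketch assumes constant-velocity motion along a straight road and circular RSU coverage; the function names, RSU names, and positions are hypothetical.

```python
def predict_position(x_m, speed_mps, dt_s):
    # Constant-velocity prediction along a straight road (simplifying assumption).
    return x_m + speed_mps * dt_s

def choose_return_rsu(x_m, speed_mps, service_time_s, rsus):
    """rsus: list of (name, center_m, radius_m).
    Return the RSU nearest to the predicted position among those covering it,
    or None if the vehicle is predicted to be outside all coverage."""
    future_x = predict_position(x_m, speed_mps, service_time_s)
    covering = [(abs(c - future_x), name) for name, c, r in rsus if abs(c - future_x) <= r]
    return min(covering)[1] if covering else None
```

For a vehicle at 250 m moving at 30 m/s, with RSUs centered at 0 m and 600 m (300 m range), a 1 s service time keeps the result at the current RSU, while a 5 s service time hands it to the next one; a None result signals that the task should be split, executed locally, or relayed through other vehicles.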

B.RESOURCE MANAGEMENT

Rapid channel variation in high-mobility vehicular environments makes it difficult for base stations to collect accurate instantaneous channel state information for centralized resource management. Edge servers also have limited computing and storage resources, which complicates resource allocation. Cognitive engines have been introduced into IoV to achieve more intelligent vehicle deployment and resource allocation. A cognitive engine can recognize users' content requirements and match content providers with requesters to improve cache hit rates: based on machine learning or deep learning (DL) methods, it perceives content requirements and recommends related content to RSUs or vehicles. Although this content acquisition method connects different vehicles, it also introduces reliability problems.

To address resource management, Lin et al. found that vehicular social edge computing (VSEC) can improve quality of experience and quality of service; to further enhance VSEC performance, they modeled network utility and maximized it by optimizing the management of available network resources [26]. Zhang et al. designed an efficient wholesale scheme to maximize the total expected profit of mobile edge servers and proposed a fast-converging real-time repurchase scheme that minimizes the repurchasing cost given the reserved computing resources [27]. Li et al. proposed an intelligent resource scheduling strategy to meet real-time requirements in edge-computing-supported smart manufacturing; the strategy achieved good real-time satisfaction and energy consumption performance for computing services [28]. Wang et al. proposed a mechanism for configuring and automatically scaling edge node resources and developed an edge node resource management framework; the results verified the potential of this method to improve transmission efficiency and reduce delay [29]. Wang et al. proposed a task scheduling strategy for edge computing scenarios in which network load balance is measured from the load distribution. Combined with reinforcement learning, the strategy continuously matches computing tasks to appropriate edge servers and satisfies the differentiated resource needs of tasks. It effectively reduces the traffic load between edge servers and the core network and reduces task processing delay [30]. You et al. proposed an energy-saving resource management strategy for an asynchronous mobile edge computing offloading system and designed an energy-saving offloading controller for it, eliminating the overhead of network synchronization and reducing offloading and computing delay [31]. Peng et al. proposed a multi-agent deep deterministic policy gradient (MADDPG)-based scheme in which resource allocation on MEC servers is formulated as a distributed optimization problem that maximizes the number of offloaded tasks while satisfying heterogeneous quality of service (QoS) requirements. The MADDPG model is trained offline, and vehicle association and resource allocation decisions are then made quickly in the online execution stage. Simulation results show that the MADDPG-based resource management scheme improves resource utilization and achieves high satisfaction ratios for the heterogeneous delay and QoS requirements [32]. A summary of MEC-based resource management is given in Appendix A.
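A very simple load-balancing baseline, and not the reinforcement learning strategy of [30], is to assign the largest tasks first, each to the server on which it would finish earliest (a longest-processing-time greedy heuristic). Load balance can then be measured from the resulting load distribution, here with Jain's fairness index, which is an illustrative choice of metric. The function names, server speeds, and task sizes are hypothetical.

```python
def assign_least_loaded(task_cycles, server_hz):
    """Largest tasks first, each to the server where it would finish earliest.
    Returns (busy time per server in s, list of (task_cycles, server_index))."""
    busy = [0.0] * len(server_hz)
    assignment = []
    for c in sorted(task_cycles, reverse=True):
        i = min(range(len(server_hz)), key=lambda k: busy[k] + c / server_hz[k])
        busy[i] += c / server_hz[i]
        assignment.append((c, i))
    return busy, assignment

def jain_index(loads):
    """Jain's fairness index: 1.0 for equal loads, 1/n if one server does all the work.
    Assumes at least one load is non-zero."""
    return sum(loads) ** 2 / (len(loads) * sum(x * x for x in loads))
```

For six tasks of 4, 3, 3, 2, 2, and 2 gigacycles on two 2 GHz servers, the heuristic ends with both servers busy for 4 s, giving an index of 1.0. Learning-based schedulers aim to keep this kind of balance when task arrivals, server speeds, and link conditions change over time.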

C.TASKS OFFLOADING

Most research on task offloading in VEC networks has rarely considered the load balance of computing resources across edge servers. To benefit from centralized network management and the multiple communication technologies supported by fiber-wireless (FiWi) networks, Zhang et al. introduced FiWi to enhance VEC networks. They proposed a software-defined-networking-based load-balancing task offloading scheme for FiWi-enhanced VEC networks to minimize the processing delay of vehicle computing tasks; verification shows that the scheme reduces processing delay by utilizing the computing resources of edge servers [33]. Zhan et al. formulated computing offloading as a game to solve dynamic offloading decision problems among edge computing users in a dynamic environment [34]. Tang et al. proposed a cost-effective, deadline-aware offloading method to provide efficient offloading services in MEC environments [35]. Zhang et al. proposed a meta-reinforcement-learning-based technique to solve computing offloading problems in MEC [36]. A summary of MEC-based computing offloading is given in Appendix B.

Many researchers have studied task offloading. Li et al. proposed a low-complexity heuristic algorithm to obtain the offloading strategy while ensuring load balance among multiple MEC servers, and then used Lagrange dual decomposition to solve the computing resource allocation subproblem [37]. Ding et al. proposed a cache-based non-orthogonal multiple access (NOMA) downlink heterogeneous network architecture, formulated a user coordination strategy, and allocated user power appropriately; they also built a new D2D-communication-based offloading system model for MEC and used utility function optimization and game theory to solve the computation offloading problem [38]. Ke et al. proposed a computing offloading and resource allocation scheme based on distributed reinforcement learning that accounts for the time-varying channel state, the workload arrival rate, and the signal-to-noise ratio; distributed reinforcement learning achieves optimal resource allocation and privacy protection without requiring prior knowledge [39]. Ding et al. proposed a new D2D-communication-based MEC offloading system model, reformulated the problem as utility function optimization, and used game theory to solve the computation offloading problem; the scheme converges well, improves the data transmission rate, and reduces task energy consumption [40]. Chen et al. studied the optimal computation offloading policy without prior knowledge of network dynamics and proposed a DQN-based algorithm. Exploiting the additive structure of the utility function, they combined a Q-function decomposition technique with double DQN to obtain a new learning algorithm for stochastic computation offloading. The problem of finding the optimal offloading policy was modeled as a Markov decision process, and offloading decisions were made according to the task queue state, the energy queue state, and the channel quality between mobile users and base stations [41]. Chen et al. proposed a task placement algorithm to solve the joint task placement and resource allocation problem; the scheme improved offloading efficiency and reduced task duration and energy consumption [42]. Su et al. proposed a hierarchical computing offloading strategy and developed a hierarchical offloading framework for network virtualization scenarios that exploits the advantages of MEC. In the first step, an incentive mechanism was designed subject to individual rationality and incentive compatibility constraints. After obtaining the CPU contributions of the edge computing nodes, the second step allocates computing resources between MEC servers and computing service subscribers; the problem was modeled as a Bayesian matching game with externalities, and an iterative matching algorithm that always converges to a stable result was developed. This strategy improved the computing power of nodes and allocated resources reasonably [43].
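A simplified computing resource allocation subproblem shows why Lagrangian methods are attractive here. Suppose, as an assumption rather than the formulation of [37], that one edge server with total CPU frequency F must be split among offloaded tasks with C_i cycles to minimize the sum of processing delays sum_i C_i / f_i subject to sum_i f_i = F. Setting the derivative of the Lagrangian to zero gives C_i / f_i^2 = mu for every task, so f_i = F * sqrt(C_i) / sum_j sqrt(C_j), and the minimum total delay is (sum_i sqrt(C_i))^2 / F. The function name below is hypothetical.

```python
import math

def allocate_cpu(cycles, total_hz):
    """Split an edge server's CPU among tasks to minimise sum_i C_i / f_i
    subject to sum_i f_i = total_hz (closed form from the Lagrangian)."""
    roots = [math.sqrt(c) for c in cycles]
    s = sum(roots)
    freqs = [total_hz * r / s for r in roots]  # f_i proportional to sqrt(C_i)
    total_delay = sum(c / f for c, f in zip(cycles, freqs))
    return freqs, total_delay
```

For tasks of 1, 4, and 9 gigacycles on a 12 GHz server, the split is 2, 4, and 6 GHz and the total delay is 3 s, compared with 3.5 s for an equal 4 GHz split. Real formulations add per-task deadlines, energy terms, or multiple servers, which is where dual decomposition becomes necessary instead of a closed form.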

D.PRIVACY PROTECTION AND SECURITY

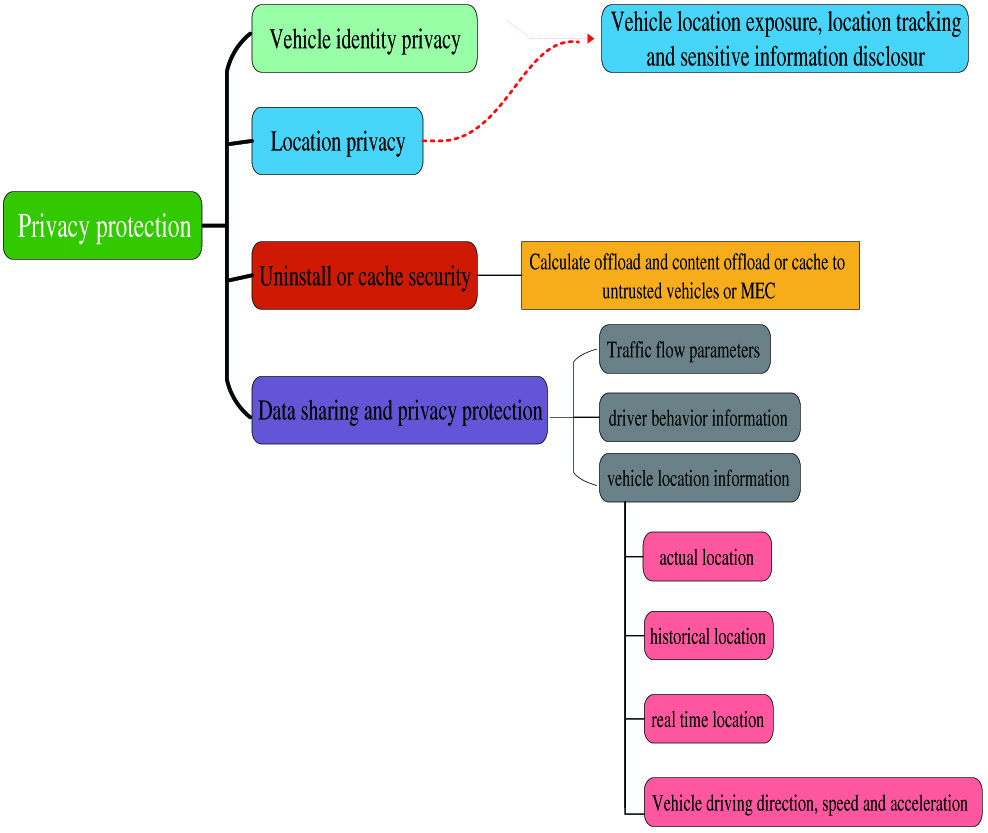

In VEC, the high mobility of vehicles makes it difficult to ensure reliability between nodes, and guaranteeing server security while solving the complex computing offloading problem remains an urgent research issue. Because of the particular nature of IoV services, vehicles need to broadcast their location information and exchange data with each other. Under such complex network conditions, hackers, malicious users, and malicious wireless nodes can infiltrate more easily, greatly threatening the security and privacy of the edge network environment [44]. An attacker can collect the information that vehicles broadcast and extract private information by analyzing it, and can even track an owner through the vehicle's driving trajectory, which causes serious security problems. Research in this area can be classified mainly by privacy type, as shown in Fig. 8.

Fig. 8. Types of privacy protection.


Recently, some researchers have focused on integrating blockchain into IoV to provide the security and privacy it requires. Blockchain-based access control has been recommended to prevent illegal access to the cloud and improve the security of computing offloading. Boukerche et al. proposed a reliable access control mechanism that effectively detects and prevents illegal offloading by devices; its purpose is to verify vehicle identities, offload tasks, and manage offloaded data to ensure system security and privacy [45]. Liu et al. proposed a blockchain-authorized group authentication scheme for vehicles with decentralized identities, based on secret sharing and a dynamic agent mechanism, which realizes cooperative privacy protection of vehicles and reduces communication overhead and computing cost [46]. Qian et al. proposed a privacy-aware service placement method for edge cloud systems. Service placement on the mobile edge cloud is modeled as a 0–1 problem, and a hybrid service placement algorithm combining a centralized greedy algorithm with distributed learning is proposed [47]. Shen et al. established a privacy game model to optimize the objectives of defenders and attackers and solved the problems of mutual privacy protection and task allocation [48]. Ma et al. proposed a biometric-based method to protect edge computing systems from illegal access [49].
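The access-control schemes above rest on the edge server verifying that an offloading request really comes from an authorized vehicle and was not altered in transit. The sketch below shows only that verification step, using an HMAC-SHA256 tag over a canonical JSON encoding of the request. It assumes a symmetric key already shared between vehicle and server; key provisioning and any blockchain-based authorization, as in [45] or [46], are out of scope, and the function names and request fields are hypothetical.

```python
import hashlib
import hmac
import json

def sign_request(key: bytes, request: dict) -> str:
    # Canonical JSON so that signer and verifier hash identical bytes.
    payload = json.dumps(request, sort_keys=True, separators=(",", ":")).encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()

def verify_request(key: bytes, request: dict, tag: str) -> bool:
    # compare_digest avoids leaking information through timing differences.
    return hmac.compare_digest(sign_request(key, request), tag)
```

Any change to the request, such as inflating the requested cycles, or a tag produced with a different key makes verification fail. HMAC alone provides integrity and authentication but not privacy, so location fields would still need pseudonyms or encryption.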

VI.OUTLOOK

With the rapid development of IoV, many researchers have conducted in-depth research on VEC networks, yet many technical problems in VEC computing offloading remain unsolved. We list some future research hotspots and trends in computing offloading below.

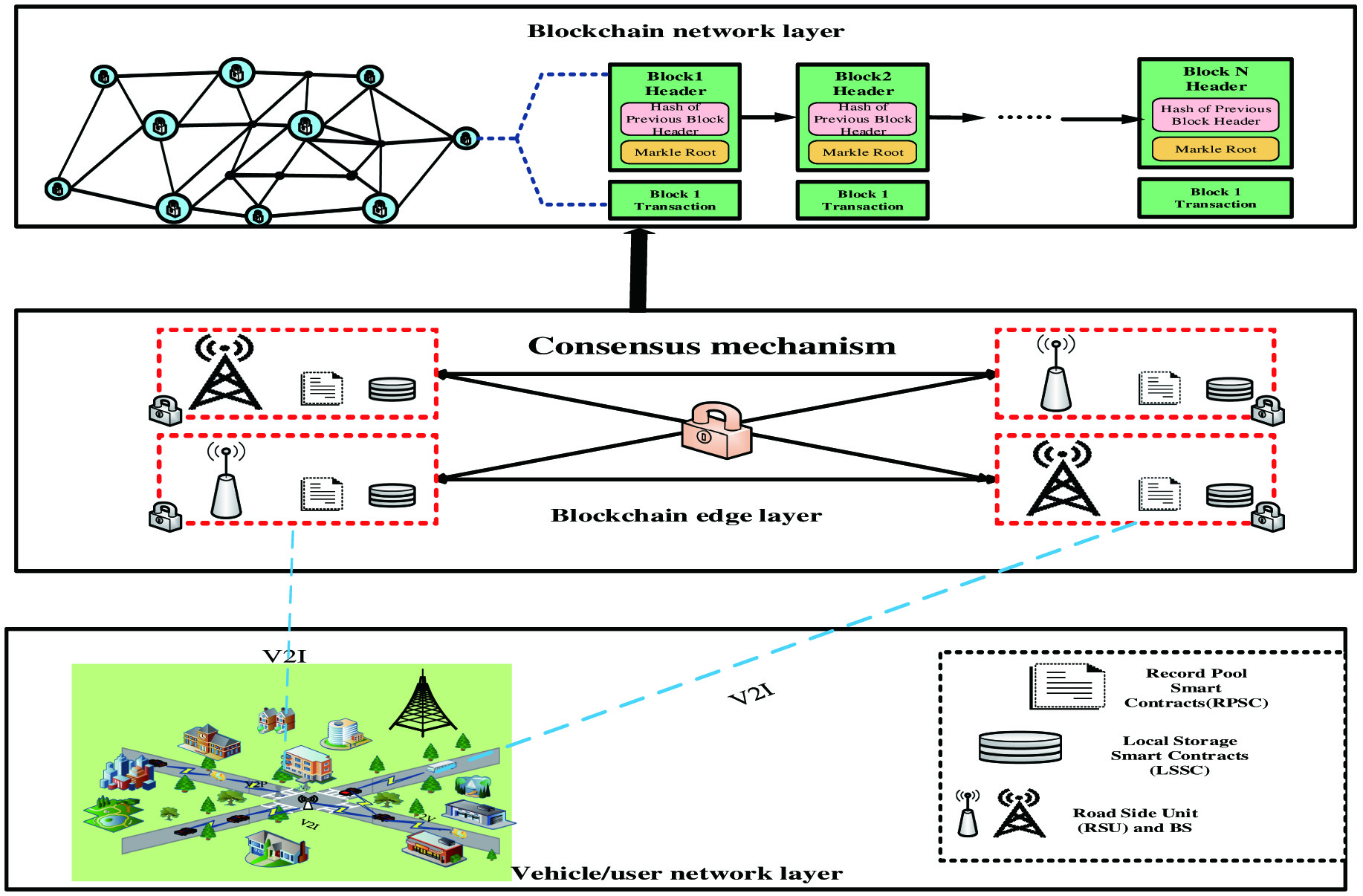

A.VEC BASED ON BLOCKCHAIN

Integrating blockchain with VEC computing offloading can provide a secure data transmission and sharing mechanism. Blockchain can protect cached content and data from malicious node attacks and denial-of-service attacks in the dynamic VEC network environment, while MEC provides the blockchain with computing resources for its consensus algorithms, ensuring efficient data processing and transmission by edge nodes. By adopting blockchain in IoV, security threats such as interruption, single points of failure, and availability attacks can be addressed. Blockchain builds a decentralized platform, establishes a trust mechanism, protects vehicle privacy, and reduces communication delay. Yang et al. studied the integration of blockchain and edge computing, using their distributed management and services to meet the security, scalability, and performance requirements of future networks and systems [50]. Ma et al. proposed a blockchain framework that provides a unified scheme for reliable cooperation among edge servers in MEC environments, establishing trust among servers and enabling real-time cooperation and task sharing [51]. Liu et al. proposed a blockchain model that supports cross-domain authentication. The model overcomes a shortcoming of identity-based encryption (IBE), which is unsuitable for large-scale cross-domain architectures, by allowing communication across different IBE and public key infrastructure domains while ensuring the security of resource and service sharing in distributed multi-domain network environments. Taking advantage of the distributed and immutable characteristics of blockchain, the model is highly scalable and does not change the internal trust structure of each authentication domain [52]. Guo et al. built a consortium blockchain network that stores authentication data and logs, using an optimized practical Byzantine fault tolerance (PBFT) consensus algorithm, to ensure trusted authentication and make terminal activities traceable [53]. Rehman et al. proposed blockchain-based, reputation-aware, fine-grained federated learning to ensure trustworthy cooperative training in MEC systems [54]. Data processing that combines blockchain with computing offloading is therefore expected to be a focus of future VEC research.

Fig. 9 shows the architecture of a blockchain-based VEC network (VECN), which is divided into three planes: the user plane, the data plane, and the storage plane. The storage plane is composed of block headers, and its main function is to package transaction records. The data plane consists of RSUs deployed along both sides of the road and their affiliated MEC servers. The user plane consists of the vehicle users driving on the road.
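The tamper evidence that such a storage plane relies on comes from each block header committing to the hash of the previous block. The sketch below is a minimal SHA-256 hash chain of offloading records; it has no consensus, signatures, or networking, and the function names and record fields (vehicle, RSU, task) are hypothetical.

```python
import hashlib
import json

def block_hash(block: dict) -> str:
    # Hash a canonical JSON encoding so equal blocks always hash identically.
    return hashlib.sha256(json.dumps(block, sort_keys=True).encode()).hexdigest()

def append_block(chain: list, records: list) -> None:
    # The new header commits to the hash of the previous block ("0" * 64 for genesis).
    prev = block_hash(chain[-1]) if chain else "0" * 64
    chain.append({"index": len(chain), "prev_hash": prev, "records": records})

def verify_chain(chain: list) -> bool:
    # Every block after the first must reference the current hash of its predecessor.
    return all(chain[i]["prev_hash"] == block_hash(chain[i - 1])
               for i in range(1, len(chain)))
```

Altering a record in any block breaks the `prev_hash` link of the block after it. Tampering with the newest block is only detectable once a later block commits to it, which is one reason practical systems pair hash chaining with a consensus protocol such as the PBFT variant mentioned for [53].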

B.VEC BASED ON EDGE INTELLIGENCE

Edge intelligence (EI) refers to AI that runs in edge computing environments and is carried out by edge devices. From the perspectives of agile connectivity, real-time services, data optimization, application intelligence, and security and privacy protection, EI brings intelligence to terminals to meet the essential needs of industrial digitization. Deng et al. divided EI into intelligence-enabled edge computing (AI for edge) and AI on edge. The former uses AI technology to provide better solutions to key problems in edge computing. The latter studies the whole process of building AI models at the edge, including model training and inference, which offers a broader vision for this new interdisciplinary field [55]. Li et al. proposed a device-edge collaborative inference framework for DL models. To achieve low-delay EI, it adaptively partitions DNN computation between mobile devices and edge servers according to the available bandwidth, exploiting the processing capacity of edge servers while reducing data transmission delay [56]. Salman Raza et al. proposed an edge computing framework based on Intel Up-Squared boards and Neural Compute Sticks that provides vision, voice, and real-time control services. Based on this framework, they built a guide dog robot prototype capable of obstacle avoidance, traffic sign recognition and following, and voice interaction with humans [57]. Zhou et al. proposed a method for intelligent collaborative edge computing in the Internet of Things that integrates AI and edge computing so that each complements the other [58]. Xu et al. proposed a data-driven EI method for network anomaly detection. They first analyzed the main motivation for EI and its application to network anomaly detection, then proposed an edge computing-based intelligent system for network anomaly detection, and finally proposed a data-driven detection scheme. The scheme has four stages that combine statistical and data-driven methods to train a learning model that robustly detects and identifies network anomalies [59]. EI can therefore reduce the delay and cost of data processing, keep raw data on edge or terminal devices to protect private data, and promote the adoption of DL. In turn, diversified DL services extend the business value of edge computing. The combination of EI and DL is expected to become an important direction for the future development of VEC.
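
The device-edge partitioning idea attributed to Li et al. [56] can be sketched as an exhaustive search over split points. This is a simplified latency model, not the published framework: the per-layer latencies, output sizes, and bandwidths below are hypothetical, the helper name `best_partition` is ours, and the time to return results to the vehicle is ignored.

```python
from typing import Sequence, Tuple


def best_partition(
    device_ms: Sequence[float],
    edge_ms: Sequence[float],
    out_bytes: Sequence[float],
    input_bytes: float,
    bandwidth_bps: float,
) -> Tuple[int, float]:
    """Choose split point k minimizing end-to-end latency (ms).

    Layers [0, k) run on the vehicle and layers [k, n) on the edge server.
    k = 0 uploads the raw input; k = n runs the whole model locally.
    device_ms[i], edge_ms[i]: profiled latency of layer i on each side.
    out_bytes[i]: size of layer i's output, uploaded if the split follows it.
    """
    n = len(device_ms)
    if len(edge_ms) != n or len(out_bytes) != n:
        raise ValueError("per-layer profiles must have equal length")
    best_k, best_latency = 0, float("inf")
    for k in range(n + 1):
        local = sum(device_ms[:k])
        remote = sum(edge_ms[k:])
        if k == n:
            transfer = 0.0  # nothing leaves the vehicle
        else:
            sent = input_bytes if k == 0 else out_bytes[k - 1]
            transfer = sent * 8 * 1000.0 / bandwidth_bps  # bits / bps = s; x1000 = ms
        latency = local + transfer + remote
        if latency < best_latency:
            best_k, best_latency = k, latency
    return best_k, best_latency


if __name__ == "__main__":
    # Hypothetical 4-layer profile in which each layer shrinks its output.
    dev = [30, 40, 50, 20]
    edge = [3, 4, 5, 2]
    out = [400_000, 100_000, 20_000, 1_000]
    for bw in (8e6, 20e6, 80e6):
        print(bw, best_partition(dev, edge, out, 600_000, bw))
```

With this profile, the whole model stays on the vehicle at 8 Mbit/s (140 ms), splitting after the second layer is best at 20 Mbit/s (117 ms), and uploading the raw input wins at 80 Mbit/s (74 ms), which illustrates why the split point has to adapt to the available bandwidth.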

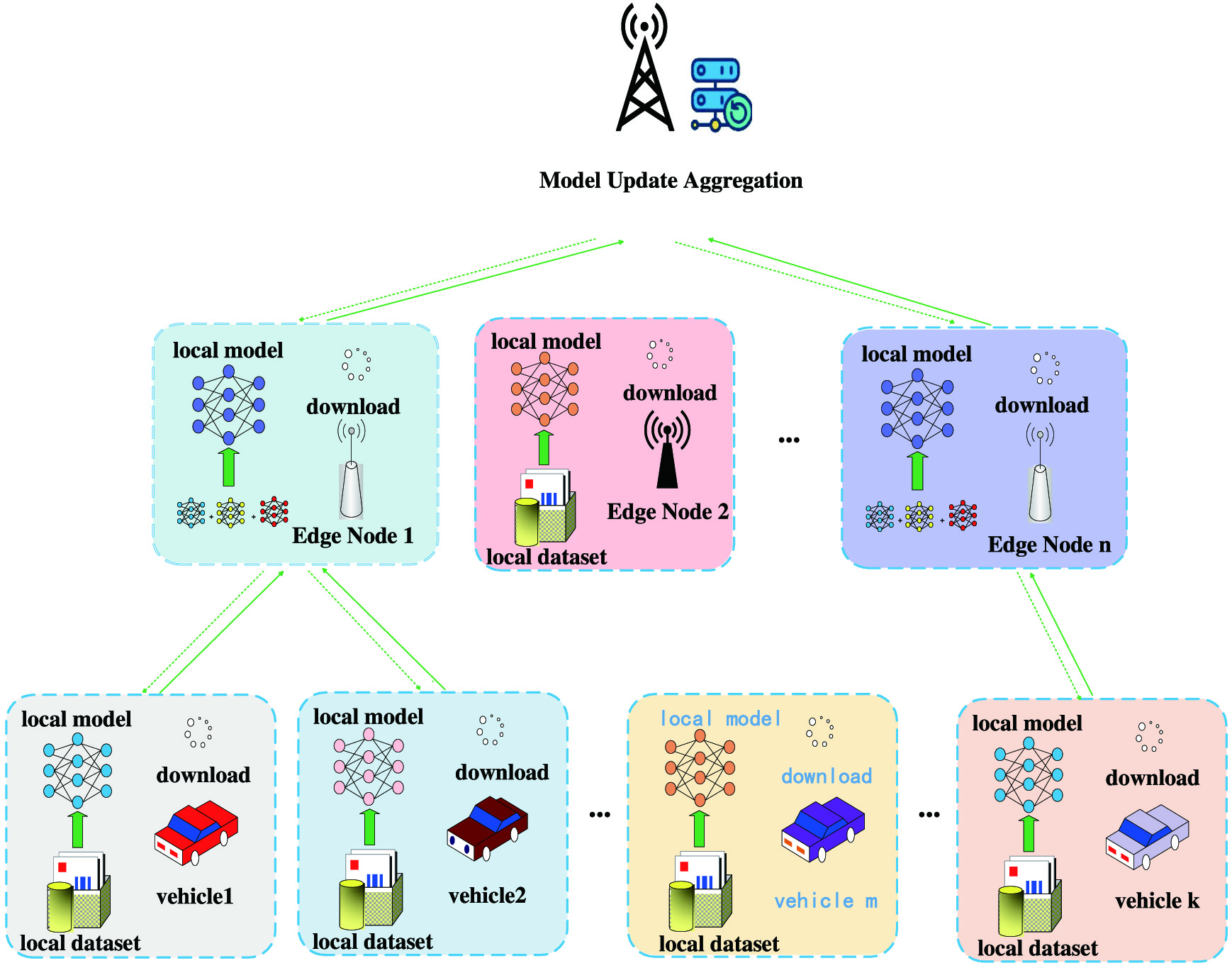

C.VEC BASED ON FEDERATED LEARNING

As a distributed machine learning architecture, federated learning aims to protect the data security and privacy of terminal devices and to achieve energy-efficient machine learning across computing nodes. On the one hand, edge computing helps federated learning save network resources, shorten training time, and strengthen data privacy protection. On the other hand, terminal devices and edge nodes have limited communication resources and computing capability because of their software and hardware characteristics, and federated learning can provide effective solutions for such edge computing systems. As shown in Fig. 10, federated learning-oriented computing task offloading in the VEC network involves vehicle information, processing at edge nodes, and model aggregation. Vehicle information is downloaded to the local database, and the local database and edge nodes deliver and update local models, forming a local model aggregation system. In this process, multiple edge servers interact with multiple heterogeneous edge nodes, each of which stores its own user data. Zhou et al. proposed an efficient asynchronous federated learning mechanism for edge network computing that reduces data transmission delay [60]. Ye et al. proposed a federated learning-based edge computing method that lowers the cost for terminals participating in federated learning while protecting user privacy [61]. Zhang et al. proposed a federated learning framework for mobile edge computing that integrates model partitioning and differential privacy, providing strong privacy guarantees while reducing the heavy computational cost of DNN training on edge devices [62].
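
The model aggregation step in Fig. 10 is not specified in detail here. A common choice in the federated learning literature is FedAvg, which averages local models weighted by each participant's number of training samples; the sketch below uses that rule as a stand-in, assumes each vehicle or edge node uploads a flat parameter vector, and uses the hypothetical helper name `fedavg`.

```python
from typing import List, Sequence


def fedavg(local_models: Sequence[Sequence[float]], num_samples: Sequence[int]) -> List[float]:
    """FedAvg-style aggregation: average parameter vectors weighted by sample count.

    local_models[i] is the flattened parameter vector uploaded by participant i
    (a vehicle or edge node); num_samples[i] is how many local samples it used.
    Raw training data never appears here, only the uploaded parameters.
    """
    if not local_models or len(local_models) != len(num_samples):
        raise ValueError("need one sample count per uploaded model")
    total = sum(num_samples)
    if total <= 0:
        raise ValueError("total sample count must be positive")
    dim = len(local_models[0])
    global_model = [0.0] * dim
    for params, n in zip(local_models, num_samples):
        if len(params) != dim:
            raise ValueError("all participants must share one model shape")
        weight = n / total
        for j in range(dim):
            global_model[j] += weight * params[j]
    return global_model


if __name__ == "__main__":
    # Two hypothetical vehicles; the second trained on three times as much data.
    print(fedavg([[1.0, 2.0], [3.0, 4.0]], [100, 300]))  # [2.5, 3.5]
```

Weighting by sample count keeps a vehicle that saw little data from pulling the global model as hard as one that saw a lot; asynchronous schemes such as the one cited in [60] change when updates arrive, not this basic averaging idea.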

Fig. 10. Federated learning-oriented computing task offloading in VEC.

Combining VEC offloading tasks with terminals often raises security problems. Blockchain can give edge computing encryption, tamper resistance, and decentralization, laying a foundation for solving its security problems. Lu et al. designed an adaptive blockchain-empowered federated learning framework for robust EI, together with a digital twin wireless network model that incorporates digital twins into wireless networks to reduce unreliable long-distance communication between users and base stations. With this model, data analysis can be performed directly on the digital twins at the base station, enhancing the security and privacy of digital twin data [63]. Chu proposed a scalable, neural network-based blockchain and task offloading technique for mobile edge computing scenarios to address the high computing cost and large data propagation delay of traditional blockchain data offloading [64]. Shahbazi proposed combining blockchain, edge computing, and machine learning to develop and promote intelligent manufacturing systems, addressing the data offloading, heavy computation, and data transmission delay problems of blockchain [65].

With blockchain-enabled federated learning, VEC offloading takes over terminal computing tasks, which can significantly reduce data interaction delay and network transmission overhead. VEC offloading data are therefore likely to be processed at the edge.

D.VEC BASED ON DL

Ubiquitous sensors and intelligent devices in factories and communities generate large amounts of data, and growing computing power is pushing computing tasks and services from the cloud to edge networks. Because of efficiency and latency limitations, the current cloud computing service architecture hinders the vision of providing AI “for any organization and anyone, anytime, anywhere.” One possible solution is to deliver DL services using the resources of the edge network, which is close to the data source. As a representative AI technology, DL can be integrated into the edge computing framework to build intelligent edges and achieve dynamic, adaptive edge maintenance [66]. The integration of DL and computing offloading in VEC mainly involves the following aspects.

- (1) DL applications on the edge. Build a DL-based technical framework for VEC that provides intelligent services.

- (2) DL inference at the edge. Focus on the practical deployment of DL and inference in VEC to meet requirements such as accuracy and delay.

- (3) VEC offloading network architecture for DL. Focus on how the network architecture, hardware, and software of the edge computing platform can be adapted to DL computation.

- (4) DL training at the edge. Train edge intelligence models on distributed edge devices under resource and privacy constraints.

- (5) DL for optimizing the edge. Use DL to maintain and manage aspects of the VEC offloading network such as edge caching, computing offloading, communication, networking, and security protection.
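
Item (5) lists computing offloading among the edge functions that DL is used to manage. Whatever policy makes the decision, it is usually scored against a delay model; the simplest baseline compares local execution with upload-plus-edge execution. The sketch below assumes a single task, a fixed uplink rate, and no result download or queueing; the names `Task`, `local_delay`, `edge_delay`, and `decide` are hypothetical, and a learned policy would replace `decide` rather than this cost model.

```python
from dataclasses import dataclass
from typing import Tuple


@dataclass
class Task:
    data_bits: float   # input data uploaded if offloaded (bits)
    cpu_cycles: float  # computation the task needs (CPU cycles)
    deadline_s: float  # maximum tolerable delay (seconds)


def local_delay(task: Task, f_vehicle_hz: float) -> float:
    # Execution on the vehicle's own processor.
    return task.cpu_cycles / f_vehicle_hz


def edge_delay(task: Task, uplink_bps: float, f_edge_hz: float) -> float:
    # Upload over the vehicle-to-RSU link, then execute on the MEC server.
    # Result download and queueing are ignored in this sketch.
    return task.data_bits / uplink_bps + task.cpu_cycles / f_edge_hz


def decide(task: Task, f_vehicle_hz: float, uplink_bps: float,
           f_edge_hz: float) -> Tuple[str, float, bool]:
    """Pick the lower-delay option and report whether it meets the deadline."""
    t_local = local_delay(task, f_vehicle_hz)
    t_edge = edge_delay(task, uplink_bps, f_edge_hz)
    if t_edge < t_local:
        return "edge", t_edge, t_edge <= task.deadline_s
    return "local", t_local, t_local <= task.deadline_s


if __name__ == "__main__":
    task = Task(data_bits=4e6, cpu_cycles=2e9, deadline_s=0.5)
    print(decide(task, f_vehicle_hz=1e9, uplink_bps=20e6, f_edge_hz=10e9))
    # ('edge', 0.4, True): 0.2 s upload + 0.2 s edge execution vs 2.0 s locally
    print(decide(task, f_vehicle_hz=1e9, uplink_bps=2e6, f_edge_hz=10e9))
    # ('local', 2.0, False): a 2 s upload makes offloading slower than local execution
```

The second call shows why vehicle mobility matters: when the uplink rate drops, the same task flips from offloading to local execution and misses its deadline either way.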

Edge DL aims at seamless training across vehicles or edge nodes while addressing the challenges of non-independent and identically distributed (non-IID) training data, limited communication, unbalanced training contributions, privacy, and security in VEC. The integration of DL and VEC could therefore be one of the future development directions for DL training at the edge.

VII.CONCLUSIONS

Offloading computing-intensive and delay-sensitive tasks to edge servers is of great significance for vehicle terminals, whose demands for computing and storage resources keep growing, and it is an important driving force for VEC development. In this paper, we survey and analyze the existing literature on VEC and introduce VEC in detail, including its architecture, application scenarios, computing offloading, and recent studies. We then discuss the challenges facing VEC, future research directions, and development trends. In summary, research on VEC is still in its infancy, and many problems remain to be solved. We hope that this survey provides a useful reference for further research on computing offloading in VEC.