I. INTRODUCTION

Bird eye chili is a widely used and flavorful ingredient in cuisines around the globe. Plucking and sorting these tiny chili peppers is challenging and time-consuming, and it often requires a lot of manual effort. Recent advances in computer vision (CV) technology make it possible to automate this process using You Only Look Once (YOLO) algorithms. With this technology, bird eye chili can be plucked and sorted more efficiently and accurately, saving valuable time and labor costs for farmers and producers. This paper discusses how YOLO and advances in CV can change the way bird eye chili is sorted for the market.

Capsicum annuum, more often known as bird eye chili, goes by numerous names; kanthari mulaku is one of the more common ones. In South Asian cuisine and traditional plant medicine, it is used as a seasoning and appetite stimulant. It is credited with many medicinal benefits, including aiding weight loss, clearing the airways, stimulating digestion, and lowering blood pressure and cholesterol levels [1]. A faster metabolism and a lower cholesterol level reduce the risk of heart failure, artery blockage, and other cardiovascular disorders [2]. Bird eye chili is also reported to be particularly potent in killing cancer cells without harming healthy ones, and its high vitamin B2 content makes it beneficial for the eyes. These characteristics elevate bird eye chili above other common green chilies, making it the preferred kind, and they keep it in high demand on the global market. Picking, sorting, and grading bird eye chilies have become more valuable due to the global premium placed on high-quality products [3]. A uniform appearance increases the likelihood that consumers will purchase a product. Manually selecting and sorting chili peppers takes a lot of time and effort. One method relies on experienced inspectors to select and grade chilies, although this approach is prone to bias.

Smart farming is a form of agricultural automation that uses automated devices to increase productivity and quality while reducing the amount of human intervention required [4]. Because of their small size, bird eye chilies are difficult to harvest mechanically. Grading and sorting of red and green bird eye chilies is still a completely manual operation based on visual examination. Factors such as visual impairments, inspector fatigue, and varying opinions on quality reduce the method’s reliability [5]. Quality may also drop if too much time is spent sorting the chilies, so procedures and tools that support early decisions are needed before the chilies are sorted. Alternative technology such as image analysis can be used in place of manual judgments of size, color, texture, firmness, odor, and touch. Automation, fast screening, and fewer human errors are the most often cited advantages of image processing in industrial and other settings. The proposed sorting technique uses CV and is expected to offer a long-term and efficient solution for classifying red and green bird eye chilies.

This section has introduced the role and current research trends of CV in agriculture and the motivation for automating bird eye chili sorting. The remainder of the paper is organized as follows. Section II reviews related work and frames the research questions and objectives. Section III explains the research methodology and system design, as well as the significance of the research. Section IV covers model development, the robotic arm design, and experimental results. Section V concludes with a summary of the significant findings.

II. RELATED WORK

Classifiers such as the support vector machine (SVM) and k-nearest neighbor (k-NN) are examples of how machine learning is used, beyond agricultural monitoring, to tackle classification problems in image processing and object identification [6]. Machine learning models have proven effective at accurately classifying objects in many settings, which is a testament to their versatility, and they hold great analytical potential for the agricultural industry. In today’s industries, where sorting items can be laborious and time-consuming, automation is urgently needed to boost productivity and cut labor costs. Abu Salman Shaikat et al. [7] studied the automatic sorting of objects by color and height. CV sorts objects according to their color, while the Haar cascade approach sorts them according to height. To do this, a CV system is combined with a robotic arm with six degrees of freedom (DoF).

Using the open-source HI-ROS framework, Mattia Guidolin [8] proposed a real-time human motion recognition method based on 3D images acquired by camera. Chanchal Gupta et al. [9] investigated technology for phenotyping and plant growth monitoring, in which a real-time image processing technique calculates the height and width of jalapeño plants in a garden. The method is implemented with OpenCV modules in the PyCharm editor and evaluated using the root-mean-square error (RMSE). Md. Abdullah et al. [10] proposed a system that automatically categorizes objects based on their color, shape, and size. A PixyCMU camera sensor determines the colors of the objects, and the size and shape of an item are determined from its outline. A robotic arm driven by a stepper motor and other essential components was developed to streamline the pick-and-place sorting procedure. Lennon Fernandes et al. [11] demonstrated automatic item classification based on color and shape using a robotic arm. Outlines are determined using the modified border fill approach, and shapes inside those borders are identified using the Douglas–Peucker algorithm.

Hasmath Farhana Thariq Ahmed [12] introduced a learning framework that relies on channel state information (CSI), a metric used in Wi-Fi sensing, to improve recognition. Juan Daniel et al. [13] developed a technique using convolutional neural network (CNN) designs to enhance the efficacy of quality assurance inspections, particularly for spotting bruising. The system’s near-infrared and color CMOS sensors use different types of light. Human error in manually sorting tomatoes by color led to the development of a computerized tomato sorting system, which uses two TCS3200 RGB color sensors to check whether a tomato is ripe for human consumption. Image processing features such as color, shape, and histogram of gradients may be used to classify produce [14]. Arnab Rakshit [15] proposed a modified CNN-based approach that helps a robotic arm identify items with an RGB-D camera, pick them, and place them in the right spot automatically, saving both time and effort. Hiram Ponce [16] developed a similar method for detecting nutrient deficiencies in tomato crops by analyzing leaf images. His CNN-based classifier, CNN+AHN, uses a set of convolutional layers for feature extraction, which improves efficiency over standard CNN methods.

Iman Iraei [17] proposed a novel method to identify the blur elements in a blurred image. Frequency-domain and wavelet-based features are used for feature extraction and to estimate the blur length in an image. Accurate image detection is needed for any automated system. Yingming Wang [18] aimed to maintain accuracy on high-resolution aerial images by detecting objects with rotated bounding boxes, which help during training and improve detection accuracy.

Since farming is the primary source of income in India, improvements in agricultural technology are particularly pressing there. Such improvements raise farm productivity while reducing expenses [19]. Meer Hannan Dairath [20] developed an alternative approach to picking and grading fruits using CV technology, in which fruits are detected by color scheme conversion, masking of normal skin, masking of defects, and morphological dilation operations. Anand Kumar Pothula [21] evaluated the singulating and rotation performance of a sorting system for automatic inspection and grading of apples in terms of conveying speed, fruit size, and the percentage of apple surface area exposed for imaging. Dataset creation is essential for CNN frameworks such as YOLO. Yogesh Suryawanshi [22] developed a dataset of four vegetables labeled as unripe, ripe, old, dried, or damaged. The dataset was built by capturing real-time images of the vegetables under various conditions. Md Hafizur Rahman [23] followed a similar approach to dataset creation and designed a system that automatically identifies the position of a facemask and alerts the individual before entering a crowded area. The model is based on MobileNet and is trained on real-time images of individuals wearing a facemask.

To overcome sorting errors, we developed and deployed a YOLO V5 framework on a Raspberry Pi 4B computer to sort bird eye chilies in real-time. The developed CV-assisted robotic system uses an autonomous tool to inspect and grade chilies by differences in color as they move along a conveyor belt.

III. METHODOLOGY AND DESIGN

Bird eye chilies have recently been the subject of research in CV and machine learning because of their popularity and unique features. The YOLO V5 object detection model has been used to classify bird eye chilies by size, shape, and color. YOLO is a state-of-the-art approach to object detection that is widely used in academia and industry [24]. Unlike earlier object detection algorithms, YOLO was designed for both speed and accuracy, making it well suited to real-time applications such as autonomous vehicles and surveillance systems [25]. CV and machine learning can also be used to identify and count individual peppers. This paper explores the possibility of counting bird eye chilies with the help of the YOLO object detection algorithm.

To begin counting bird eye chilies, the YOLO object detection system requires a set of labeled images. To obtain it, we capture photos of bird eye chilies at different stages of growth and annotate them with bounding boxes that identify the chilies in each image. We use this information to train the YOLO algorithm to recognize bird eye chilies in photographs. A labeled image collection is likewise required for YOLO to be useful for bird eye chili classification. The ideal set would contain several distinct varieties of chilies, each accurately labeled to indicate its size, color, and shape. Annotation may be done either by hand or with currently available annotation systems that automatically detect and label objects in photos [26]. Once a labeled training set is available, a deep learning framework such as PyTorch or TensorFlow may be used to train the YOLO model. A deep neural network is given the data and trained to recognize the features useful for classifying chilies. Training continues until the desired level of accuracy is achieved on both the training data and the validation data. The trained model may then be used to classify new images of chilies. The YOLO model takes an image as input and produces as output a set of bounding boxes representing the positions of objects in the image. It can recognize bird eye chilies even when there are other objects in the image’s background. This is useful for farmers who wish to monitor their chili plants and predict their yield. Growers may save time and effort by using the YOLO method instead of labor-intensive hand counting while harvesting bird eye chilies.

A. ARCHITECTURE OF THE PROPOSED SYSTEM

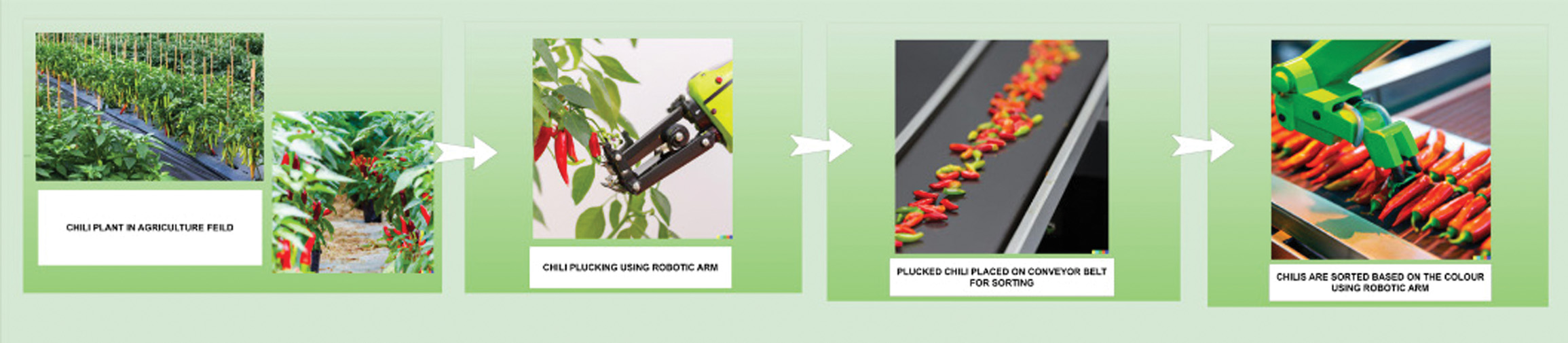

Figure 1 provides a visual representation of the proposed process. The YOLO-based design allows the system to recognize colors and distinguish between green and red chilies using CV technology [27]. The device uses a motorized tool and a mechanical actuator controlled by a Raspberry Pi 4B to perform the sorting. We propose a self-sufficient agricultural instrument for sorting chilies by color [28]. The proposed system employs a YOLO architecture for fast CV-based detection of red and green bird eye chilies. The Raspberry Pi 4B, one of a family of small single-board computers, is used to control the position of the autonomous tool. It is powered by a Broadcom BCM2711 quad-core Cortex-A72 64-bit SoC running at 1.5 GHz. The algorithm uses a reference set of five images to pinpoint the exact location of each chili in the real-time images.

Fig. 1. YOLO-based, computer-vision-assisted system for classifying and sorting bird eye chili, built on the Raspberry Pi 4B computer.


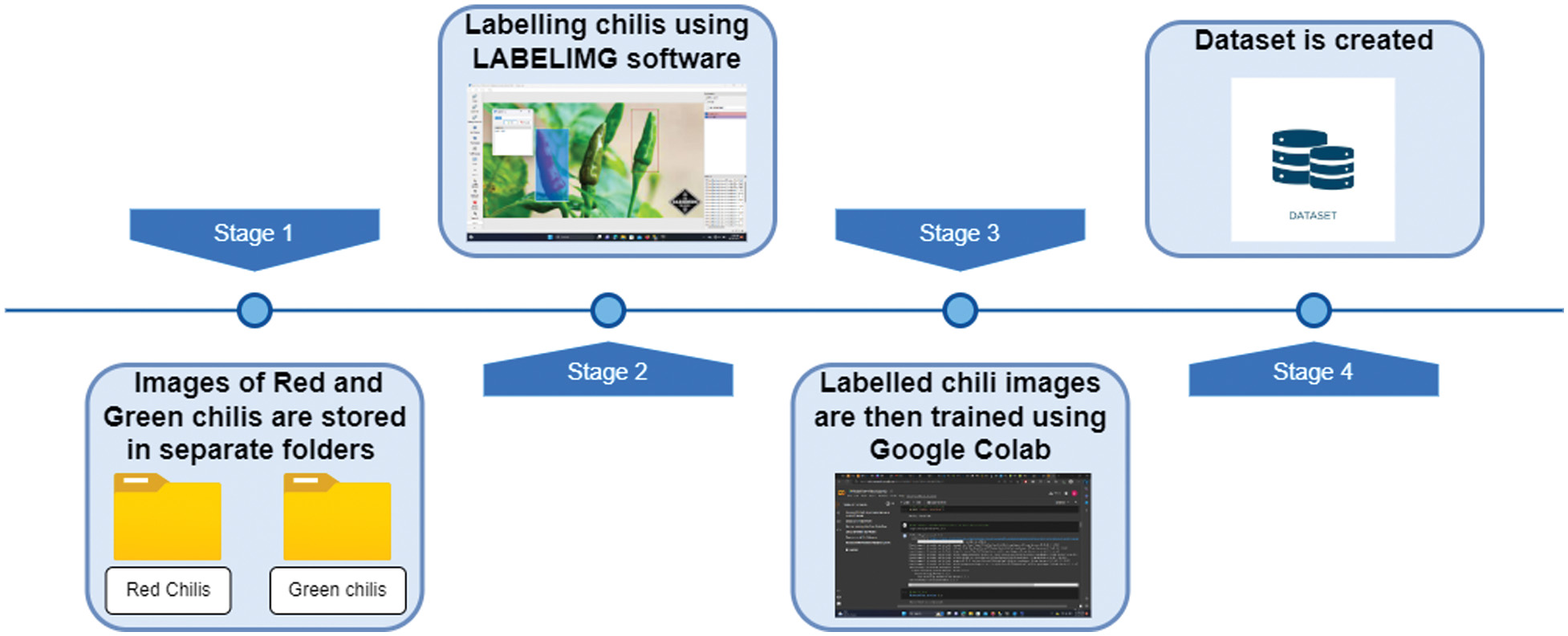

The labeling and training processes needed to produce the datasets are shown in Fig. 2. To train a classification model, we need to collect many images of chili peppers and assign meaningful labels by hand. Each image is paired with a text file that records the corresponding category, red or green chili. The model is then trained on these records using the Google Colab platform. A weight_chili.weights file is generated when training is complete.
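To make the per-image text file more concrete, the following sketch converts a pixel bounding box into one line of the plain-text annotation layout commonly used by YOLO tools: a class index followed by the normalized box center, width, and height. The class indices (0 for red, 1 for green), the function name, and the image size in the usage note are assumptions chosen for illustration; they are not taken from this work.

```python
# Hypothetical class indices; the actual mapping used in this work is not stated.
CLASS_IDS = {"red_chili": 0, "green_chili": 1}


def to_yolo_line(label, box, image_width, image_height):
    """Convert a pixel box (x_min, y_min, x_max, y_max) into a YOLO label line."""
    x_min, y_min, x_max, y_max = box
    # YOLO annotations store the box center and size, normalized to [0, 1].
    x_center = (x_min + x_max) / 2 / image_width
    y_center = (y_min + y_max) / 2 / image_height
    width = (x_max - x_min) / image_width
    height = (y_max - y_min) / image_height
    return f"{CLASS_IDS[label]} {x_center:.6f} {y_center:.6f} {width:.6f} {height:.6f}"
```

For example, a red chili occupying pixels (100, 200) to (300, 400) in an assumed 640 x 480 image yields the line `0 0.312500 0.625000 0.312500 0.416667`.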

Fig. 2. The logic behind the generation and labeling of red and green chili datasets.


Seven hundred and thirty-nine images of red and green chilies were used to create the dataset for the algorithm. We used the labeling application to rename each file and give it a category label. Labeling a photograph automatically creates a text file alongside the image file, and both are saved in the designated location and stored in a repository. The compressed dataset is uploaded to a Google Drive subfolder, and the images are then used for training on the Google Colab graphics processing unit (GPU) platform. GPU processing is enabled in Google Colab, and the necessary tools are imported before training. Captured images are then evaluated against the trained model.
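Because training is monitored on both training and validation data, the labeled images have to be divided before they are uploaded. The sketch below shows one simple way to do this. The 80/20 ratio, the fixed seed, and the file names in the test are assumptions; the split actually used in this work is not stated.

```python
import random


def split_dataset(image_names, train_fraction=0.8, seed=0):
    """Shuffle image names reproducibly and split them into train and validation lists."""
    names = sorted(image_names)          # sort first so the result is independent of input order
    random.Random(seed).shuffle(names)   # seeded shuffle keeps the split repeatable
    cut = int(len(names) * train_fraction)
    return names[:cut], names[cut:]
```

With 739 images and the assumed 80/20 ratio, this gives 591 training images and 148 validation images.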

In summary, the YOLO object detection technique can be used to categorize bird eye chilies based on their size, color, and general appearance. Combined with annotated datasets and deep learning frameworks, the YOLO model could be a helpful tool for tracking food quality and determining how long perishable goods can be stored. It has many potential uses, including in agriculture (particularly vegetable cultivation), processing, pest detection [29], and food preservation [30].

IV. EXPERIMENTAL SETUP

The experimental setup will consist of the following components:

- Conveyor Belt: The chilies are transported by conveyor belt to the inspection and sorting machinery. For reliable identification and sorting, the belt is built to keep the chilies moving at a constant velocity.

- CSI Camera: To get clear shots of the chilies, a high-resolution camera is mounted above the conveyor belt.

- Processing System: A Raspberry Pi 4B computer with YOLO V5 installed serves as the processing system. It receives the captured images of chilies and analyzes them for detection and categorization.

- Chili Classification Model: Images of various bird eye chilies are used to train a classification model. The chilies are detected and categorized in real-time using YOLO V5.

A camera is mounted on a tripod above the sorting apparatus, since camera shake causes a loss of precision. Red and green chilies are separated using the camera and a Raspberry Pi. The Raspberry Pi is the system’s central processing unit and data storage device; its 4 GB of SDRAM supports smoother multitasking and better performance in resource-intensive applications. The CSI camera monitors the chilies on the conveyor belt for any signs of defects. The Raspberry Pi 4B acts as the brain of the operation, delivering signals to the sorting motor, which sends the red chilies to the left, the green chilies to the right, and the defective chilies and other objects straight ahead. The same camera is used to count both the red and green chilies. The quality of the chilies is evaluated in real-time by comparing images to a database, based on size, shape, color, and deformations. Real-time images are classified as red or green based on the training images. Bounding boxes are used to infer the positions of the peppers, and the totals of red and green chilies are determined by counting the bounding boxes of each class.
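The routing rule described above can be written as a small lookup, shown here as a minimal sketch. The label strings and the function name are hypothetical; only the directions (red to the left, green to the right, everything else straight ahead) come from the description.

```python
def sorting_direction(label):
    """Map a detected class label to the direction in which the item is sent."""
    routes = {"red_chili": "left", "green_chili": "right"}
    # Defective chilies and any other objects are not in the table,
    # so they fall through to the default and continue straight ahead.
    return routes.get(label, "straight")
```

Using a default for unknown labels means that anything the detector does not recognize as a good red or green chili is kept out of both sorted piles.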

A CV camera examines the chilies on the moving conveyor belt, and a robotic manipulator then sorts them by color. Once the quality assessment of a detection is finished, the previously trained model distinguishes between red and green chilies. The worst chilies are pushed off the conveyor belt, while the better red and green chilies are sorted.

Fig. 3 shows the outcome of applying the bounding box detection algorithm to a collection of chili peppers; the detected peppers are surrounded by colored boxes that match their respective labels. The acquired real-time image is compared with the trained model, which was trained on a total of 1558 images. The output lists the total number of chilies in each image and the numbers of green and red chilies.
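The per-image totals can be obtained by tallying the detector's output by class, as in the following sketch. The detection layout (a list of dictionaries with `label` and `confidence` keys), the label strings, and the 0.5 confidence threshold are assumptions for illustration and do not describe the actual output format used in this work.

```python
from collections import Counter


def count_chilies(detections, min_confidence=0.5):
    """Count red and green detections, ignoring boxes below the confidence threshold."""
    counts = Counter(
        d["label"] for d in detections if d["confidence"] >= min_confidence
    )
    red = counts["red_chili"]      # Counter returns 0 for labels that never occur
    green = counts["green_chili"]
    return {"red": red, "green": green, "total": red + green}
```

Detections with other labels, or with a confidence below the assumed threshold, do not contribute to any of the three totals.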

Fig. 3. Detection output with bounding boxes, showing the total number of chilies present and the number of chilies of each color.


Table I summarizes the overall accuracy of the system. For each attempt, it lists the number of chilies identified out of the total present in the frame, together with separate accuracy percentages for red and green chilies. In the first attempt, the system identified all 11 chilies in the frame (6 red and 5 green), giving an accuracy of 100%. In the second attempt, 17 of the 18 chilies were identified: all 8 red chilies and 9 of the 10 green chilies. In the sixth attempt, the frame contained 18 chilies and only 16 were detected, with 11 of the 13 green chilies identified. Accuracy therefore tended to decrease as the number of chilies in the frame increased. Over the ten attempts, the average accuracy was 92.79% for red chilies and 93.46% for green chilies. The decrease in accuracy may be due to broken or physically damaged chilies; blurred images may also contribute.

Table I. Accuracy of bird eye chili detection framework using YOLO V5

| Attempt | Red/green chilies in frame | Total chilies identified | Red chilies identified | Green chilies identified | Red chili accuracy (%) | Green chili accuracy (%) |
|---|---|---|---|---|---|---|
| 1 | 6/5 | 11 | 6 | 5 | 100 | 100 |
| 2 | 8/10 | 17 | 8 | 9 | 100 | 90 |
| 3 | 4/3 | 7 | 4 | 3 | 100 | 100 |
| 4 | 9/15 | 19 | 7 | 11 | 77.7 | 73.33 |
| 5 | 11/6 | 16 | 10 | 6 | 90.9 | 100 |
| 6 | 5/13 | 16 | 5 | 11 | 100 | 84.61 |
| 7 | 7/7 | 13 | 7 | 7 | 100 | 100 |
| 8 | 9/15 | 21 | 8 | 13 | 80.8 | 86.66 |
| 9 | 3/5 | 8 | 3 | 5 | 100 | 100 |
| 10 | 14/6 | 16 | 11 | 6 | 78.5 | 100 |
| Average accuracy | | | | | 92.79 | 93.46 |
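The accuracy figures in Table I follow from a simple ratio: the number of chilies of one color that were identified, divided by the number of that color present in the frame. The sketch below shows the per-attempt calculation and the averaging step, using the percentages as they are reported in Table I. The function names are illustrative.

```python
def accuracy_percent(identified, present):
    """Share of the chilies present in a frame that were identified, in percent."""
    return 100.0 * identified / present


def mean(values):
    """Arithmetic mean of a non-empty list of numbers."""
    return sum(values) / len(values)


# Per-attempt accuracies as reported in Table I (attempts 1 to 10).
red_reported = [100, 100, 100, 77.7, 90.9, 100, 100, 80.8, 100, 78.5]
green_reported = [100, 90, 100, 73.33, 100, 84.61, 100, 86.66, 100, 100]

red_average = round(mean(red_reported), 2)      # 92.79
green_average = round(mean(green_reported), 2)  # 93.46
```

As a check on a single row, `accuracy_percent(11, 13)` for the green chilies of the sixth attempt gives about 84.62, which the table lists truncated as 84.61.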

The experimental setup operates as follows:

- The chilies are placed on the conveyor belt.

- The CSI camera captures images of the chilies as they move along the conveyor belt.

- The captured images are passed to the processing system, a Raspberry Pi 4B running YOLO V5.

- The YOLO V5 algorithm identifies the chilies and categorizes them by class.

- The classification data are recorded for analysis.

The developed CV-assisted, Raspberry Pi 4B-based system for plucking chilies according to their ripening stage is shown in Fig. 4. The system comprises a robotic manipulator, servo motors, and a Raspberry Pi 4B control unit. The camera provides the visual input on which the system’s CV relies. This input is processed by the Raspberry Pi control unit, a low-cost, credit-card-sized computer with its own processor, memory, and graphics drivers, which allows it to process the data independently.

Fig. 4. The developed working model of the proposed system.


The Raspberry Pi unit is programmed to compare each captured image with the previously trained chili model. The system is trained with the YOLO V5 framework, which compares the acquired images with the trained data. The datasets are produced by labeling the reference images with the labeling software. This makes both self-identification and the detection of previously trained samples easier. When a chili is identified, a signal is delivered to the robotic manipulator, which is driven by three TowerPro MG995 servo motors. These metal-gear servos move the arm, base, and gripper of the manipulator to the desired angles.
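Hobby servos of this kind are usually positioned by the width of a repeating control pulse, so a target angle has to be converted into a pulse width before it is sent to the motor. The sketch below shows that conversion. The 500 to 2500 microsecond range, the 180 degree travel, and the 50 Hz frame rate are typical values assumed for illustration; they are not taken from this work and should be checked against the servo's datasheet.

```python
def angle_to_pulse_us(angle_deg, min_us=500.0, max_us=2500.0, max_angle=180.0):
    """Linearly map a servo angle in degrees to a control pulse width in microseconds."""
    if not 0.0 <= angle_deg <= max_angle:
        raise ValueError("angle outside the servo's assumed travel")
    return min_us + (max_us - min_us) * angle_deg / max_angle


def duty_cycle_percent(pulse_us, frequency_hz=50.0):
    """Express a pulse width as a PWM duty cycle at the given frame rate."""
    period_us = 1_000_000.0 / frequency_hz  # 20,000 microseconds at the assumed 50 Hz
    return 100.0 * pulse_us / period_us
```

Under these assumptions the mid position of 90 degrees corresponds to a 1500 microsecond pulse, or a 7.5% duty cycle.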

The robotic manipulator is moved to perform the sorting operations with the green chilies being sorted to the right and the red chilies being sorted to the left of the conveyor belt based on the information about the coordinates collected from the image. To move the manipulator to exact positions, a mathematical approach called inverse kinematics is used, which determines the angle for the manipulator’s movement.

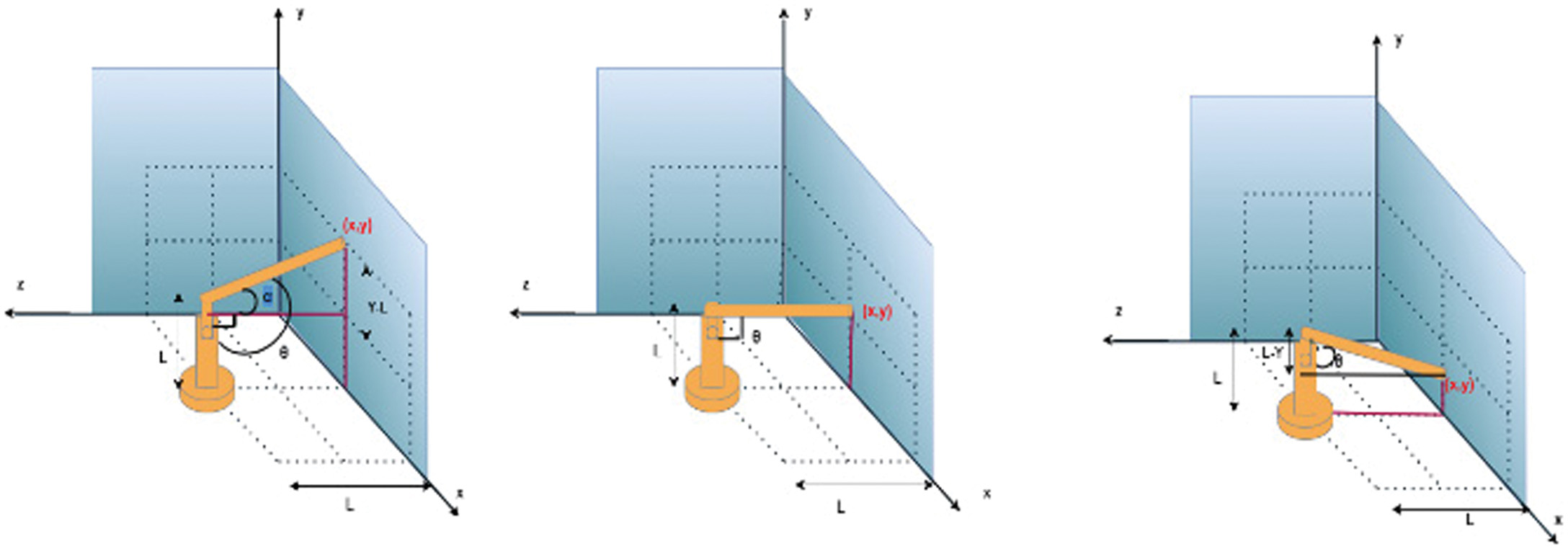

Figure 5 shows three different cases for finding the angle of the elbow, or middle part, with the coordinate

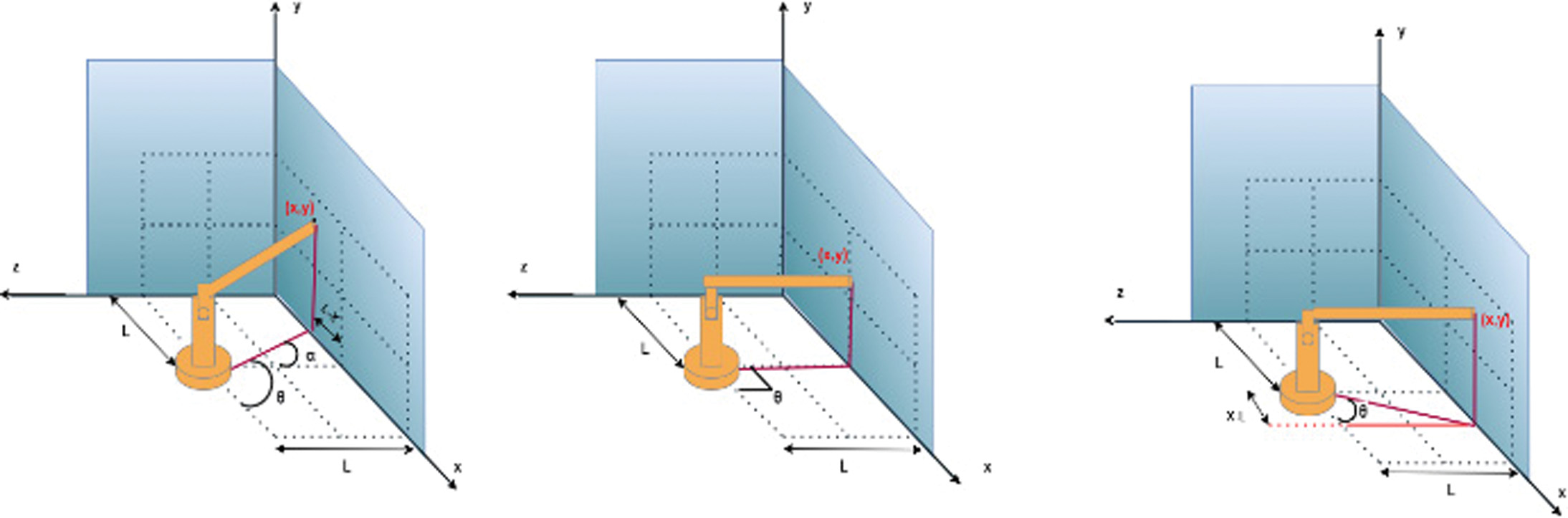

Figure 6 likewise gives three different cases for finding the angle of the base with the coordinate in the plane. Equations 5–8 are used to identify the precise angle for the movement of the robotic manipulator. They are programmed so that the image coordinates are used in real-time to determine the angles.

Fig. 5. Inverse kinematics of elbow part with the coordinate.

Fig. 6. Inverse kinematics of base part with coordinate.
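As a general illustration of how joint angles can be recovered from a target coordinate, the following sketch uses the textbook solution for a rotating base carrying a two-link planar arm. It is not a reproduction of Equations 5–8, which are not shown here; the function names, the link lengths, and the target point are hypothetical.

```python
import math


def base_angle(x, y):
    """Rotation of the base (radians) needed to face the point (x, y) in the plane."""
    return math.atan2(y, x)


def two_link_angles(r, z, l1, l2):
    """Shoulder and elbow angles (radians) that place a two-link arm's tip at (r, z)."""
    d_sq = r * r + z * z
    # Law of cosines gives the elbow angle from the distance to the target.
    cos_elbow = (d_sq - l1 * l1 - l2 * l2) / (2.0 * l1 * l2)
    if not -1.0 <= cos_elbow <= 1.0:
        raise ValueError("target is out of reach")
    elbow = math.acos(cos_elbow)  # one of the two mirror-image solutions
    shoulder = math.atan2(z, r) - math.atan2(
        l2 * math.sin(elbow), l1 + l2 * math.cos(elbow)
    )
    return shoulder, elbow


def forward(shoulder, elbow, l1, l2):
    """Forward kinematics of the same arm, used to check the inverse solution."""
    r = l1 * math.cos(shoulder) + l2 * math.cos(shoulder + elbow)
    z = l1 * math.sin(shoulder) + l2 * math.sin(shoulder + elbow)
    return r, z
```

Feeding the computed angles back through `forward` should return the original target, which is a convenient way to validate any inverse kinematics routine before it drives real hardware.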


Along with the sorting operation, the proposed system performs a quality check in a similar manner, by comparing the real-time footage with the pre-trained datasets. The YOLO V5 framework enables fast and highly accurate trained models. Further, YOLO V5 is a single-shot detector that uses a CNN. This facilitates better sorting of chilies based on ripening stage and quality. The Raspberry Pi control unit and the manipulator together perform the sorting task in a fully automated manner. The system is powered by a battery that can be recharged from time to time.

V. CONCLUSION

This paper discussed the process of developing a 3-DoF robotic manipulator guided by CV for use in the agricultural sector. It employed CV technology based on the YOLO V5 architecture to recognize red and green bird eye chilies in real-time. The identification and classification procedures in the proposed system are all managed by a Raspberry Pi 4B computer. The YOLO V5 framework compared the detected data in real-time with the trained database. The robotic manipulator relied on a color comparison method to distinguish between red and green chilies before sorting them. The manipulator’s servo motors carry out the sorting, while the Raspberry Pi, which also serves as the system’s control unit, uses color recognition to determine how to divide the chilies. The system used the same chili detection technology both to sort red and green chilies to predetermined locations and to assess their quality. If put into practice, this technique has the potential to save farmers many hours of time-consuming manual work. Even without the Raspberry Pi design, this technology might help farmers track the number of chilies they produce. The YOLO V5 framework allows the system to be developed further into a fully automated robotic manipulator capable of detecting chilies with CV technology and distinguishing between red and green chilies. The proposed technology has much room to grow into a robotic farm hand that can pick chilies with precision.