I.INTRODUCTION

Over the last 50 years, research in computer science has tried to reproduce the essential human capacity for recognition. This capacity has specialized over the course of human evolution according to the kinds of objects and situations to be recognized: gestures, language, written symbols, physical objects, the friendly or hostile intent of peers, and so on. These are, in general, problems of pattern recognition (PR). Biometrics occupies a peculiar place in PR: it is the recognition of human subjects by their physical (appearance) and/or behavioral (action) traits, which is one of the most appealing yet practical tasks in many systems. Reliable recognition must both specialize and generalize, while keeping a separation margin that distinguishes classes and identities. In biometrics, the constant challenge is to cope with the continuous changes in human traits caused by internal factors (e.g., aging or expression) or external factors (e.g., illumination or capture resolution). For the iris, the latter play the major role, because the iris itself is stable over time and highly distinctive. Now that the web has become the basis of modern financial and business systems, accurate authentication for access to our data in online banking is important. In recent years, advances in technology, particularly in biometrics, have made data servers around the world more secure [1]. Robust recognition deals with distilling the wide range of appearances displayed by the objects around us in order to summarize their essential discriminative and characterizing features, which allows recognition results to generalize. Because the appearance of the human iris is unique to each person and stable over time, its distinctive traits make it a reliable basis for identification systems [2].
Some of the basic factors that play an important role in the development of iris biometrics are given in [3]: the distinctive pattern of the iris, the stability of the iris structure over a person's lifetime, and easy-to-use image acquisition devices with broad, improved capabilities. In this context, an interesting possibility is to reproduce not only the human capacity for recognition but also the capacity for learning, particularly as it relates to recognition itself. Iris recognition is one of the most prominent fields in biometrics. However, few studies address it with machine learning strategies trained on example inputs and their correct outputs, as provided by a human supervisor. There is a need for intelligent technology that makes use of secure IPINs, OTPs, secure signs/images, and similar mechanisms, which could serve most security purposes in the financial sector [4]. Machine learning technology and methods have matured: artificial intelligence (AI) techniques such as decision trees, self-organizing networks, support vector machines (SVMs), and many others have been developed. The feature used in this work is the human iris [5]. It is visible yet protected, and its image can be acquired without contact. Well-known applications of these technologies include border security, airport security, mobile phones, citizen registration, criminal investigation, health, and the return of Afghan refugees [6,7]. Several techniques have been developed for iris recognition systems. Because the essential criteria for comparing iris recognition systems (IRSs) are accuracy rate and computation time, this study focuses on improving iris recognition performance by applying accurate feature extraction methods and a genetic algorithm.

The remainder of this paper is organized as follows. Section II surveys previous work related to this study. Section III reviews the dataset and the development environment. Section IV explains the proposed technique and its technical aspects in detail. Section V presents the experimental results and their evaluation. Finally, Section VI concludes the paper and outlines future work.

II.RELATED WORK

This section briefly reviews earlier research on the wavelet transform and the genetic algorithm, two techniques used in IRSs.

Tan et al. [8] created a novel iris detection approach, based on a code-level framework, to handle a variety of iris images. The link between nonlinear and homogeneous binary iris feature codes was captured using an artificial neural network (ANN). Compared with other current techniques, their approach achieved the highest accuracy rate. The authors of [9] proposed a method that can recognize an eye in various head poses. Their study relied on detection followed by verification, and used multiresolution iris shape features and the integral image in the first and second stages.

Thepade [10] presented a feature extraction approach that used block truncation coding to reduce the size of the feature vector and considered various color spaces to improve the genuine acceptance ratio. In [11], a novel method was proposed to find effective boundaries for the circles in the normalization step; it relied on an active contour approach applied to elliptic shapes. Lin et al. [12] put forward a nonseparable wavelet method to address rotation problems when extracting iris features. Sheoran et al. [13] introduced a feature extraction method for iris recognition based on the two-dimensional Gabor filter. They achieved a higher accuracy rate with a combination of XOR-SUM operators than with other feature extraction methods. Ahmadi [14] introduced a robust iris recognition technique. In the first step of the method in [15], a wavelet filter was applied to the data, and the second step determined the boundaries.

Many iris recognition algorithms are based on Daugman's [16] work, which relies on Gabor wavelets. Boles' [17] algorithm is based on zero crossings of the wavelet transform, and Lim's [18] algorithm is based on the 2D Haar wavelet transform. The standard discrete wavelet transform (DWT) is a powerful tool that has been used successfully to solve various problems in signal and image processing. The DWT divides an image into four subsampled images. Ajay Kumar [19] used the Haar wavelet to decompose the image five times, producing a feature vector from only the HH components (see also Daouk [20]). This method uses Haar wavelets to decompose the enhanced image into 5 levels [21].
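The four-way split performed by the DWT, and the repeated Haar decomposition that keeps only HH coefficients, can be sketched as follows. This is a minimal NumPy illustration, not the code used in [19]-[21]: the function names, the cropping of odd-sized sub-bands, and the choice to keep only the final-level HH band are assumptions made here, and the LH/HL naming convention varies between texts.

```python
import numpy as np

def haar_dwt2(image):
    """One level of the 2-D Haar DWT; returns the (LL, LH, HL, HH) sub-bands."""
    x = np.asarray(image, dtype=float)
    # Crop to even dimensions so rows and columns pair up (an assumption).
    x = x[: x.shape[0] // 2 * 2, : x.shape[1] // 2 * 2]
    # Low-pass (sum) and high-pass (difference) over pairs of adjacent columns.
    lo = (x[:, 0::2] + x[:, 1::2]) / np.sqrt(2)
    hi = (x[:, 0::2] - x[:, 1::2]) / np.sqrt(2)
    # Repeat over pairs of adjacent rows to obtain four subsampled images.
    ll = (lo[0::2, :] + lo[1::2, :]) / np.sqrt(2)
    lh = (lo[0::2, :] - lo[1::2, :]) / np.sqrt(2)
    hl = (hi[0::2, :] + hi[1::2, :]) / np.sqrt(2)
    hh = (hi[0::2, :] - hi[1::2, :]) / np.sqrt(2)
    return ll, lh, hl, hh

def haar_hh_features(image, levels=5):
    """Decompose the LL band `levels` times; return the last HH band, flattened."""
    ll = np.asarray(image, dtype=float)
    hh = None
    for _level in range(levels):
        ll, _lh, _hl, hh = haar_dwt2(ll)
    return hh.ravel()
```

Each level halves both image dimensions, so five levels reduce the image to a small HH band that can serve as a compact feature vector.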

III.MATERIALS AND METHODS

With regard to IRS applications, earlier IRSs have been highly effective, while authentication systems that combine suitable feature optimization and selection have often been identified as an open research area that is still being explored. According to Akbarizadeh [14], an IRS that combines a wavelet transform feature extractor with a multilayer perceptron neural network and genetic algorithm classifier is regarded as an optimization solution whose accuracy rate is acceptable but can still be improved.

A.ORIGIN OF CASIA DATABASE

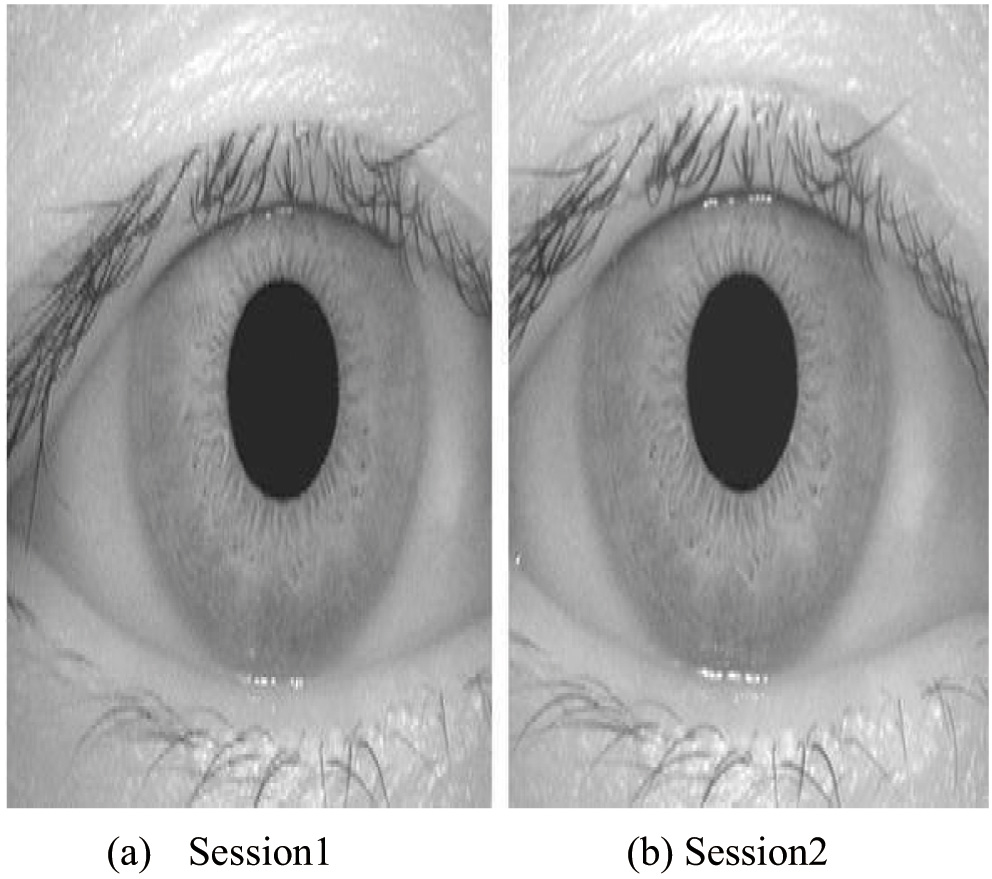

Iris recognition has been an active research topic in recent years because of its high accuracy. Whereas many face and fingerprint datasets exist, relatively few public iris datasets are available, and this lack of iris data may hamper iris recognition research [22]. To promote research, the National Laboratory of Pattern Recognition makes its iris database freely available to iris recognition researchers. The images of CASIA V1.0 (CASIA-IrisV1) were captured with an in-house iris camera. The CASIA Iris Image Database Version 1.0 (CASIA-IrisV1) contains 756 iris images from 108 eyes. For each eye, 7 images were captured in two sessions with the self-developed CASIA close-up iris camera shown in Fig. 1: three samples were collected in the first session (Fig. 2(a)) and four in the second session (Fig. 2(b)). All images are stored as BMP files with a resolution of 320 × 280. The pupil regions of all iris images in CASIA-IrisV1 were automatically detected and replaced in order to remove the specular reflections from the NIR illuminators (see Fig. 1). Although this modification clearly simplifies iris boundary detection, it has little or no effect on the other components of an iris recognition system, such as feature extraction and classifier design. To compute intra-class variability, it is recommended to compare two samples of the same eye taken in different sessions. For example, the iris images from the first session can be used as the training set and those from the second session as the test set.
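The session-based split recommended above can be sketched in a few lines of Python. The file-name pattern `EEE_S_N.bmp` (eye identifier, session, sample index) and the function name `split_by_session` are assumptions made for illustration; adapt the parsing to however the images are actually stored.

```python
from collections import defaultdict

def split_by_session(filenames):
    """Group images per eye: session 1 for training, session 2 for testing.

    Assumes hypothetical names of the form 'EEE_S_N.bmp'
    (eye id, session number, sample index).
    """
    train, test = defaultdict(list), defaultdict(list)
    for name in filenames:
        stem = name.rsplit(".", 1)[0]
        eye_id, session, _sample = stem.split("_")
        target = train if session == "1" else test
        target[eye_id].append(name)
    return dict(train), dict(test)

# Example for one eye: three first-session and four second-session images.
names = [f"001_1_{i}.bmp" for i in (1, 2, 3)]
names += [f"001_2_{i}.bmp" for i in (1, 2, 3, 4)]
train, test = split_by_session(names)
```

Keeping the two sessions apart means every test comparison crosses sessions, which is what the intra-class variability measurement requires.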

Fig. 1. The self-developed iris camera used for collection of CASIA-IrisV1.


B.MATLAB ENVIRONMENT

MATLAB is a high-level technical computing language and interactive environment for algorithm development, data visualization, data analysis, and numerical computation. It integrates computation, visualization, and programming. These features make MATLAB an excellent tool for teaching and research. Compared with conventional languages such as C or Fortran, MATLAB has many advantages for solving technical problems. It is an interactive system whose basic data element is an array that does not require dimensioning. This allows many technical computing problems, especially those with matrix and vector formulations, to be solved in a fraction of the time it would take to write a program in a scalar, noninteractive language such as C or Fortran. The software package has been commercially available since 1984 and is now regarded as a standard tool in industry worldwide. It has powerful built-in routines that enable a very wide variety of computations. It also has easy-to-use graphics commands that make the visualization of results immediately available. Specific applications are collected in packages referred to as toolboxes. There are toolboxes for signal processing, symbolic computation, control theory, optimization, and several other fields of applied science and engineering. MATLAB also offers a number of benefits for documenting and sharing work, and MATLAB code can be integrated with other languages and applications. Its key features include:

- High-level language for technical computing

- Development environment for managing code, files, and data

- Interactive tools for iterative exploration, design, and problem solving

- Mathematical functions for linear algebra, statistics, Fourier analysis, filtering, optimization, and numerical integration

- 2-D and 3-D graphics functions for visualizing data

- Tools for building custom graphical user interfaces

MATLAB is also a programming language, and like other programming languages it provides decision-making structures for controlling command execution. A script file is an external file that contains a sequence of MATLAB statements. Script files have the filename extension .m and are often called M-files. M-files can be scripts that simply execute a series of MATLAB statements, or they can be functions that accept arguments and produce one or more outputs.

C.MACHINE LEARNING APPROACHES TO IRIS RECOGNITION

One of the major factors hindering the adoption of machine learning approaches in biometrics is the huge number of classes (individuals) that must be discriminated. Each individual requires ad hoc training with positive and negative samples, yet the former are usually not available in sufficient number. In addition, a stable set of features over time is expected. As a result, classic machine learning approaches appear to have had limited trials in large real-world deployments. Nevertheless, the potential of such techniques has attracted researchers since the first studies of ANNs. As for the iris, its intricate, high-resolution visual structure means that even a human would find it difficult to measure.

Two main classes of techniques can be distinguished: those based on ANNs and those based on SVMs or, more generally, kernel machines.

- ANNs consist of layers of neurons, each of which computes an activation function of its inputs, weighted by the weights associated with the connections between neurons. A binary classification problem requires a single output neuron; for multiple classes, additional outputs are used, up to the number of classes. The ANN architecture defines a family of decision functions, one of which is selected during network training. This family is characterized by the complexity of the neural network: the number of hidden layers, the number of neurons in those layers, and the topology of the network. The decision function is determined by choosing appropriate weights for the network connections. Optimal weights usually minimize an error function for the given network architecture.

- SVM classifiers are essentially built in two steps. First, the input vectors are mapped into a new feature space whose dimension is substantially larger than that of the original space. Second, the algorithm finds the hyperplane in this new space that (linearly) separates the classes of data with the largest margin. Classification accuracy usually requires a sufficiently high-dimensional target space. If no separating hyperplane can be found, a trade-off must be accepted between the size of the separating margin and the penalties for each vector that lies inside the margin. The explicit mapping to the new space can be avoided if the scalar product in this high-dimensional space can be computed for every pair of vectors.
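The last point in the SVM description, that the explicit mapping can be avoided when the scalar product in the high-dimensional space is computable directly, is commonly called the kernel trick. The sketch below checks it for one simple case, a homogeneous polynomial kernel of degree 2 on 2-D vectors. The feature map `phi` and the kernel `poly_kernel` are illustrative choices made here, not the kernel used in this study.

```python
import numpy as np

def phi(v):
    """Explicit degree-2 feature map taking a 2-D vector into a 3-D space."""
    x1, x2 = v
    return np.array([x1 * x1, np.sqrt(2) * x1 * x2, x2 * x2])

def poly_kernel(u, v):
    """Homogeneous polynomial kernel of degree 2: k(u, v) = (u . v)^2."""
    return float(np.dot(u, v)) ** 2

u = np.array([1.0, 2.0])
v = np.array([3.0, -1.0])

explicit = float(np.dot(phi(u), phi(v)))  # scalar product after explicit mapping
implicit = poly_kernel(u, v)              # same quantity without any mapping
```

Both values agree, so a classifier that only needs scalar products between vectors can work in the larger space while evaluating `poly_kernel` in the original one.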

IV.PROPOSED METHODOLOGY AND TECHNIQUES

In this paper, we propose an iris recognition method based on feature selection and feature optimization techniques. The current research trend in biometric security uses feature optimization to improve iris recognition. The iris offers three kinds of features: color, texture, and the shape and size of the iris. The most important of these are color and texture. For texture extraction, we use a wavelet transform function, which is considered the most promising texture analysis feature so far. For feature selection and optimization, we propose a genetic algorithm. The genetic algorithm is a population-based search technique, and it defines two constraint functions for the selection and optimization process.

A.WAVELET TRANSFORMATION FUNCTION (FEATURE EXTRACTION)

The feature extraction phase of the iris recognition system is crucial [22]. Color, texture, and size are the three main categories of features in an iris image. Feature extraction is the process of mapping an image from image space to feature space. Finding good features that accurately describe an image remains a challenging task [23]. This section covers the features used for the iris images from the database. Features are essentially a representation of the visual content, which can be further categorized as either general or domain specific. Wavelet filters were employed in this extraction procedure. Gabor functions, used as 2-D texture analyzers, are strongly correlated with the texture information in an image [20]. Gabor functions are Gaussians modulated by complex sinusoids.

For a bank of Gabor wavelets, the orientation of the $n$-th filter is $\theta = n\pi/K$, where $K$ is the number of orientations. $U_l$ and $U_h$ are the lower and upper center frequencies of interest across the multiresolution scales, and the scale factor is $a = (U_h/U_l)^{1/(S-1)}$, where $S$ is the number of scales. A compact representation is needed for learning and classification purposes, so the Gabor wavelet transform of an image $I(x, y)$ is computed with each filter in the bank.
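For reference, the standard Gabor wavelet formulation from the texture-analysis literature uses the same symbols as above ($\theta$, $K$, $U_l$, $U_h$, $a$, $S$) and can be written as follows. It is given here as the commonly used form, on the assumption that the authors follow it; $\sigma_x$ and $\sigma_y$ (Gaussian widths), $W$ (modulation frequency), and the scale index $m$ are symbols introduced for this sketch.

$$
g(x, y) = \frac{1}{2\pi\sigma_x\sigma_y}
\exp\left[-\frac{1}{2}\left(\frac{x^2}{\sigma_x^2} + \frac{y^2}{\sigma_y^2}\right) + 2\pi j W x\right]
$$

$$
g_{mn}(x, y) = a^{-m}\, g(x', y'), \qquad
x' = a^{-m}(x\cos\theta + y\sin\theta), \qquad
y' = a^{-m}(-x\sin\theta + y\cos\theta)
$$

$$
W_{mn}(x, y) = \int I(x_1, y_1)\, g_{mn}^{*}(x - x_1,\, y - y_1)\, dx_1\, dy_1
$$

Here $m = 0, \dots, S-1$ indexes the scale, $n = 0, \dots, K-1$ indexes the orientation with $\theta = n\pi/K$, and $^{*}$ denotes the complex conjugate.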

As a result, we obtain a 30-dimensional numerical vector covering 6 orientations and 5 scales. Note that the texture feature is computed only on rectangular grids, because it is difficult to compute the texture vector for a single arbitrary location. The extracted texture features form a feature matrix for the optimization process.
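A bank of 5 scales and 6 orientations that yields one value per filter, and therefore a 30-dimensional vector, can be sketched in NumPy as below. The text does not say which statistic is taken from each filter response, so the mean magnitude used here is an assumption, as are the kernel size, the isotropic envelope width `sigma`, the frequency `u_h`, the scale factor `a`, and the function names.

```python
import numpy as np

def gabor_kernel(size, m, theta, u_h=0.4, a=1.5, sigma=2.0):
    """Complex Gabor kernel at scale index m and orientation theta."""
    half = size // 2
    y, x = np.mgrid[-half:half + 1, -half:half + 1].astype(float)
    s = a ** (-m)  # dilation factor a^{-m}
    # Rotated and dilated coordinates.
    xr = s * (x * np.cos(theta) + y * np.sin(theta))
    yr = s * (-x * np.sin(theta) + y * np.cos(theta))
    envelope = np.exp(-0.5 * (xr ** 2 + yr ** 2) / sigma ** 2)  # Gaussian
    carrier = np.exp(2j * np.pi * u_h * xr)                     # complex sinusoid
    return s * envelope * carrier

def gabor_features(image, n_scales=5, n_orient=6, size=31):
    """Mean response magnitude per filter: n_scales * n_orient features."""
    img = np.asarray(image, dtype=float)
    spectrum = np.fft.fft2(img)
    feats = []
    for m in range(n_scales):
        for n in range(n_orient):
            kernel = gabor_kernel(size, m, n * np.pi / n_orient)
            # Circular convolution via FFT; the kernel is zero-padded to the
            # image size, so the image must be at least size x size.
            response = np.fft.ifft2(spectrum * np.fft.fft2(kernel, s=img.shape))
            feats.append(np.abs(response).mean())
    return np.array(feats)
```

Applying `gabor_features` to each cell of a rectangular grid and stacking the results row by row gives a feature matrix of the kind passed on to the optimization stage.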

B.FEATURE SELECTION

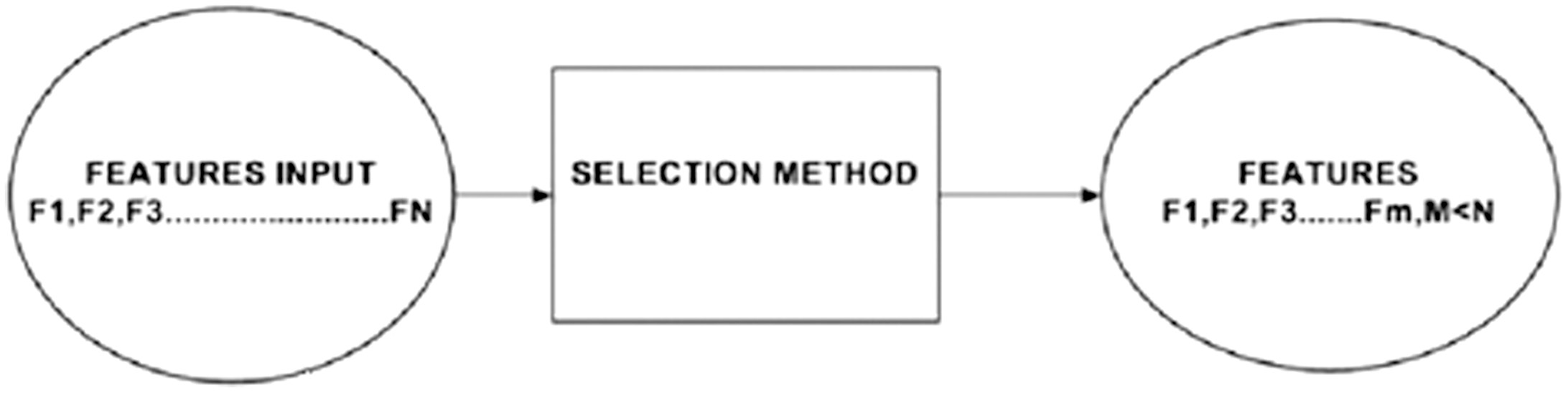

The features in the feature selection technique are either selected manually from the observed data or selected with a dedicated feature selection tool. In manual selection, the most appropriate features are chosen from the feature spectrum on the basis of prior knowledge of the environment in which the system operates. For training the biometric network model, features that can distinguish one type of camera image from another are chosen. The purpose of feature selection tools is to reduce the number of features to a manageable subset of features that do not correlate with one another. Bayesian networks (BN) and classification and regression trees (CART) are two examples of feature selection techniques. A Bayesian network is a probabilistic graphical model that represents the probabilistic relationships between features. CART is a method that uses tree-building algorithms to create tree-like if-then prediction patterns that classify the dataset into groups. Figure 3 shows the process of feature selection. The features (F0…FN) on the left are those that can be accessed from the data being monitored, such as network traffic. The output of the selection tool (F0…FM) is on the right. The number of features in the output depends on the selection tool used and on the inter-correlation of the input features.

Fig. 3. Feature selection process in feature variable.
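The goal of reducing (F0…FN) to a smaller set (F0…FM) of mutually uncorrelated features can be illustrated with a simple greedy correlation filter. This is a stand-in chosen for brevity, not a Bayesian network or CART and not the selection tool used in this study; the function name and the 0.9 threshold are assumptions.

```python
import numpy as np

def select_uncorrelated(features, threshold=0.9):
    """Greedy filter over the columns of `features` (samples x features).

    A column is kept only if its absolute Pearson correlation with every
    previously kept column is at most `threshold`.
    """
    corr = np.abs(np.corrcoef(features, rowvar=False))
    kept = []
    for j in range(features.shape[1]):
        if all(corr[j, k] <= threshold for k in kept):
            kept.append(j)
    return kept

# Example: the second column is a linear copy of the first and is dropped.
rng = np.random.default_rng(0)
f0 = rng.normal(size=200)
f2 = rng.normal(size=200)
data = np.column_stack([f0, 2.0 * f0 + 1.0, f2])
kept = select_uncorrelated(data)
```

As the text notes, the number of surviving features depends on how strongly the inputs are inter-correlated and, in this sketch, on the chosen threshold.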

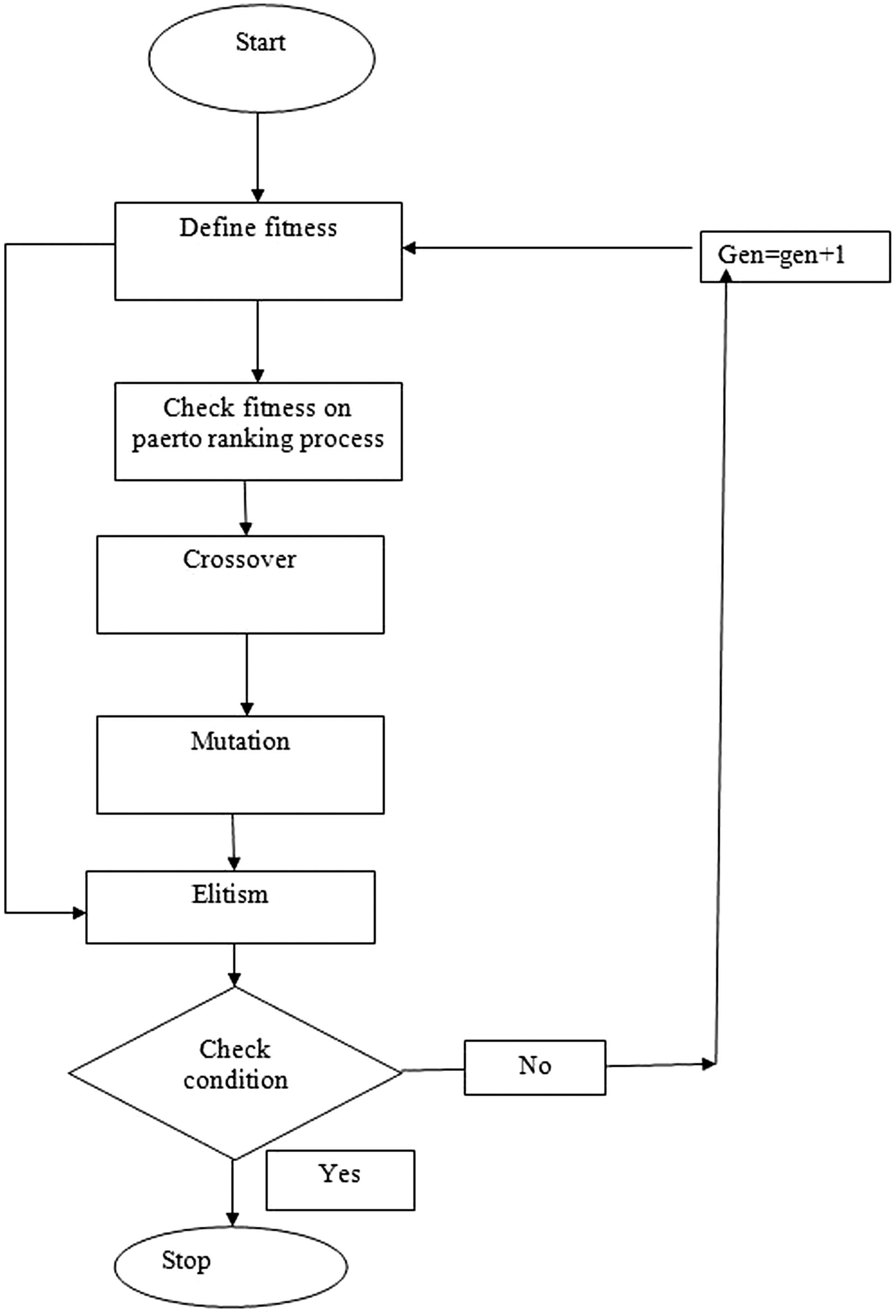

C.GENETIC ALGORITHM

GA was designed as a general-purpose search method for optimization problems. Its strengths include finding better solutions, completing every task, and minimizing the optimization objectives without requiring any mathematical prerequisites. A further advantage of GA for specific problems is that it performs a global search and incorporates domain-independent heuristics [24]. The initial GA population is a random collection of chromosomes that encode different configurations. Genes are the variables of the problem, including its constraints and environment, and they are carried on a chromosome. To evaluate the chromosomes, the values of the fitness function to be optimized are used. Offspring (new chromosomes) are then created by changing the combination of genes through the two well-known operators, crossover and mutation. To form the population across generations, an artificial version of natural selection is applied as the selection operator, and the chromosomes with the highest fitness values have a chance of being selected for the next generation.

Darwin’s idea of evolution serves as a major inspiration for genetic algorithms. Genetic algorithms evolve a solution to a problem. The population of the algorithm is the initial collection of solutions (represented by chromosomes or also known as strings). One population’s solutions are used to create a new population. The belief that the new population would be superior to the previous one drives this.

Feature optimization with a multiobjective function uses several operations, including population initialization, fitness evaluation, mutation, and crossover, to carry out the selection process. The steps are broken down into the four phases listed below.

1. Fitness Function with Pareto Ranking Approach

The Pareto ranking approach is used to calculate the fitness function: each individual is evaluated against the whole population on the basis of nondomination, which completes the Pareto ranking process.

2. Crossover

Crossover is a genetic operator used to produce new chromosomes (offspring). Single-point crossover is used in this study, and it is applied according to a computed crossover probability.

3. Mutation

Mutation is another genetic operator. It exploits the possibility of altering the current solutions. Both the chromosome to be mutated and the position of the gene to be altered are chosen at random.

4. Elitism

Random selection does not guarantee that the nondominated solutions survive into the next generation. In a multiobjective genetic algorithm, the practical step toward elitism is to copy the nondominated solutions of population $P_t$ so that they are also included in population $P_{t+1}$.
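The four phases above can be sketched together in plain Python. This is a minimal illustration under stated assumptions, not the authors' MATLAB implementation: chromosomes are taken to be binary lists (for example, masks over the 30 texture features), all objectives are minimized, the rank of an individual is one plus the number of individuals that dominate it, and the probabilities `p_cross` and `p_mut` are placeholder values.

```python
import random

def dominates(a, b):
    """True if a is no worse than b in every objective and better in one."""
    return (all(x <= y for x, y in zip(a, b))
            and any(x < y for x, y in zip(a, b)))

def pareto_rank(objectives):
    """Rank 1 marks nondominated individuals; higher ranks are worse."""
    return [1 + sum(dominates(other, me) for other in objectives)
            for me in objectives]

def single_point_crossover(p1, p2, rng):
    """Swap the tails of two parents at one random cut point."""
    point = rng.randrange(1, len(p1))
    return p1[:point] + p2[point:], p2[:point] + p1[point:]

def mutate(chrom, rng, p_mut=0.1):
    """With probability p_mut, flip one gene at a random position."""
    child = chrom[:]
    if rng.random() < p_mut:
        pos = rng.randrange(len(child))
        child[pos] = 1 - child[pos]
    return child

def next_generation(pop, objective_fn, rng, p_cross=0.8):
    """Build P_{t+1} from P_t with elitism, crossover, and mutation."""
    ranks = pareto_rank([objective_fn(c) for c in pop])
    # Elitism: copy the nondominated individuals of P_t into P_{t+1}.
    new_pop = [c[:] for c, r in zip(pop, ranks) if r == 1]
    # Selection pressure: better (lower) ranks are picked more often.
    weights = [1.0 / r for r in ranks]
    while len(new_pop) < len(pop):
        p1, p2 = rng.choices(pop, weights=weights, k=2)
        if rng.random() < p_cross:
            c1, c2 = single_point_crossover(p1, p2, rng)
        else:
            c1, c2 = p1[:], p2[:]
        new_pop.extend([mutate(c1, rng), mutate(c2, rng)])
    return new_pop[: len(pop)]
```

In a feature selection setting, `objective_fn` could return, for instance, the number of selected features together with a classification error, so that rank 1 identifies the best trade-offs between the two.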

V.RESULTS AND DISCUSSION

A.OPEN DISCUSSION

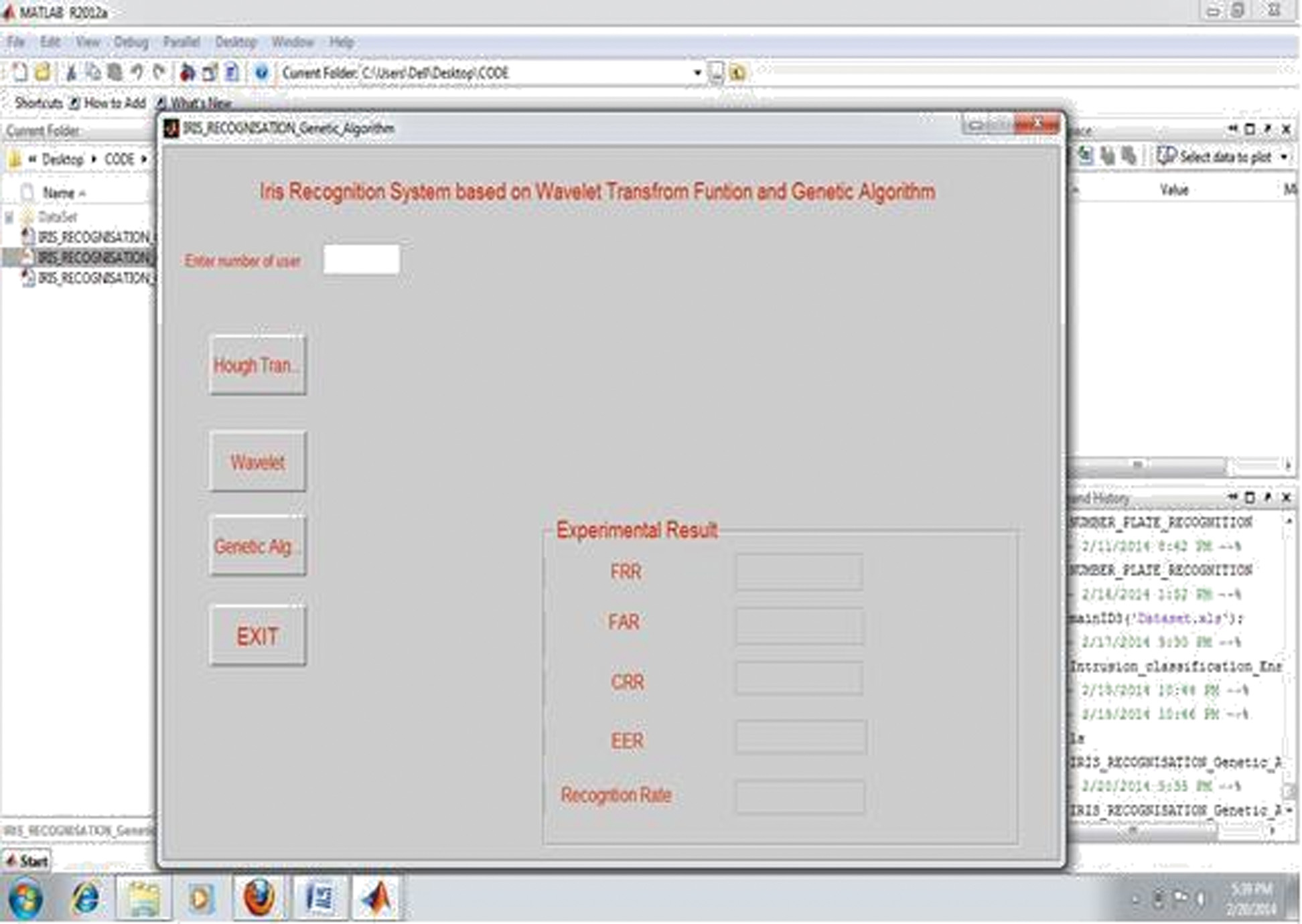

Although iris recognition is a relatively young field in biometrics and in pattern recognition in general, it is interesting to note that ML techniques have not been fully investigated and exploited for this problem. The proposed system was developed in MATLAB. The choice of the separation point (decision threshold) is critical. If a small separation point is chosen, the false acceptance rate (FAR) is lower and the false rejection rate (FRR) is higher. Increasing the separation point yields a higher FAR and a lower FRR, so a trade-off between FAR and FRR is required. The receiver operating characteristic (ROC) curve is used as a standard to assess the performance of the proposed system. The equal error rate (EER) is the point on the curve where FAR and FRR are equal; the lower the EER of a system, the better its recognition.
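The trade-off between FAR and FRR as the separation point moves, and the location of the EER, can be sketched as follows. The sketch assumes that comparisons are scored by a distance (smaller means more similar) and accepted when the distance does not exceed the threshold; the function names and the sample scores are illustrative, not results from this study.

```python
import numpy as np

def far_frr(genuine, impostor, threshold):
    """FAR and FRR when a comparison is accepted if distance <= threshold."""
    genuine = np.asarray(genuine, dtype=float)
    impostor = np.asarray(impostor, dtype=float)
    far = float(np.mean(impostor <= threshold))  # impostors wrongly accepted
    frr = float(np.mean(genuine > threshold))    # genuine users wrongly rejected
    return far, frr

def equal_error_rate(genuine, impostor, thresholds):
    """Threshold from the given grid where FAR and FRR are closest."""
    best_t, best_far, best_frr = None, None, None
    for t in thresholds:
        far, frr = far_frr(genuine, impostor, t)
        if best_t is None or abs(far - frr) < abs(best_far - best_frr):
            best_t, best_far, best_frr = t, far, frr
    return best_t, best_far, best_frr

# Illustrative distances only.
genuine_scores = [0.10, 0.20, 0.30, 0.45]
impostor_scores = [0.40, 0.50, 0.55, 0.60]
threshold, far, frr = equal_error_rate(
    genuine_scores, impostor_scores, [0.35, 0.42, 0.50])
```

Sweeping the threshold over a fine grid and plotting the resulting (FAR, FRR) pairs gives the ROC-style curve on which the EER is read.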

B.END RESULTS AND FRAMEWORK REPRESENTATION

We used the CASIA iris dataset, which contains different types of iris images, for the experiments, and obtained FAR and FRR results using the wavelet transform. We concluded that results based on wavelet transforms are superior to those obtained with other methods. The results can be evaluated with the following two parameters:

- FAR: the probability that a system incorrectly identifies someone or fails to reject an impostor. It is the likelihood that a biometric system will treat an invalid input as a match.

- FRR: the probability that a system fails to identify a registered user. It is the likelihood that a biometric system will mistakenly treat a valid input as a non-match and reject it.

After running the program, we first obtain the FAR and FRR values using the Hough transform and the wavelet transform, and then using the genetic algorithm. The genetic algorithm gives better results than the wavelet transformation.
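The output windows that follow compare genuine and impostor users by Hamming distance. A minimal sketch of a normalized Hamming distance between two binary iris codes is given below; the optional masks for unusable bits (for example, bits hidden by eyelids) and the function name are assumptions added for illustration and may differ from the implementation used here.

```python
import numpy as np

def hamming_distance(code_a, code_b, mask_a=None, mask_b=None):
    """Fraction of disagreeing bits among the bits valid in both codes."""
    a = np.asarray(code_a, dtype=bool)
    b = np.asarray(code_b, dtype=bool)
    valid = np.ones(a.shape, dtype=bool)
    if mask_a is not None:
        valid &= np.asarray(mask_a, dtype=bool)
    if mask_b is not None:
        valid &= np.asarray(mask_b, dtype=bool)
    n_valid = int(valid.sum())
    if n_valid == 0:
        raise ValueError("no valid bits to compare")
    return float(np.count_nonzero((a ^ b) & valid)) / n_valid

# Two of the four bits differ.
d = hamming_distance([1, 0, 1, 1], [1, 1, 0, 1])
```

Distances between codes of the same eye are expected to cluster near 0, while distances between different eyes cluster higher, which is what the genuine and impostor histograms display.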

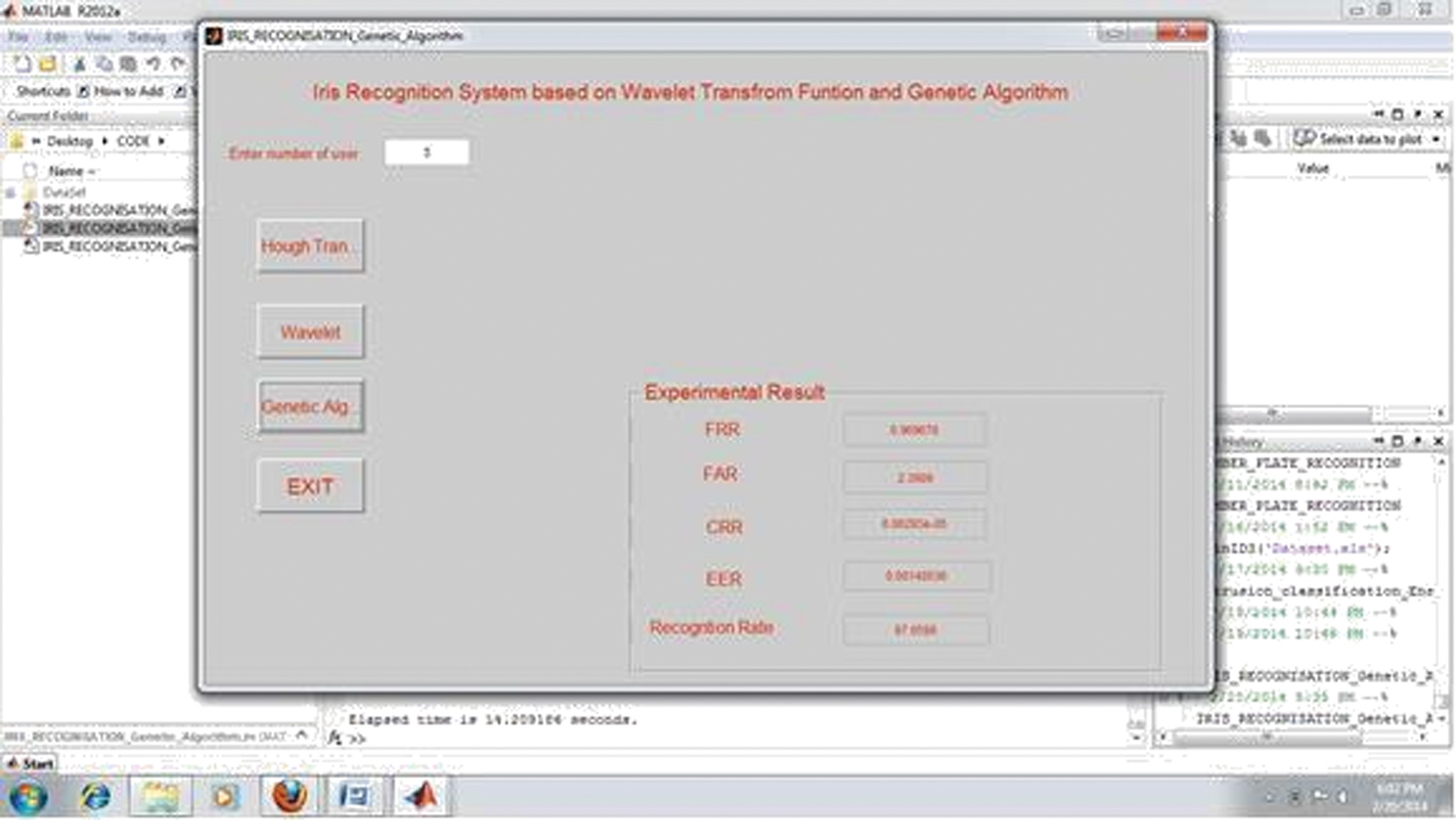

Output 1: Main window of our experimental process.


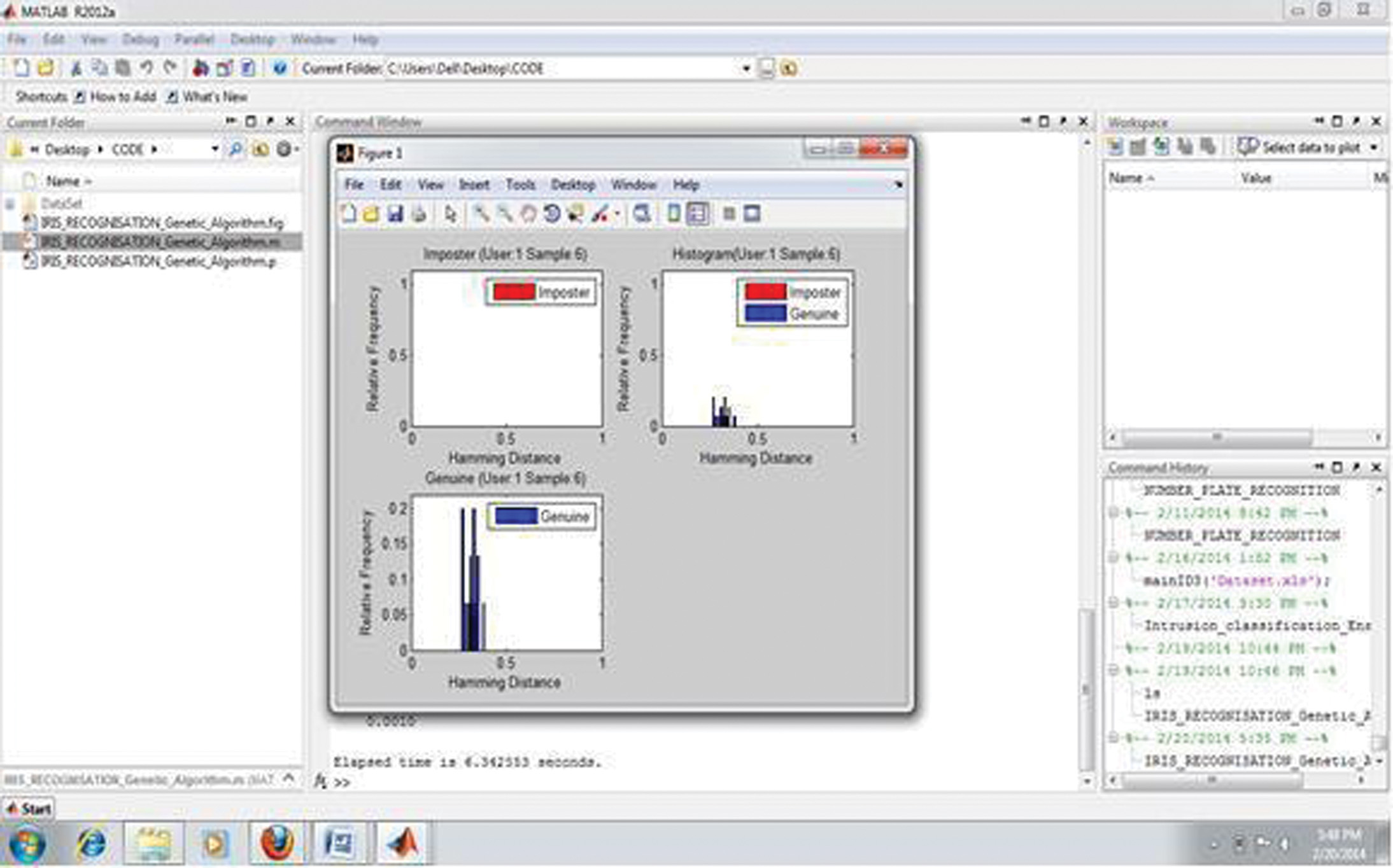

Output 2: Histogram of relative frequency versus Hamming distance comparing impostor users and genuine users, for the Hough transform with 1 user.


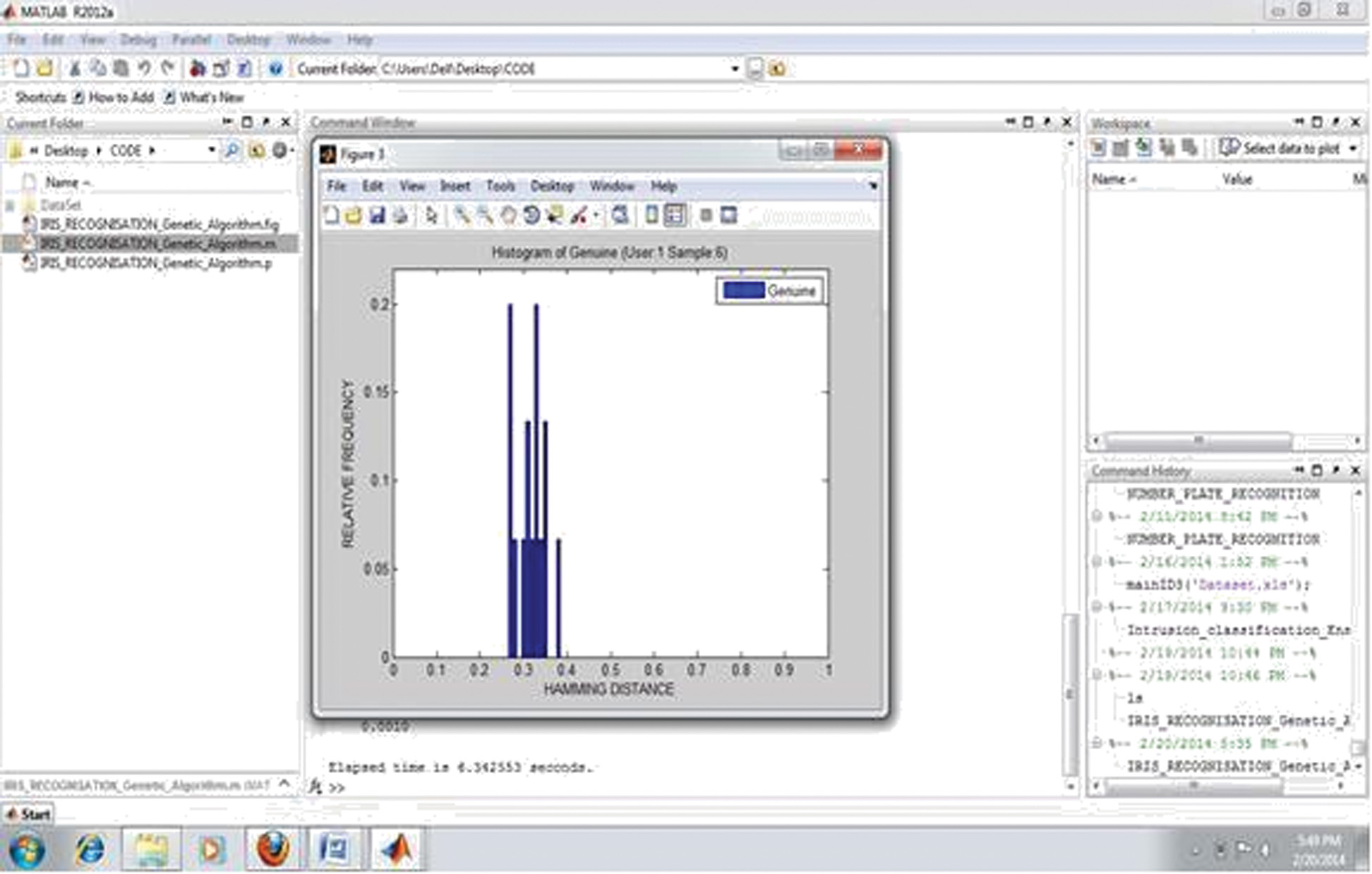

Output 3: Histogram of relative frequency versus Hamming distance comparing impostor users and genuine users, for the Hough transform with 1 user.


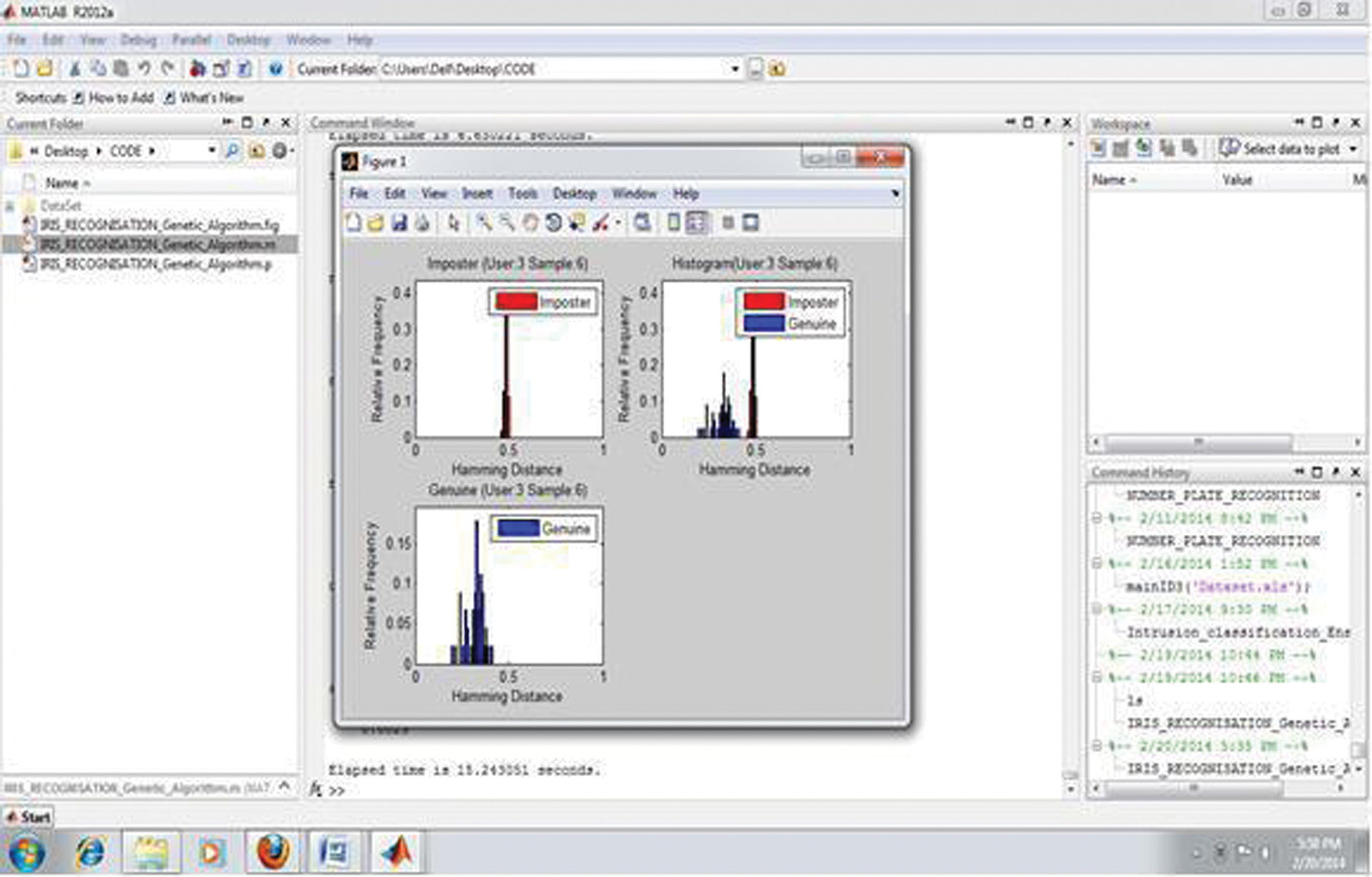

Output 4: Computed false acceptance ratio and false rejection ratio using the Hough transform with 3 users.


Output 5: Result window for the genetic algorithm with 3 users.


VI.CONCLUSION AND FUTURE WORK

Iris recognition is one of the challenging areas of the biometric field. It has become a popular and valuable trait because of its many advantages and applications in different domains, and its use is expected to expand in the near future. This study described our proposed iris recognition method, in which features are extracted by the wavelet transform. The genetic algorithm classifier was then applied to the resulting feature vector for classification. The results obtained indicate that our method can determine feature subsets with superior classification accuracy.

For future work, we intend to examine different classifiers and to work on various combinations of network models. Further studies will focus on advancing this approach by incorporating modern techniques such as deep learning to train the network, together with another evolutionary algorithm, in order to reduce memory usage and complexity.