I.INTRODUCTION

In the current societal context, the phenomenon of population aging is becoming increasingly severe [1,2]. The proportion of elderly individuals is steadily rising, along with the prevalence of chronic diseases like Alzheimer’s. Alzheimer’s disease (AD) is a neurodegenerative disorder that affects memory, cognition, and daily living skills. Early detection and intervention are crucial for slowing disease progression and improving patients’ quality of life. Therefore, the early diagnosis and intervention of AD in the elderly have become key research topics in medicine and smart elderly care.

With the rapid development of Internet of Things (IoT) technology, smart elderly care and healthcare have entered a new era [3]. Integrating IoT smart devices with cloud-based data platforms facilitates remote healthcare services for elderly individuals living alone, particularly in the early detection of chronic conditions like AD. IoT devices, such as wearables, can continuously track patients’ vital signs and physiological data, enabling more accurate health assessments and supporting clinical decisions [4].

Currently, IoT devices for Alzheimer’s detection mostly rely on expensive, specialized medical equipment or sensors, such as wearable sensors. These sensors are not only difficult to deploy but also hard for elderly individuals to accept. Using smaller, less intrusive devices such as smart speakers and microphones to collect voice and text data from seniors makes Alzheimer’s detection more efficient. This approach reduces the need for specialized equipment and increases both convenience and acceptance. However, because voice data is highly sensitive personal information, its collection, transmission, and processing pose considerable privacy challenges. Unprotected data may result in privacy breaches or be maliciously exploited. Therefore, effectively utilizing data while ensuring privacy protection has become a critical issue that IoT healthcare systems must address [5].

To address these issues, this paper proposes a privacy-preserving method for AD detection based on IoT technology. The system utilizes an architecture based on federated learning (FL) [6] and differential privacy (DP) [7] techniques. Raspberry Pi or similar computational devices are installed in the homes of elderly individuals living alone. These devices are equipped with microphones and speakers to collect voice data during the elderly’s daily activities. By playing prerecorded greetings from their children, the system simulates natural conversation scenarios. The terminal devices capture and process voice data in real time, converting it into spectrograms. Edge devices use lightweight convolutional neural networks (CNNs) to perform localized training on the spectrograms. During the training process, DP techniques protect model parameters to ensure data privacy. The trained model parameters are then securely transmitted to a cloud server, where data from different terminals are aggregated and further trained to form a more accurate global model. The optimized model is subsequently sent back to the edge devices for continuous personalized adjustments and learning, enabling early and precise detection of AD while ensuring privacy protection.

The rest of the paper is organized as follows. Section II presents the related work in AD detection and FL. Section III provides an overview of the system, while Section IV elaborates on the design. Section V outlines the experiment design and results analysis, and Section VI concludes the paper.

II.RELATED WORK

A.IoT HEALTHCARE

As AD is a severe chronic condition, IoT-based healthcare offers significant advantages over traditional screening methods, such as professional medical examinations and neuropsychological assessments [8]. It does not require complex medical equipment and can remotely detect AD in elderly individuals, enabling timely intervention by family members or healthcare providers. Individuals can use IoT devices at home for continuous health monitoring [9]. Currently, most research focuses on wearable devices for continuous tracking of health data and behavior [10]. For example, [11] developed a prototype system using wearable IoT devices to provide psychological support for AD patients and ensure secure information transmission for family members to review. In [12], mobile health applications and IoT-based wearable devices were used to assist in the continuous health screening of AD patients. Ebrahem et al. [13] developed a small, lightweight, portable IoT prototype to track AD patients in real time and remind them to take their medication through timely alerts. However, wearable sensor devices still face challenges in deployment and acceptance by elderly individuals. As a result, researchers have begun exploring smaller, nonintrusive IoT devices for AD detection and medical services for AD patients. For instance, [14] collected data from Alzheimer’s patients using sensors and smartwatches installed in their homes, enabling timely treatment. Li et al. [15] improved AD detection accuracy by deploying IoT devices in smart home environments to collect users’ audio data and analyze classical speech features. IoT-based AD detection offers more efficient, cost-effective, and convenient solutions, although its accuracy is slightly lower than that of traditional hospital-based screenings.

B.AD DETECTION METHODS

Currently, AD detection methods are primarily categorized into neuroimaging-based analysis and voice-based or text-based analysis. Neuroimaging-based detection methods diagnose AD by analyzing imaging data such as magnetic resonance imaging (MRI), positron emission tomography (PET), and functional MRI (fMRI). These methods have played a significant role in early AD detection. For example, [16] proposed a deep learning method for predicting AD, achieving excellent results on the fMRI AD imaging dataset. In [17], a depthwise separable CNN model was introduced for AD classification, significantly reducing parameters and computational costs compared to traditional neural networks. Ebrahimi et al. [18] proposed a method using transfer learning in 3D-CNN, enabling knowledge transfer from 2D image datasets to 3D image datasets. All the aforementioned methods predict AD stages using single data modalities. In contrast, [19] proposed a holistic approach that integrates multiple data modalities using deep learning, proving superior to traditional machine learning models.

Although image-based methods are effective, collecting such data is costly and often difficult. As a result, many researchers have focused on detecting AD through speech or text analysis. For example, [20] conducted a systematic evaluation of methods for detecting AD in elderly individuals through speech analysis, examining relevant features and diagnostic accuracy. In [21], speech recognition and natural language processing techniques were used to detect AD and assess its severity.

Since most AD detection methods rely on highly sensitive data, such as images or speech, privacy protection has become a critical consideration in this research. Some scholars have explored using FL for AD detection. For example, [22] proposed a hybrid FL framework that utilizes unlabeled data to train deep learning networks while ensuring data privacy protection. The authors also introduced a novel brain region attention network (BANet) that highlights important regions of interest using attention mechanisms. In [23], a hierarchical FL model with adaptive model parameter aggregation was studied to improve learning efficiency. Lakhan et al. [24] proposed a novel scheme called Evolutionary Deep Convolutional Neural Networks (EDCNNS), which focuses on convex optimization problems, aiming to minimize computation time while maximizing prediction accuracy for AD. Ouyang et al. [25] proposed an end-to-end system integrating multimodal sensors and a novel FL algorithm to detect multidimensional AD digital biomarkers in natural living environments.

III.SYSTEM OVERVIEW

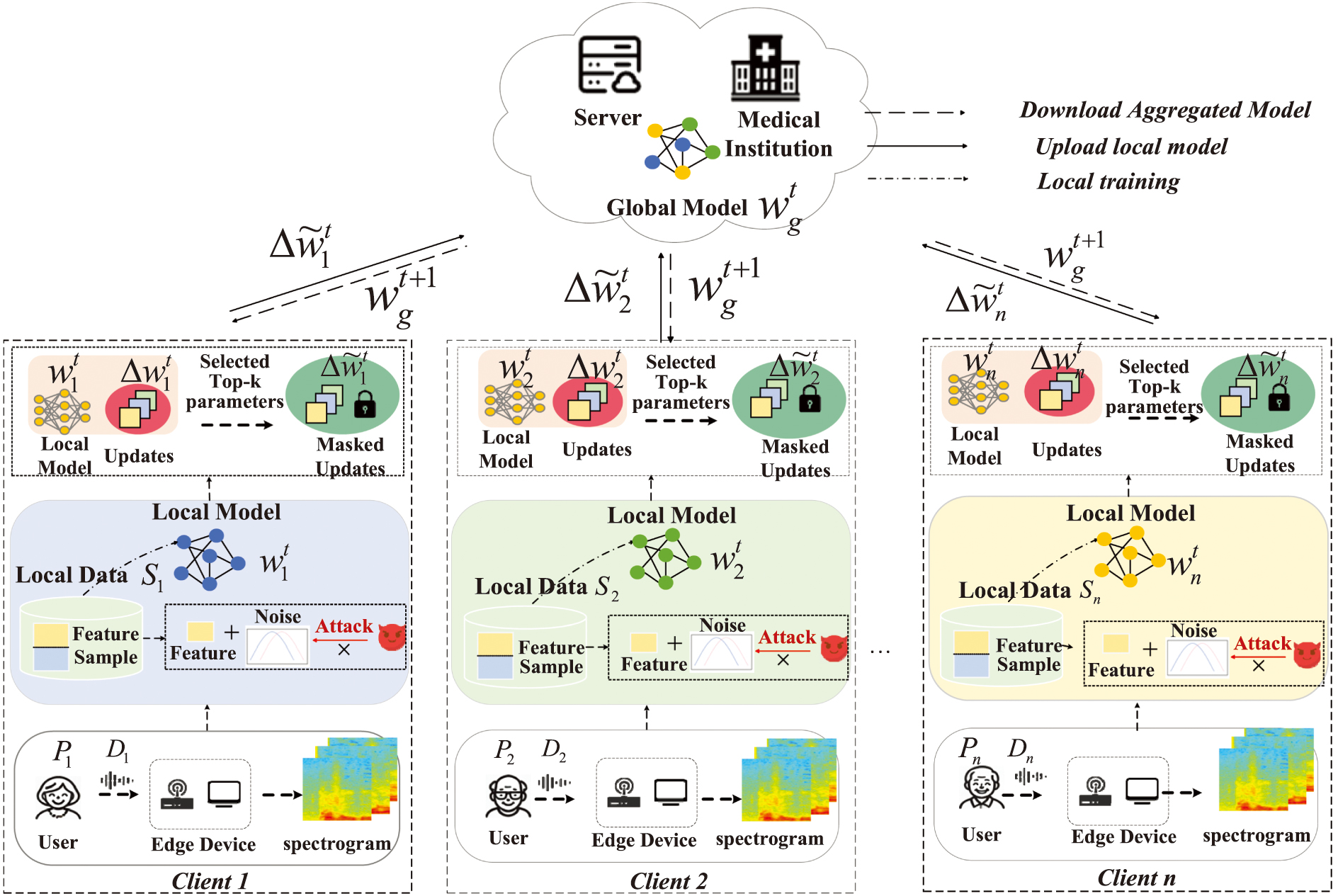

In the Efficient Differential Privacy-Alzheimer’s Detection (EDP-AD) framework, there is a global server and n clients participating in training, as illustrated in Fig. 1. The framework consists of the following steps: (1) At the current round $t$, the server of the community or medical institution initializes a global model and distributes this model to all participating clients. (2) Clients process the collected data by converting the voice data into spectrogram representations and extracting features. DP noise is then added to these features. (3) Each client downloads the global model $w^t$ from the server and trains it using its local data. Upon completion of training, each client $i$ obtains updated local model parameters $w_i^t$, resulting in model parameter updates $\Delta w_i^t$. (4) Each client employs a Top-k parameter selection method and adds a mask to perturb the parameter updates, thereby preventing adversaries from accessing the original model and compromising client privacy. The perturbed model parameter updates are then uploaded to the server for aggregation. (5) The server utilizes an aggregation algorithm to aggregate all model updates uploaded by the clients, yielding a new global model. In the next communication round $t+1$, this updated model is redistributed to the clients for further training, and the steps above are repeated until the prescribed number of training rounds is reached.

Fig. 1. System architecture diagram.


A.DESIGN GOALS

- 1.Easy Deployment: A key design goal of our system is ease of deployment in various home-based elderly care environments. For AD detection, elderly individuals only need to interact with IoT devices (such as Raspberry Pi) via voice, without requiring additional analysis or complex equipment setups.

- 2.High Performance: Due to the limited computational resources of client devices, it is essential to optimize communication overhead while maintaining high accuracy, ensuring overall system efficiency and performance.

- 3.Privacy Protection: It is crucial to protect local model parameters, user raw data, and classification features from exposure, both during data collection and AD detection, as well as during transmission over the network.

B.THREAT MODEL

In our proposed system, we assume that the central server and the clients are honest, meaning that both comply with the protocol. Although clients enhance privacy by training on their data locally, there remains a risk of privacy leakage when trained model parameters are uploaded to the central server. Specifically, attackers could infer sensitive client data by analyzing the uploaded model parameters [26]. To mitigate this risk, we apply DP techniques during local data processing and model updates, ensuring that a change to any individual data point has only a minimal impact on the overall output. This helps prevent data reconstruction attacks and privacy breaches, and protects data during transmission. In this paper, we assume attackers cannot infiltrate users’ networks to extract raw data. Based on these assumptions, our goal is to protect client privacy throughout the training process and prevent adversaries from compromising it.

IV.SYSTEM DESIGN

This section provides a detailed explanation of the overall system design and the functionality of each component. First, we introduce the formal definitions used in this paper. Table I presents the main symbols used in this work.

A.DATA COLLECTION AND PROCESSING MODULE

This subsection explains how user voice data is collected and processed. The data collection devices consist of Raspberry Pi units deployed in the homes of elderly individuals living alone. Raspberry Pi [27] is a widely used IoT device in smart elderly care systems due to its small size, easy deployment, and low cost. These devices efficiently meet the requirements for intelligent data collection and provide reliable technical support. As the system’s terminal device, the Raspberry Pi automatically initiates conversations with the elderly at preset times each day to systematically collect data. The device plays prerecorded personalized audio messages from the elderly’s children, engaging in daily communication that includes greetings, reminders, and emotional support. This nonintrusive interaction is easily accepted by the elderly, as it does not disrupt their routines while simplifying data collection and ensuring privacy and comfort. We adopted the audio data processing method proposed by Liu et al. [28]. The remainder of this subsection describes the data collection and processing procedures in detail.

Table I. Main notations used in this paper

| Notation | Explanation |
|---|---|
| $\mathcal{M}$ | Random algorithm |
| $D$, $D'$ | Adjacent datasets |
| $\epsilon$ | Total privacy budget |
| $\delta$ | Relaxation factor |
| $\Delta f$ | Sensitivity |
| $N$ | Number of participating clients |
| $T$ | Collecting time points of audio recordings from the elderly |
| $t$ | Current communication round |
| $w$ | Model parameter vector after server aggregation |
| $F_i(w)$ | Local loss function of the i-th client |
| $\Delta w_i^t$ | Model parameter updates of the i-th client in round t |
| $\Delta \tilde{w}_i^t$ | Masked model parameter updates of the i-th client in round t |
| $\Delta w_{i,j}^t$ | The j-th component of client i’s local model updates in round t |
| $w^t$ | Global model parameters in round t |
| $\eta$ | Learning rate |

Let $A = \{A_1, A_2, \ldots, A_n\}$ denote all the audio collected from the elderly individuals, where $n$ is the total number of elderly individuals from whom data are collected and $A_i$ ($1 \le i \le n$) denotes all the audio data collected from the $i$-th elderly individual. Let $A_i^T$ denote the audio data collected from the $i$-th elderly individual at the fixed time point $T$, and let $L_i$ denote the total duration of audio collected from that individual. Since the audio collected in each session is a continuous segment of varying duration, it must be divided into multiple subsegments. Let $a_{i,j}$ denote the $j$-th subsegment of the audio segment $A_i^T$. That is,

$$A_i^T = \{a_{i,1}, a_{i,2}, \ldots, a_{i,m_i}\},$$

where $m_i$ is the total number of audio subsegments for the $i$-th elderly individual. The value of $m_i$ depends on the duration of the audio segments.

B.FEATURE EXTRACTION AND NOISE ADDITION MODULE

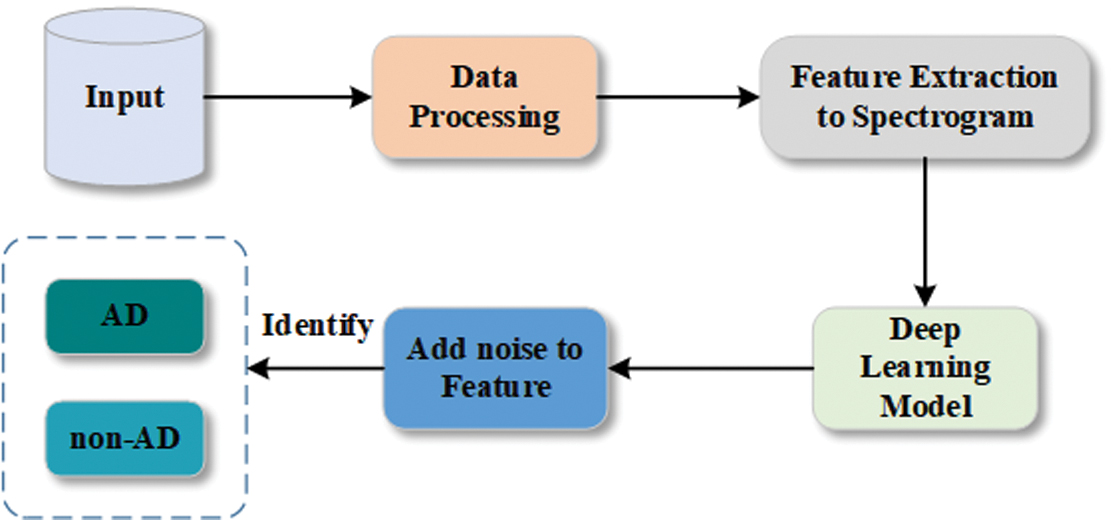

This subsection explains how the client extracts features from the data and applies DP to prevent the leakage of classification model parameters. In the AD system, after the terminal collects the audio data, it undergoes preprocessing, where spectrogram features are extracted from each audio subsegment. Random noise is added using DP to obscure internal feature correlations, thereby protecting information privacy. The detailed process is shown in Fig. 2.

Fig. 2. Data feature extraction and noise addition process.
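As a rough illustration of the preprocessing step, the sketch below splits a recording into fixed-length subsegments and computes a log-magnitude spectrogram for each one with a short-time Fourier transform. The 1-second subsegment length and 44.1 kHz sampling rate follow the dataset description in Section V; the window size, hop size, and function names are assumptions made for this example and are not taken from the deployed system or from [28].

```python
import numpy as np

def split_into_subsegments(audio, sample_rate=44100, seconds=1.0):
    """Split a 1-D recording into equal-length subsegments, dropping the tail."""
    seg_len = int(sample_rate * seconds)
    n_segments = len(audio) // seg_len
    return audio[: n_segments * seg_len].reshape(n_segments, seg_len)

def log_spectrogram(segment, n_fft=1024, hop=512):
    """Log-magnitude STFT spectrogram with shape (frequency bins, frames)."""
    window = np.hanning(n_fft)
    n_frames = 1 + (len(segment) - n_fft) // hop
    frames = np.stack([segment[i * hop : i * hop + n_fft] * window
                       for i in range(n_frames)])
    magnitude = np.abs(np.fft.rfft(frames, axis=1))  # (frames, n_fft // 2 + 1)
    return np.log1p(magnitude).T

# Synthetic 3.5 s recording (a 440 Hz tone) standing in for real speech.
sr = 44100
t = np.arange(int(3.5 * sr)) / sr
recording = np.sin(2 * np.pi * 440.0 * t)

subsegments = split_into_subsegments(recording, sr)
spectrograms = np.stack([log_spectrogram(s) for s in subsegments])
print(subsegments.shape, spectrograms.shape)
```

Dropping the incomplete tail keeps every subsegment the same length, so the resulting spectrograms can be stacked into a single array for a CNN; padding the tail instead would be an equally reasonable choice.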


The system extracts spectrogram features from the audio subsegments $a_{i,j}$, which more clearly represent the variation in speech frequency energy for each segment. Let $s_{i,j}$ denote the spectrogram features of the $j$-th audio subsegment from the $i$-th elderly individual’s audio data, where

$$s_{i,j} = f_{\mathrm{spec}}(a_{i,j}),$$

and $f_{\mathrm{spec}}(\cdot)$ is the function used to extract the spectrogram features. For the extracted spectrogram feature dataset, DP is applied to add noise, obscuring the feature information and ensuring privacy protection during the feature extraction phase. In our system, $D_i = \{s_{i,1}, s_{i,2}, \ldots, s_{i,m_i}\}$ refers to the spectrogram feature dataset of all $m_i$ audio subsegments from the $i$-th elderly individual. $D_i'$ is the adjacent dataset of $D_i$, that is, $D_i$ and $D_i'$ differ in exactly one record. The random algorithm $\mathcal{M}$ is used to train the deep neural network, and the parameter space of the network (also referred to as weights or coefficients) is denoted as $\mathcal{W}$. The definition of DP is as follows:

Definition 1 (DP). Let $D$ and $D'$ be adjacent datasets differing by only a single record, and let $\mathcal{R}$ be the output space of the random algorithm $\mathcal{M}$. The algorithm $\mathcal{M}$ satisfies $(\epsilon, \delta)$-DP if, for all sets of output results $O \subseteq \mathcal{R}$, the following inequality holds:

$$\Pr[\mathcal{M}(D) \in O] \le e^{\epsilon} \Pr[\mathcal{M}(D') \in O] + \delta.$$

Here, $\epsilon$ (privacy budget) controls the level of privacy protection, and $\delta$ is the failure probability. If $\delta = 0$, the random algorithm is said to satisfy strict DP ($\epsilon$-DP). Since $\epsilon$ controls the similarity of the random algorithm’s output between two adjacent input datasets, a smaller $\epsilon$ indicates a higher level of privacy protection, and vice versa. Common methods to achieve $(\epsilon, \delta)$-DP include the Gaussian and Laplace mechanisms, which calculate noise based on the sensitivity of the query function and add it to the output. The definition of sensitivity is provided below:

Definition 2 (Sensitivity). Given two adjacent datasets $D$ and $D'$, which differ by at most one record, and a query function $f$, the sensitivity of the query function is defined as:

$$\Delta f = \max_{D, D'} \lVert f(D) - f(D') \rVert.$$

The norm is the $\ell_1$ norm for the Laplace mechanism and the $\ell_2$ norm for the Gaussian mechanism.

When selecting the DP noise mechanism, the system considered the following two options:

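Gaussian Mechanism: For a query function $f$ with sensitivity $\Delta f$, noise drawn from a Gaussian distribution $\mathcal{N}(0, \sigma^2)$ is added to the output of $f$. The mechanism satisfies $(\epsilon, \delta)$-DP if $\sigma \ge \sqrt{2 \ln(1.25/\delta)}\, \Delta f / \epsilon$ (the classical bound, stated for $0 < \epsilon < 1$).

Laplace Mechanism: For a query function $f$ with sensitivity $\Delta f$ on dataset $D$, the mechanism satisfies $\epsilon$-DP if it has the following form:

$$\mathcal{M}(D) = f(D) + \mathrm{Lap}\!\left(\frac{\Delta f}{\epsilon}\right),$$

where the added noise follows the Laplace distribution with scale $b = \Delta f / \epsilon$, whose probability density function (PDF) is given by:

$$\mathrm{Lap}(x \mid b) = \frac{1}{2b} \exp\!\left(-\frac{|x|}{b}\right).$$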

In the proposed EDP-AD system, we utilized both of these methods and conducted corresponding evaluations.
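To make the two noise mechanisms concrete, the following minimal sketch perturbs a feature array with Laplace and Gaussian noise. It is illustrative only: the sensitivity of 1.0, the value of delta, the random stand-in features, and all function names are assumptions for the example, and the Gaussian noise level uses the classical calibration, which is stated for privacy budgets below 1.

```python
import numpy as np

def laplace_mechanism(values, sensitivity, epsilon, rng):
    """Add Laplace noise with scale b = sensitivity / epsilon."""
    scale = sensitivity / epsilon
    return values + rng.laplace(loc=0.0, scale=scale, size=values.shape)

def gaussian_sigma(sensitivity, epsilon, delta):
    """Classical Gaussian-mechanism noise level (assumes 0 < epsilon < 1)."""
    return np.sqrt(2.0 * np.log(1.25 / delta)) * sensitivity / epsilon

def gaussian_mechanism(values, sensitivity, epsilon, delta, rng):
    """Add zero-mean Gaussian noise calibrated by gaussian_sigma."""
    sigma = gaussian_sigma(sensitivity, epsilon, delta)
    return values + rng.normal(loc=0.0, scale=sigma, size=values.shape)

rng = np.random.default_rng(0)
features = rng.random((64, 64))   # stand-in for a normalized spectrogram
sensitivity = 1.0                 # assumed bound on the feature query
for eps in (0.1, 0.5):
    lap = laplace_mechanism(features, sensitivity, eps, rng)
    gau = gaussian_mechanism(features, sensitivity, eps, 1e-5, rng)
    print(eps, np.std(lap - features), np.std(gau - features))
```

In both mechanisms the noise scale is inversely proportional to the privacy budget, which is why a smaller budget gives stronger protection at the cost of noisier features.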

C.FL-BASED MODULE

In this paper, FL is used to address the issues of data silos and privacy. An FL system consists of a cloud server S, which maintains the global model, and multiple clients $C_1, C_2, \ldots, C_N$.

Each client performs multiple iterations of Stochastic Gradient Descent (SGD) to train a local model on its spectrogram dataset $D_i$. The server aggregates the local models submitted by the $N$ clients into a global model using a weighted averaging algorithm, based on the size of each client’s local dataset, thereby making accurate AD decisions. By keeping client data local and transmitting only model parameter updates, FL prevents the leakage of users’ sensitive information. During each training round, clients provide the server with parameter updates of the local model instead of directly transmitting user data. The server updates the global model from $w^t$ to $w^{t+1}$, where $t$ represents the current round. The following formula outlines the training process of FL from round $t$ to round $t+1$:

$$w^{t+1} = \sum_{i=1}^{N} \frac{|D_i|}{|D|}\, w_i^t,$$

where $t$ represents the current training round, $N$ denotes the total number of participating clients, $w_i^t$ indicates the local training parameters of the $i$-th client in round $t$, and $|D_i|$ represents the size of its local spectrogram data. $|D| = \sum_{i=1}^{N} |D_i|$ denotes the total size of the spectrogram data held by all clients.

Client Training Process: Each client $i$ participating in FL trains on its collected dataset $D_i = \{(x_j, y_j)\}_{j=1}^{|D_i|}$, where $x_j$ is a spectrogram and $y_j \in \{0, 1\}$ is its label (0 represents the healthy population and 1 represents AD patients). For each client $i$, the loss function is defined as:

$$F_i(w) = \frac{1}{|D_i|} \sum_{j=1}^{|D_i|} \ell(\hat{y}_j, y_j), \qquad \hat{y}_j = \sigma\big(h(x_j; w)\big),$$

where $\hat{y}_j$ is the predicted value of the $j$-th sample, $\sigma$ is the activation function, $y_j$ is the corresponding label, $|D_i|$ is the data size of client $i$, $h(x_j; w)$ is the network output before the activation, and $\ell$ is the per-sample classification loss.
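One common choice of local loss for a binary AD/HC classifier is binary cross-entropy on a sigmoid output. The sketch below assumes that choice, which the text does not confirm; the logits and the function names are hypothetical and serve only to show how the loss is averaged over one client's samples.

```python
import numpy as np

def sigmoid(z):
    """Logistic activation mapping raw scores to probabilities in (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))

def local_loss(logits, labels, eps=1e-12):
    """Mean binary cross-entropy over one client's samples."""
    probs = np.clip(sigmoid(logits), eps, 1.0 - eps)
    per_sample = -(labels * np.log(probs) + (1 - labels) * np.log(1 - probs))
    return float(np.mean(per_sample))

logits = np.array([2.0, -1.0, 0.5, -3.0])  # hypothetical model outputs
labels = np.array([1, 0, 1, 0])            # 1 = AD, 0 = healthy
print(local_loss(logits, labels))
```

Clipping the probabilities avoids taking the logarithm of zero when the model is extremely confident.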

AD Detection Process: After training, the client’s model is optimized to minimize the loss function. When voice data is received from the user, the client can predict the result as either AD or healthy (HC).
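The weighted averaging step performed by the server in this subsection can be sketched as follows. This is a minimal illustration with toy parameter vectors; the function name `fedavg` and the example values are assumptions for the example, not the system's actual implementation.

```python
import numpy as np

def fedavg(client_params, client_sizes):
    """Average client parameter vectors, weighted by local dataset size."""
    sizes = np.asarray(client_sizes, dtype=float)
    weights = sizes / sizes.sum()
    return np.tensordot(weights, np.stack(client_params), axes=1)

# Three toy clients holding 100, 200, and 100 spectrograms respectively.
client_params = [np.array([1.0, 2.0]), np.array([3.0, 4.0]), np.array([5.0, 6.0])]
client_sizes = [100, 200, 100]
global_params = fedavg(client_params, client_sizes)
print(global_params)  # [3. 4.]
```

Because the weights sum to one, averaging the local parameters is equivalent to adding the size-weighted average of the client updates to the previous global model.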

D.TOP-K-BASED UPDATES SELECTION AND MASKING MODULE

Uploading local model parameters from each client to the server in FL results in significant communication overhead [29]. We propose a mask-based sparse update strategy to reduce communication costs. Sparsification is a widely used technique in deep learning to improve communication efficiency in distributed training [30–32]. Inspired by previous work, this paper aims to reduce communication overhead in FL by discarding less important parameter updates while minimizing the impact on model performance. Specifically, we select the model parameter updates with the largest absolute values for model aggregation and updating.

Assume that during the client’s update process, the initial model weights are $w^t$, and the model weights of the $i$-th client after training on its private dataset in round $t$ are $w_i^t$, with the corresponding update being $\Delta w_i^t$:

$$\Delta w_i^t = w_i^t - w^t,$$

where $w^t$ represents the global model parameters from round $t$ and $w_i^t$ represents the locally trained model parameters of client $i$. The individual components of the update are denoted as $\Delta w_{i,j}^t$, and the absolute value of each component of the update is calculated as $|\Delta w_i^t|$:

$$|\Delta w_i^t| = \left\{ |\Delta w_{i,1}^t|, |\Delta w_{i,2}^t|, \ldots, |\Delta w_{i,d}^t| \right\},$$

where $\Delta w_{i,j}^t$ represents the $j$-th component of the update vector and $d$ is the number of model parameters. Let $\mathrm{Top}_k(|\Delta w_i^t|)$ represent the $k$ maximum values from the set of parameter updates of the $i$-th client. To sparsify the client’s update $\Delta w_i^t$, we use a masking function to generate a 0–1 mask matrix $\mathbf{M}_i^t$, which is a binary vector of the same dimension as $\Delta w_i^t$:

$$\mathbf{M}_{i,j}^t = \begin{cases} 1, & |\Delta w_{i,j}^t| \in \mathrm{Top}_k(|\Delta w_i^t|), \\ 0, & \text{otherwise}. \end{cases}$$

Thus, after applying the mask matrix $\mathbf{M}_i^t$ to the update vector $\Delta w_i^t$, the sparsified update vector $\Delta \tilde{w}_i^t$ is obtained, that is,

$$\Delta \tilde{w}_i^t = \mathbf{M}_i^t \odot \Delta w_i^t,$$

where $\odot$ represents the Hadamard product. After sparsification, the $k$ largest update components are retained, while the other values are set to zero. As a result, the sparsified update vector $\Delta \tilde{w}_i^t$ never has more nonzero elements than the original vector $\Delta w_i^t$, and typically has far fewer. By adjusting the value of $k$, the sparsity of the local updates can be controlled, improving the efficiency of uploading model updates. Table II presents Algorithm 1, which is based on the Top-K sparsification mask.

Table II. Top-K-based updates and mask selection algorithm

Algorithm 1. Federated learning with Top-k updates selection and masking
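The client-side selection and masking step can be illustrated with the short sketch below. It is not a reproduction of Algorithm 1: the function names are hypothetical, and treating the selection parameter as the fraction of components to keep is an assumption based on the setting of 0.3 reported in the experiments.

```python
import numpy as np

def top_k_mask(update, ratio):
    """Binary mask keeping the `ratio` fraction of entries with largest |value|."""
    k = max(1, int(round(ratio * update.size)))
    flat = np.abs(update).ravel()
    keep = np.argpartition(flat, -k)[-k:]   # indices of the k largest magnitudes
    mask = np.zeros(flat.shape, dtype=update.dtype)
    mask[keep] = 1
    return mask.reshape(update.shape)

def sparsify(local_weights, global_weights, ratio):
    """Compute the local update and zero out all but its top-k components."""
    update = local_weights - global_weights
    mask = top_k_mask(update, ratio)
    return mask * update, mask              # element-wise (Hadamard) product

global_w = np.zeros(10)
local_w = np.array([0.5, -0.1, 0.02, -0.9, 0.3, 0.0, 0.07, -0.4, 0.01, 0.2])
sparse_update, mask = sparsify(local_w, global_w, ratio=0.3)
print(sparse_update)
```

In this toy example only the three largest-magnitude components (0.5, -0.9, and -0.4) survive. In practice a client would upload only the nonzero values together with their indices, which is where the communication saving comes from.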

V.EXPERIMENTS AND PERFORMANCE ANALYSIS

In this section, we first introduce the experimental setup, including the dataset, model architecture, and algorithm. Then, we evaluate the system’s performance in terms of privacy levels, number of participants, and communication overhead.

A.DATASET AND SETTINGS

The dataset used in this paper is the Voice-Based Spectrogram Dataset (VBSD), a real-world dataset collected by our team and published in [28]. It comes from wearable IoT devices, with each audio sample having a sampling rate of 44.1 kHz and a duration of 1 second. Spectrogram features are extracted from the audio data and fed into the neural network model. The authors of [28] extracted 254 speech samples from AD patients and 250 from healthy controls (HCs), collected from 36 participants, resulting in a total of 504 speech samples and corresponding spectrogram features.

Table III shows the age and gender distribution of the collected data. Speech data were collected from 23 AD patients aged 65 to 94 years, from which 254 spectrogram features were extracted. Additionally, speech data were collected from 13 healthy elderly individuals aged 65 to 92 years, resulting in 250 spectrogram features.

Table III. Age and gender distribution of the collected data

| Group | Age range (years) | Males | Females |
|---|---|---|---|
| AD | 65–94 | 10 | 13 |
| HC | 65–92 | 5 | 8 |

In this paper, classification accuracy, precision, recall, F1 score, communication overhead, and privacy protection strength are used as evaluation metrics for the model. We build a neural network model using the PyTorch deep learning framework with Python version 3.10.14. Experiments are conducted on a 64-bit Ubuntu 22.04.3 system using a single NVIDIA GeForce RTX 3080 Ti GPU with 64 GB of memory.
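For reference, the four classification metrics can be computed from the confusion-matrix counts as in the sketch below, with AD treated as the positive class. The labels and predictions are made-up toy values, and the function name is an assumption for the example.

```python
import numpy as np

def binary_metrics(y_true, y_pred):
    """Accuracy, precision, recall, and F1 with label 1 (AD) as positive."""
    y_true = np.asarray(y_true)
    y_pred = np.asarray(y_pred)
    tp = int(np.sum((y_true == 1) & (y_pred == 1)))
    tn = int(np.sum((y_true == 0) & (y_pred == 0)))
    fp = int(np.sum((y_true == 0) & (y_pred == 1)))
    fn = int(np.sum((y_true == 1) & (y_pred == 0)))
    accuracy = (tp + tn) / len(y_true)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if (precision + recall) else 0.0)
    return {"accuracy": accuracy, "precision": precision,
            "recall": recall, "f1": f1}

y_true = [1, 1, 1, 1, 0, 0, 0, 0]   # toy ground truth
y_pred = [1, 1, 1, 0, 0, 0, 1, 0]   # toy predictions
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

With three true positives, three true negatives, one false positive, and one false negative, all four metrics equal 0.75 in this toy case.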

B.EXPERIMENTS PERFORMANCE COMPARISON AND ANALYSIS

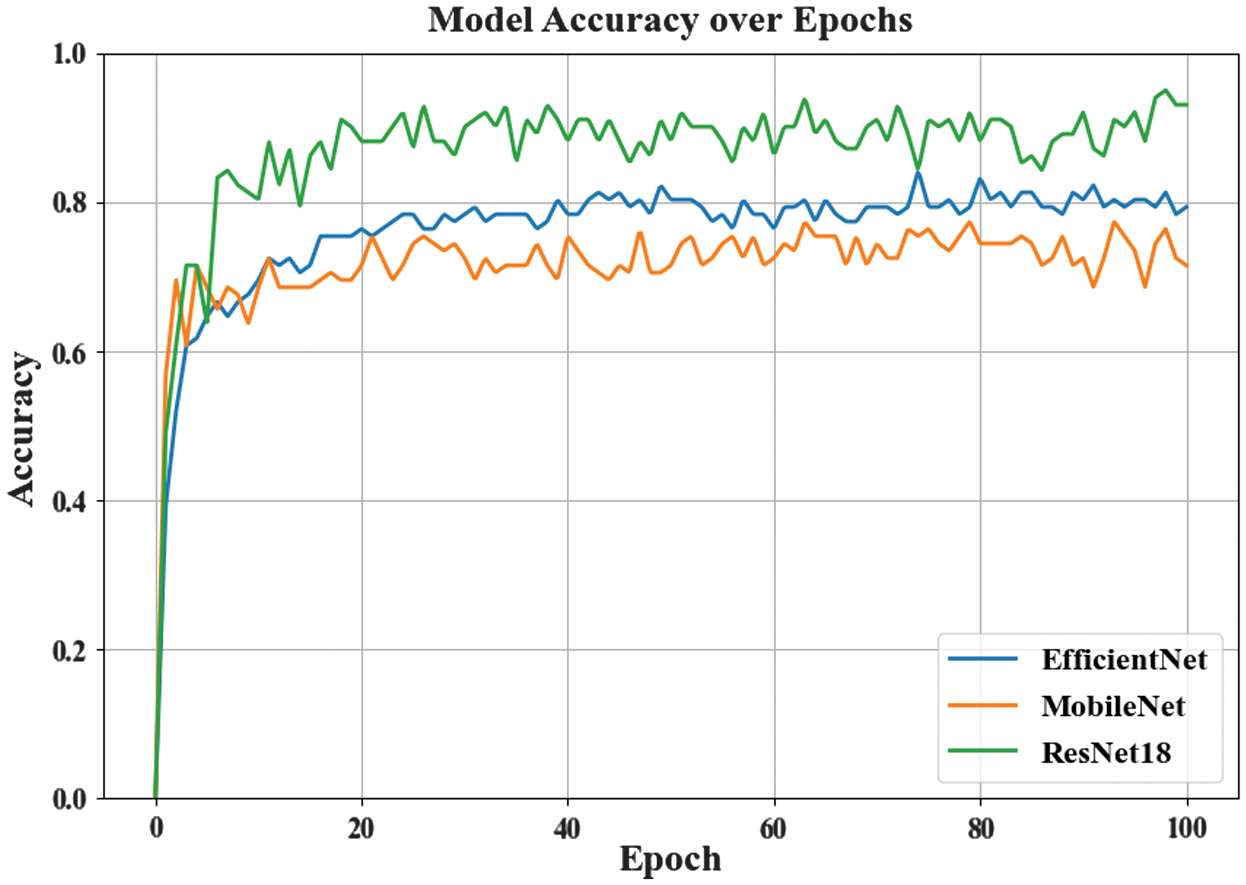

- 1.Comparison of System Recognition Accuracy Across Different Models: In the experiments for the AD detection system, we have evaluated three neural network architectures: ResNet18, MobileNet, and EfficientNet on the VBSD dataset. The learning rate is set to 0.001, and distributed training is conducted with three clients. As shown in Fig. 3, among these models, ResNet18 demonstrated significant performance advantages. As the number of training rounds increases, the accuracy approaches 95%, reaching a maximum of 95.1%. In contrast, the accuracy of MobileNet and EfficientNet is lower compared to the ResNet18 model, with maximum accuracies of 77.5% and 81.4%, respectively. The results indicate that the ResNet18 model used in FL performs better on the actual dataset. In FL, the training accuracy curve exhibits certain fluctuations, primarily due to uneven data distribution and differences in client training environments. Different clients may have distinct feature distributions in their data, leading to fluctuations during model updates aggregation. Additionally, variations in computational resources and training epochs among clients can result in inconsistent updates quality, further exacerbating performance fluctuations after model merging. These factors collectively contribute to the fluctuations in the accuracy curve during the FL training process, and the ability of ResNet18 to maintain high performance in such an environment, thanks to its stable architecture, is a key reason for our choice of this model.

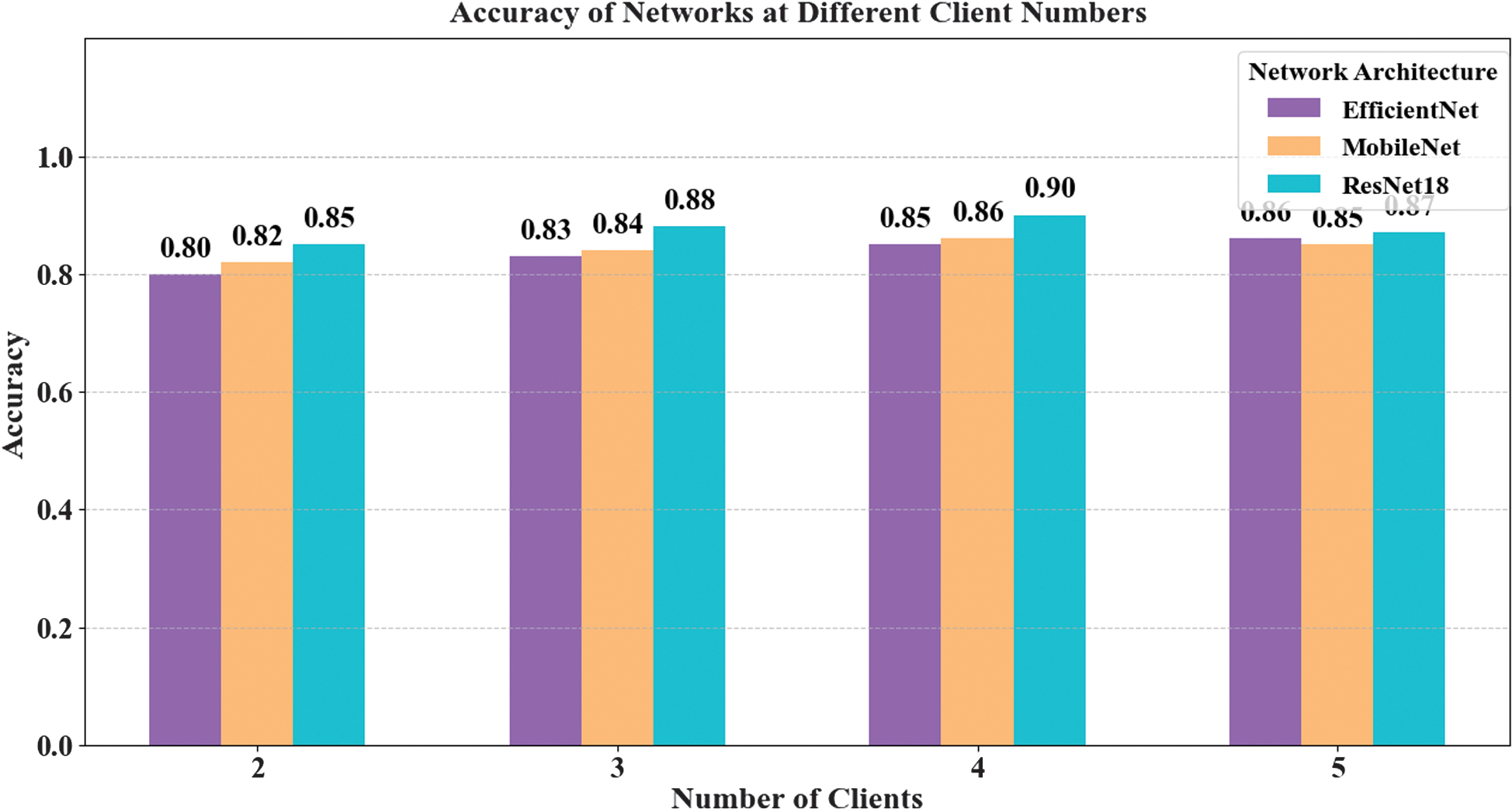

- 2.The Impact of Different Numbers of Clients on System Recognition Accuracy: As shown in Fig. 4, by comparing the performance of ResNet18, MobileNet, and EfficientNet with different numbers of clients, we analyze the impact of client numbers on AD detection accuracy in an FL environment. ResNet18 consistently achieved the highest accuracy across all configurations, particularly when the number of clients was 4, where its accuracy approached 90%, significantly higher than that of the other networks.

Fig. 3. AD detection accuracy across different models.


Fig. 4. The impact of client numbers on accuracy across different models.


This result is attributed to ResNet18’s residual connection structure, which effectively maintains gradient flow stability and addresses the challenges of distributed data training. Additionally, we observed that as the number of clients increased, the accuracy of all networks fluctuated slightly but tended to stabilize overall. This suggests that the non-independent and identically distributed (Non-IID) nature of the data and communication efficiency between clients significantly impact model performance. As the number of clients increases, model aggregation becomes more complex.

- 3.Evaluation Results for Different Models: As shown in Table IV, we have evaluated three neural network models—EfficientNet b0, MobileNet v2, and ResNet18—on four metrics: accuracy, precision, recall, and F1 score, within the FL system. These metrics are essential for assessing the effectiveness of models in applications requiring reliable classification. Upon observation, it is clear that ResNet18 is the most robust model, performing well across all metrics and proving highly effective in a distributed environment. In contrast, EfficientNet and MobileNet show limitations in classification capabilities, making them less effective when handling complex and highly heterogeneous data.

Table IV. Evaluation results of the system across different models

| Model type | Accuracy (%) | Precision (%) | Recall (%) | F1-score (%) |
|---|---|---|---|---|
| EfficientNet b0 | 82.35 | 83.43 | 84.74 | 84.43 |
| MobileNet v2 | 76.47 | 77.13 | 87.52 | 76.43 |
| ResNet18 | | | | |

The Impact of Differential Privacy on the Model Under Different Privacy Budgets: To evaluate the impact of the DP mechanism on the system, we apply both the Laplace and Gaussian mechanisms with different privacy budgets to the ResNet18 model. As shown in Table V, the accuracy of both mechanisms generally declines as the privacy budget decreases, because more noise is added to strengthen data protection. Additionally, the Gaussian mechanism achieves higher accuracy than the Laplace mechanism at every tested privacy budget.

Table V. Accuracy of ResNet18 under the Gaussian and Laplace mechanisms for different privacy budgets

| Privacy budget | Gaussian accuracy (%) | Laplace accuracy (%) |
|---|---|---|
| ε = 0.1 | 80.02 | 76.67 |
| ε = 0.5 | 81.57 | 78.89 |
| ε = 1.0 | 82.19 | 80.14 |
| ε = 1.5 | 85.56 | 82.37 |
| ε = 2.0 | 83.24 | 82.67 |
| ε = 2.5 | 84.41 | 83.32 |
| ε = 3.0 | 88.89 | 84.75 |

Furthermore, we observed that even with a privacy budget of 0.1, the system still maintains an accuracy of 80.02% under the Gaussian mechanism and 76.67% under the Laplace mechanism.

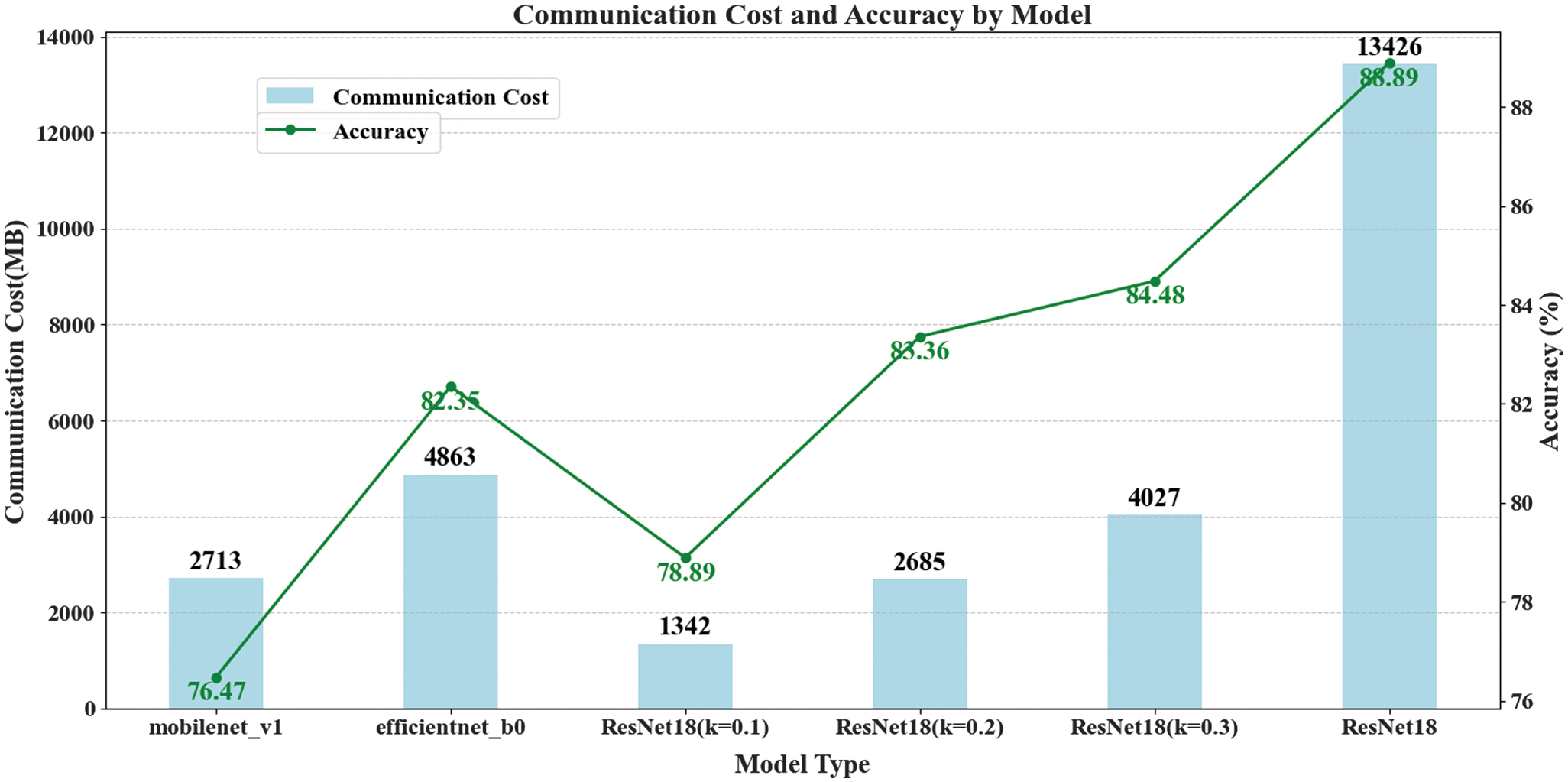

- 4.Communication Overhead Across Different Models

To evaluate the impact of the Top-k updates and masking selection algorithm on communication, we measured the communication overhead incurred by data uploads. As shown in Fig. 5, the communication overhead of the entire system reached up to 13,426 MB without any sparsification applied to the model. By comparing different values of $k$, we found that when $k = 0.3$, the communication cost for the ResNet18 model was reduced to 4,027 MB, a reduction of roughly 70% in communication volume, while still achieving an accuracy of 84.48%. It is evident from the figure that although the lightweight models EfficientNet b0 and MobileNet v2 have significant advantages in terms of communication overhead, their accuracy in AD recognition is considerably lower. The communication volume for the EfficientNet b0 network reached 4,863 MB, with an accuracy of 82.35%. In contrast, with $k = 0.3$, ResNet18 not only required less communication volume but also achieved an AD detection accuracy 2.13 percentage points higher. This demonstrates the effectiveness of the Top-k updates and masking selection algorithm in balancing communication efficiency and model performance. It also enhances the applicability of the model in resource-constrained environments and provides a feasible technical pathway for developing efficient and accurate machine learning models.
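The figures quoted above can be checked with a few lines of arithmetic. The sketch below uses only the numbers reported in this subsection; the variable names are chosen for the example.

```python
dense_mb = 13426          # upload volume without sparsification (MB)
sparse_mb = 4027          # ResNet18 upload volume with sparsification (MB)
efficientnet_mb = 4863    # EfficientNet b0 upload volume (MB)

retained = sparse_mb / dense_mb
reduction = 1.0 - retained
accuracy_gain = 84.48 - 82.35   # ResNet18 (sparsified) vs. EfficientNet b0

print(f"retained: {retained:.1%}, reduction: {reduction:.1%}")
print(f"accuracy gain: {accuracy_gain:.2f} percentage points")
print(f"saving vs. EfficientNet b0: {efficientnet_mb - sparse_mb} MB")
```

The retained fraction comes out at about 30% of the dense upload volume, which matches the selection setting of 0.3, and the sparsified ResNet18 uploads 836 MB less than EfficientNet b0.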

VI.CONCLUSION

In this paper, we proposed a privacy-preserving AD detection system based on FL, designed for low-cost AD detection. The system utilized IoT devices, such as Raspberry Pi, to collect and preprocess audio data, while employing DP mechanisms and an FL framework to prevent raw data and model parameters from leaking during transmission. Additionally, the Top-k-based sparsification strategy reduced communication overhead. Experiments on the VBSD dataset showed that ResNet18 reached up to 95.1% accuracy in the federated setting, and that Top-k sparsification reduced the communication volume from 13,426 MB to 4,027 MB while retaining 84.48% accuracy.

For future work, we plan to deploy this system in real-world environments for broader field testing to evaluate its performance and practicality in everyday settings. We also aim to explore more advanced machine learning algorithms to improve diagnostic accuracy, and to consider incorporating additional sensor data, such as video and physiological signals, for more comprehensive symptom monitoring and analysis. Furthermore, we will focus on optimizing data synchronization and model update processes to reduce energy consumption and enhance responsiveness, making the system more suitable for resource-constrained devices.