I.INTRODUCTION

Healthcare applications worldwide generate an enormous amount of data with distinct characteristics. Most healthcare data is multidimensional, which complicates the application of traditional learning models such as decision trees and random forests. Contemporary machine learning (ML) models, particularly those based on deep learning (DL), can address the challenges of multi-dimensional data because they learn feature representations on their own. DL is instrumental in the healthcare sector for identifying life-threatening illnesses. However, it has certain constraints. Effective training of a DL model requires a significant volume of data, yet each hospital holds only a limited amount, so a single data source is inadequate for training a proficient DL model. One potential approach is to acquire data from diverse sources and train the model on the pooled data. A significant concern with this approach is privacy. Because medical data is sensitive and confidential, individual sources may be reluctant to share their data with a centralized data collector.

Google introduced the concept of federated learning (FL) as an answer to the dilemma of balancing data availability and privacy concerns [1]. FL is an approach that trains an ML model collaboratively, without the need for centralized training data. FL facilitates the collaborative learning of a shared ML model by edge devices or servers possessing adequate computational capabilities, such as mobile phones, wearables, home computers, and other Internet of Things (IoT) devices. This approach ensures that all the data remains with the edge devices [2], thereby decoupling the capability to perform learning from the necessity of centrally storing the data at a single server or in the cloud.

A tremendous amount of data has been generated due to the extensive use of IoT devices in the modern day [3]. IoT devices can amass a substantial volume of data on a daily basis. The accumulation of information and the rapidly growing computational resources have opened new possibilities in the field of information technology, particularly in the realm of DL. This has raised concerns about data security and data privacy in IoT devices, which this paper addresses by implementing federated model-based learning.

An electrocardiogram (EKG or ECG) is a vital diagnostic tool in healthcare, particularly for cardiac care. According to the 2022 records of the National Crime Records Bureau, heart attack cases rose by 12.5%. An ECG records the electrical signals in the heart to check the heartbeat’s rate and rhythm, helping diagnose conditions such as arrhythmias, heart attacks, or structural heart diseases [4]. ECGs are also important in preventive medicine, enabling early detection of potential heart issues, even in asymptomatic individuals, which allows for timely interventions and lifestyle modifications to prevent further complications [5].

ECG arrhythmia, commonly known as cardiac arrhythmia, is a medical disorder marked by abnormal heart rhythms. These irregularities can occur in the heart’s electrical system, causing it to beat too quickly, too slowly, or with an irregular pattern. Arrhythmias can vary in severity and may lead to serious health issues if left untreated.

Privacy in the healthcare system is critical for preserving patient confidence, guaranteeing legal compliance, and preventing discrimination. Trust is essential, as patients are more inclined to seek treatment if they believe their personal health information is secure. Legal frameworks such as HIPAA enforce privacy standards, ensuring that patient data are secure [6]. Furthermore, privacy helps prevent discrimination, especially for conditions such as mental health or genetic disorders, where unauthorized sharing of information could lead to stigmatization [7]. As healthcare increasingly relies on digital platforms, the security of electronic health records becomes vital to protect against breaches [8]. Additionally, patient privacy encourages participation in health research, fostering advancements in medical knowledge while ensuring confidentiality [9]. Thus, privacy safeguards enhance both the integrity and efficacy of healthcare delivery.

Automated ECG interpretation is an essential part of clinical evaluation. The growth of digital ECG data made possible by the Internet of Medical Things, together with the DL algorithm paradigm, offers a great opportunity to improve the accuracy and scalability of automated ECG analysis [10]. There has never been a thorough evaluation of an end-to-end DL approach for ECG analysis covering a wide variety of diagnostic categories. Recent advancements in DL have significantly improved ECG classification accuracy.

However, the need for secure and efficient models has become increasingly critical due to growing concerns over patient data privacy and regulatory compliance. The literature suggests that privacy-preserving FL and optimized neural architectures can enhance both data security and diagnostic accuracy. Despite these advancements, existing methods face challenges such as non-IID data distribution, communication overhead, and suboptimal model selection in federated environments. This research addresses these issues by proposing an optimized federated DL framework that ensures both privacy protection and performance optimization in ECG classification.

This paper is structured as follows. Section II covers the related work, while Section III presents the proposed methodology, which includes information regarding arrhythmias and common classes, the dataset used, noise elimination, and model optimization at data centers. Section IV presents a comparative analysis of existing and proposed methods. Conclusions and future scope are discussed in Section V.

II.RELATED WORK

Prior research has explored the diagnostic potential of ECG signals in detecting and classifying heart diseases, leveraging advancements in ML, DL, and signal processing techniques. Effective analysis of ECG signals enables accurate identification of cardiovascular abnormalities, facilitating timely interventions and improving patient outcomes. This section presents research on the detection of artifacts in ECG signals using various hybrid ML algorithms.

Benmalek et al. [11] compared six micro-CNN architectures for ECG signal classification using scalograms derived from continuous wavelet transform (CWT) coefficients. Scalograms provided efficient time–frequency analysis, but the choice of CWT parameters and scalogram resolution may impact classification performance. The CNNs showed promising ECG classification results, combining high accuracy with short training time. Chen et al. [12] proposed an optimization process and hyperparameter tuning for improved accuracy.

Singh et al. [13] studied various classification methods, including the recurrent convolutional neural network (RCNN), for categorizing ECG signals. Although RCNNs improved the outcomes, they require more complex architectures and longer training times. Li et al. [14] combined a deep CNN with bidirectional long short-term memory to extract ECG features for signal classification. The approach attained an accuracy of 89.30% and an F1 score of 0.891. This method enhanced ECG classification precision and facilitated self-monitoring of atrial fibrillation.

Gad [15] explored ECG report images for efficient diagnostic classification. Although this approach improved efficiency, it was limited to specific ECG image formats. Ayano et al. [16] evaluated the DL methods for arrhythmia identification using ECG signals. They assessed accuracy, specificity, precision, and F1 score. However, this study did not explore optimizing the architectures.

Alfaras [17] proposed a disease-specific attention-based DL model (DANet). While the model shows promise, it has been evaluated on a limited range of arrhythmia types. Also, the integration of soft-coding or hard-coding waveform enhancement into existing neural networks could introduce complexity. Shinichi et al. [18] studied the application of an FL approach to develop models for the detection of hypertrophic cardiomyopathy utilizing ECG and echocardiography data. This model showed high performance.

Gutierrez et al. [19] employed FL methodologies to train AI models on 12-lead ECG data gathered from six heterogeneous sources. The models achieved performance comparable to state-of-the-art centralized learning approaches and were shown to be appropriate for cloud-edge architectures due to their lower complexity and quicker training times. Zuobin Ying et al. [20] introduced FedECG, a federated semi-supervised learning framework created to predict ECG abnormalities. The model outperformed state-of-the-art distributed methods by ∼3% by using pseudo-labeling and data augmentation techniques to achieve 94.8% accuracy with only 50% of the data labeled.

Weimann K et al. [21] proposed a privacy-preserving FL framework for detecting anomalies in ECG signals, utilizing homomorphic encryption to secure model updates. While the approach maintained data confidentiality, the added computational burden from encryption operations was not thoroughly evaluated. Zhuo Chen et al. [22] presented a hierarchical federated learning model that enhances distributed learning by organizing clients into clusters based on data similarity. The authors presented an adaptive model parameter aggregation technique that dynamically adjusts client contributions to improve global model accuracy compared to traditional FL methods. By addressing non-IID data distributions, the model enhances learning effectiveness across diverse datasets. The approach is scalable and well-suited for large federated networks, particularly in healthcare and IoT applications. The study advances FL methodologies by improving communication efficiency and model performance.

Lee et al. [23] introduced FedLAMA, a layer-wise adaptive model aggregation scheme designed to improve communication efficiency in FL. Recognizing that different neural network layers exhibit varying degrees of model discrepancy across clients, the method adjusts the aggregation interval of each layer according to its model discrepancy and communication cost. This targeted synchronization reduces unnecessary data transmission while maintaining model accuracy comparable to traditional methods like FedAvg. However, implementing layer-wise aggregation introduces additional complexity in managing synchronization schedules for each layer. Maytham N. Meqdad et al. [24] introduced an aggregation method in FL to tackle imbalanced data, employing averaging stochastic weights and a multivariate Gaussian model to improve detection accuracy. The suggested technique attained 87.98% accuracy in testing with the VGG19 architecture.

The literature underscores the increasing demand for secure and efficient DL models in ECG classification, emphasizing the need for both data privacy and enhanced diagnostic accuracy. This motivated us to use FL as a promising solution, enabling secure, decentralized model training without compromising patient data privacy. Additionally, studies indicate that optimized neural network architectures incorporating techniques such as the genetic algorithm (GA) and particle swarm optimization (PSO) can significantly enhance model accuracy and robustness.

Building on these insights, we propose a novel federated DL framework that integrates optimized neural architectures for ECG artifact identification and classification. Unlike conventional FL approaches, our method dynamically selects the best-performing model at each institution before federated aggregation, ensuring both efficient learning and improved generalization. This dual optimization strategy enhances both security and predictive performance, making it a scalable and privacy-compliant solution for real-world ECG analysis. The following section details the proposed methodology and its implementation.

III.PROPOSED METHODOLOGY

This work proposes a multi-tiered FL framework that organizes clients into clusters based on data similarity, enhancing communication efficiency and model performance.

This approach also introduces an adaptive aggregation method that dynamically adjusts the weighting of model updates from different clients, considering factors such as data distribution and model divergence, leading to more accurate and robust global models.

These innovations address challenges inherent in FL, such as data heterogeneity and communication overhead, by improving scalability and personalization in ECG classification tasks.

In this paper, ECG arrhythmia classification is performed using four different networks, viz., an optimized CNN (OCNN), an optimized RNN using long short-term memory (LSTM), and optimized ANNs using PSO and GA. These algorithms aim to detect and classify distinct types of cardiac arrhythmias from ECG signals.

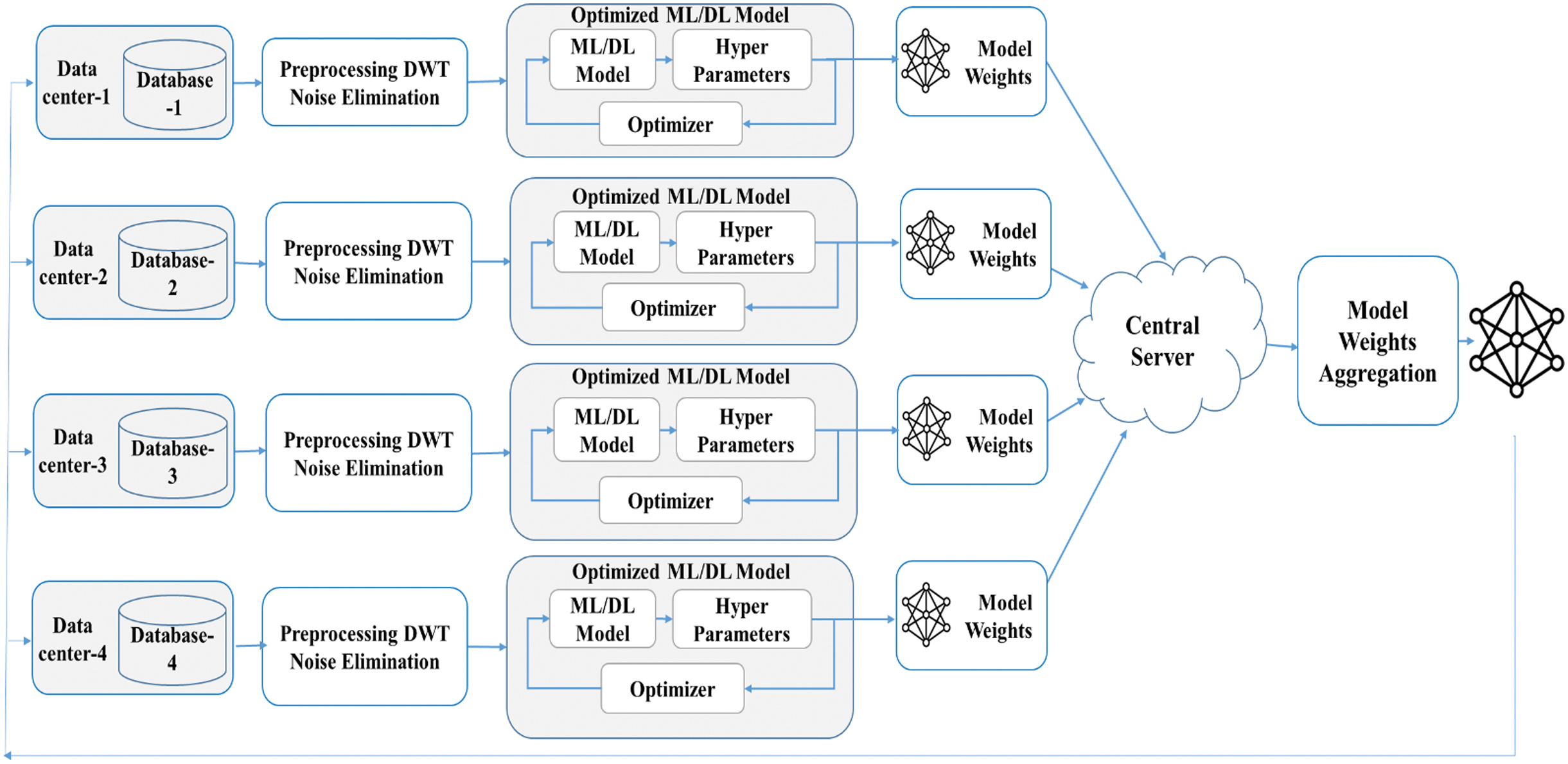

The proposed system architecture is shown in Fig. 1. It consists of several blocks, each playing a critical role in the preprocessing, training, and aggregation stages of the FL process. The details of each block are as follows.

Fig. 1. Proposed system architecture.


A.ECG DATABASE

The ECG signals under consideration encompass sixteen types of heartbeats, which are categorized into two main groups: Normal heartbeats and Arrhythmia heartbeats.

The MIT-BIH (Massachusetts Institute of Technology-Beth Israel Hospital) Arrhythmia Database is publicly available and can be accessed through the PhysioNet platform. It is a valuable resource that has significantly contributed to advancements in the field of cardiovascular disease identification using ML. Its realistic representation of arrhythmias and comprehensive annotations make it a go-to dataset for detecting cardiac arrhythmias, that is, abnormal heart rhythms, in cardiac health monitoring.

The dataset consists of 48 half-hour excerpts of two-channel, 24-hour ECG recordings obtained from 47 subjects, each sampled at 360 Hz [25]. The ECG recordings carry beat annotations that identify the type of each beat (normal, premature ventricular contractions, atrial premature contractions, etc.). Source: http://www.physionet.org [26].

Annotations are typically marked by experienced cardiologists, making the dataset suitable for training and evaluating arrhythmia detection algorithms.

B.ARRHYTHMIAS

Arrhythmias are abnormal heart rhythms that can lead to various cardiac disorders. Detecting and classifying arrhythmias is crucial for diagnosing and treating heart conditions.

Common Classes of Arrhythmias

Arrhythmias are generally classified into the following five main categories:

- 1.Normal (N): This represents a normal sinus rhythm, where the electrical activity of the heart follows a typical, healthy pattern. There is no significant deviation in heart rate or rhythm [27].

- 2.Left Bundle Branch Block (LBBB): This arrhythmia appears when the electrical signal in the left bundle branch of the heart’s conduction system is blocked or delayed [27].

- 3.Right Bundle Branch Block (RBBB): Similar to LBBB, this arrhythmia occurs when the electrical signals are delayed or blocked, but this time in the right bundle branch [27].

- 4.Atrial Premature Contraction (APC or A): These are early heartbeats originating from the atria, causing the heart to beat out of its normal rhythm. Atrial premature contractions can lead to irregular heart rates and palpitations [27].

- 5.Ventricular Premature Contraction (VPC or V): This occurs when the ventricles of the heart beat prematurely, disrupting the normal cardiac cycle. VPCs can feel like a skipped heartbeat and are often seen in patients with heart conditions [27].

C.DATA CENTERS AND DATABASES

These are individual entities, e.g., hospitals, clinics, research institutions, etc., that host their own databases containing raw ECG signals. Each data center operates independently and does not share raw data with others. This data privacy approach is key in FL, where only model weights are shared with the central server to prevent leakage of sensitive data.

Each data center receives an equal portion of the dataset as shown in Table I, ensuring that all the data centers work with the same amount of data. Equal splitting helps ensure that models trained on data from different centers have similar exposure to the different types of heartbeats, leading to more consistent results across the centers. By evenly distributing the data, the process helps avoid any biases that could arise from one data center having more data than another.

Table I. Data splitting to the data centers

| Class | Total | DC1 (original) | DC2 (original) | DC3 (original) | DC4 (original) | DC1 (balanced) | DC2 (balanced) | DC3 (balanced) | DC4 (balanced) |
|---|---|---|---|---|---|---|---|---|---|
| N | 75011 | 18752 | 18752 | 18752 | 18752 | 2000 | 2000 | 2000 | 2000 |
| L | 8071 | 2017 | 2017 | 2017 | 2017 | 2000 | 2000 | 2000 | 2000 |
| R | 7255 | 1813 | 1813 | 1813 | 1813 | 2000 | 2000 | 2000 | 2000 |
| A | 2546 | 636 | 636 | 636 | 636 | 2000 | 2000 | 2000 | 2000 |
| V | 7129 | 1782 | 1782 | 1782 | 1782 | 2000 | 2000 | 2000 | 2000 |
| Total (balanced) | | | | | | 10000 | 10000 | 10000 | 10000 |

The "original" columns give the original samples after data splitting for the data centers; the "balanced" columns give the up- and down-sampled data after data balancing.

The dominant class (N) is down-sampled to match the number of samples in the minority classes. The minority classes (L, R, A, V) are up-sampled to reach the same number of samples as the other classes.
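The balancing step can be sketched as follows. This is a minimal illustration, assuming plain random resampling (down-sampling without replacement, up-sampling by drawing duplicates); the paper does not state which resampling technique was used, and the helper name `balance_classes` and the integer stand-ins for heartbeats are hypothetical.

```python
import random

def balance_classes(samples_by_class, target, seed=0):
    """Resample every class to exactly `target` items.

    Classes with at least `target` items are down-sampled without replacement;
    smaller classes keep all items and are topped up with random duplicates.
    (Assumed strategy: the paper does not specify the resampling method.)
    """
    rng = random.Random(seed)
    balanced = {}
    for label, items in samples_by_class.items():
        if len(items) >= target:
            balanced[label] = rng.sample(items, target)
        else:
            extra = [rng.choice(items) for _ in range(target - len(items))]
            balanced[label] = list(items) + extra
    return balanced

# Per-data-center class counts from Table I; integer IDs stand in for beats.
dc_counts = {"N": 18752, "L": 2017, "R": 1813, "A": 636, "V": 1782}
dc_data = {label: list(range(n)) for label, n in dc_counts.items()}

balanced = balance_classes(dc_data, target=2000)
sizes = {label: len(items) for label, items in balanced.items()}
total = sum(sizes.values())
print(sizes, total)
```

With the Table I counts, every class ends up with 2000 samples and each data center holds 10000 samples in total, matching the balanced columns of the table.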

D.PREPROCESSING DWT NOISE ELIMINATION

Each data center preprocesses its raw ECG signals using the discrete wavelet transform (DWT) to eliminate noise. This preprocessing step is crucial for enhancing the signal quality, which directly impacts the performance of subsequent ML models. The DWT decomposes the ECG signal into different frequency components. By applying thresholding to the detail coefficients and reconstructing the signal, noise is effectively reduced.

Discrete wavelet decomposition: The DWT decomposes a signal x[n] into approximation coefficients (A) and detail coefficients (D). This process can be repeated on the approximation coefficients to obtain multiple levels of decomposition.

Approximation coefficients (A):

$$A_j[k] = \sum_{n} x[n]\,\varphi_{j,k}[n]$$

where φj,k[n] is the scaling function (low-pass filter).

Detail coefficients (D):

$$D_j[k] = \sum_{n} x[n]\,\psi_{j,k}[n]$$

where ψj,k[n] is the wavelet function (high-pass filter).

Wavelet reconstruction (inverse DWT): To reconstruct the signal from the wavelet coefficients of a J-level decomposition [28]:

$$x[n] = \sum_{k} A_J[k]\,\varphi_{J,k}[n] + \sum_{j=1}^{J}\sum_{k} D_j[k]\,\psi_{j,k}[n]$$
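The decompose, threshold, and reconstruct pipeline described above can be illustrated with a small sketch. It assumes the Haar wavelet, soft thresholding, and a fixed threshold, none of which are specified in the paper; the function names and the synthetic test signal are hypothetical, and the input length must be divisible by 2 raised to the number of levels.

```python
import math

def haar_dwt(signal):
    """One Haar DWT level: returns (approximation, detail) coefficients."""
    s = 1.0 / math.sqrt(2.0)
    approx = [(signal[i] + signal[i + 1]) * s for i in range(0, len(signal), 2)]
    detail = [(signal[i] - signal[i + 1]) * s for i in range(0, len(signal), 2)]
    return approx, detail

def haar_idwt(approx, detail):
    """Inverse of haar_dwt: interleaves the reconstructed sample pairs."""
    s = 1.0 / math.sqrt(2.0)
    out = []
    for a, d in zip(approx, detail):
        out.append((a + d) * s)
        out.append((a - d) * s)
    return out

def soft_threshold(coeffs, t):
    """Shrink each coefficient toward zero by t; small ones become zero."""
    return [math.copysign(max(abs(c) - t, 0.0), c) for c in coeffs]

def denoise(signal, levels=2, threshold=0.2):
    """Decompose, threshold the detail coefficients, then reconstruct."""
    approx = list(signal)
    details = []
    for _ in range(levels):
        approx, d = haar_dwt(approx)
        details.append(soft_threshold(d, threshold))
    for d in reversed(details):
        approx = haar_idwt(approx, d)
    return approx

def mse(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b)) / len(a)

# Synthetic stand-in for an ECG trace: slow sine plus alternating noise.
n = 128
clean = [math.sin(2 * math.pi * i / n) for i in range(n)]
noisy = [c + 0.1 * (-1) ** i for i, c in enumerate(clean)]
denoised = denoise(noisy, levels=2, threshold=0.2)
print(mse(noisy, clean), mse(denoised, clean))
```

With a threshold of zero the reconstruction returns the input unchanged, which is a convenient sanity check. In practice the wavelet family, decomposition depth, and threshold would be chosen for the ECG sampling rate and noise profile.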

E.OPTIMIZED ML/DL MODEL

Post-preprocessing, each data center trains its own ML/DL model. The training process includes model selection, hyperparameter tuning, and optimization. After training, the optimized model is transferred to the central server.

ML/DL Model: In each experiment, one of the optimized models, viz., optimized ANN using GA, optimized ANN using PSO, optimized CNN, or optimized RNN, is selected for ECG classification and its performance is evaluated. The ANN parameters, such as epochs, batch size, and learning rate, are selected to improve the performance of the model. To reduce the error and update the weights, optimizers such as Adam, RMSprop, or SGD are used.

The framework is validated with the four optimized models of training. This enables the system to explore different architectures and optimization techniques to improve the classification accuracy of the models.

Model 1 – Optimized artificial neural network with genetic algorithm (OANNGA): In this model, each data center trains an OANNGA model. The GA is applied to optimize the NN’s hyperparameters, such as the number of hidden layers and the number of neurons. After training the OANNGA model independently at each data center, the resultant model weights are sent to the central server.

Model 2 – Optimized artificial neural network with particle swarm optimization (OANNPSO): In this model, the same data are trained using OANNPSO, where PSO is used for hyperparameter tuning. This approach allows the model to search for an optimal set of hyperparameters that may outperform the genetic algorithm in certain aspects, such as convergence speed or classification accuracy.

Model 3 – Optimized convolutional neural network (OCNN): The model involves training with an OCNN model. CNNs are known for their performance in pattern recognition and classification tasks, especially in handling temporal and spatial dependencies, which are common in ECG and other biomedical signals. Each data center trains its OCNN independently, and the results are sent to the central server.

Model 4 – Optimized recurrent neural network (ORNN): In this model, ORNN is employed, using recurrent neural networks (RNN) optimized for time-series data like ECG signals. RNNs excel in capturing long-term dependencies and sequences in data. This model is trained separately at each data center, and the resulting model weights are transferred to the central server.
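To make the idea of swarm-based hyperparameter tuning concrete, the sketch below runs a basic PSO over two hyperparameters. It is illustrative only: the objective `toy_validation_loss` is a hypothetical stand-in for training a network and measuring its validation loss, and the swarm size, iteration count, and coefficients are assumed values, not the settings used in this work.

```python
import random

def pso_minimize(objective, bounds, n_particles=12, n_iters=40, seed=0,
                 inertia=0.7, c_personal=1.5, c_global=1.5):
    """Basic particle swarm optimization over a box-constrained search space."""
    rng = random.Random(seed)
    dim = len(bounds)
    pos = [[rng.uniform(lo, hi) for lo, hi in bounds] for _ in range(n_particles)]
    vel = [[0.0] * dim for _ in range(n_particles)]
    best_pos = [p[:] for p in pos]                  # personal best positions
    best_val = [objective(p) for p in pos]          # personal best values
    g = min(range(n_particles), key=lambda i: best_val[i])
    g_pos, g_val = best_pos[g][:], best_val[g]      # global best so far
    for _ in range(n_iters):
        for i in range(n_particles):
            for d in range(dim):
                r1, r2 = rng.random(), rng.random()
                vel[i][d] = (inertia * vel[i][d]
                             + c_personal * r1 * (best_pos[i][d] - pos[i][d])
                             + c_global * r2 * (g_pos[d] - pos[i][d]))
                lo, hi = bounds[d]
                # Move the particle and clamp it to the search bounds.
                pos[i][d] = min(max(pos[i][d] + vel[i][d], lo), hi)
            val = objective(pos[i])
            if val < best_val[i]:
                best_val[i], best_pos[i] = val, pos[i][:]
                if val < g_val:
                    g_val, g_pos = val, pos[i][:]
    return g_pos, g_val

def toy_validation_loss(params):
    """Hypothetical surrogate: minimum at log10(lr) = -3 and 64 hidden units."""
    log_lr, hidden_units = params
    return (log_lr + 3.0) ** 2 + ((hidden_units - 64.0) / 32.0) ** 2

# Search space: log10 of the learning rate and the number of hidden units.
bounds = [(-5.0, -1.0), (8.0, 256.0)]
best_params, best_loss = pso_minimize(toy_validation_loss, bounds)
print(best_params, best_loss)
```

In a real setting each objective evaluation would train and validate a model, so the swarm size and iteration budget trade search quality against compute. A GA would explore the same space using selection, crossover, and mutation instead of velocity updates.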

1.OANNGA AND OANNPSO

Optimized ANN using GA and optimized ANN using PSO are well explained in our prior work on ECG arrhythmias classification [29].

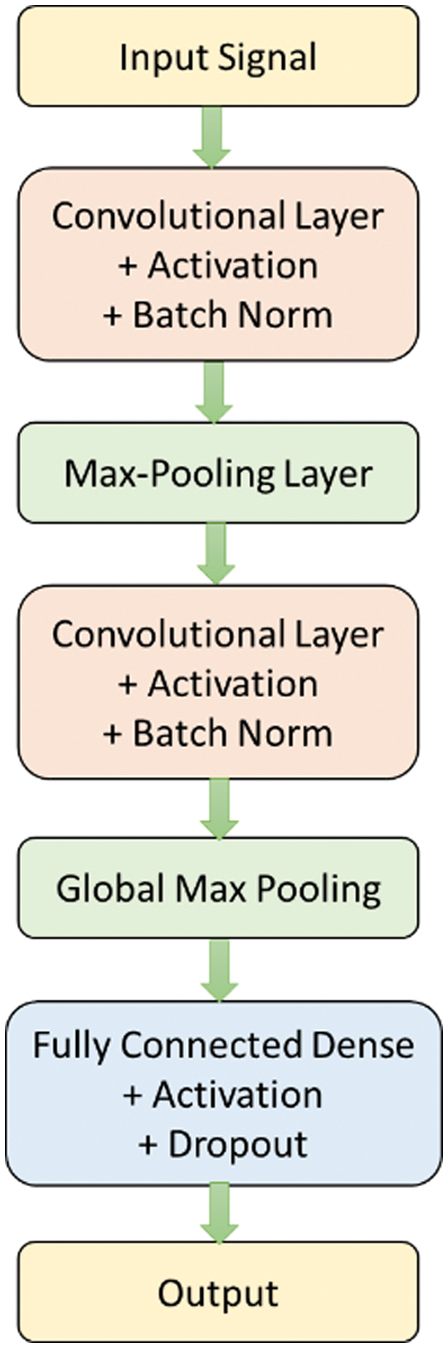

2.OPTIMIZED CONVOLUTIONAL NEURAL NETWORKS (OCNNS)

The convolution and pooling layers (PL) of a 1D CNN are used to capture local patterns of the input ECG. This architecture is designed to learn from the ECG by extracting hierarchical features automatically.

Different filters are applied to the input ECG in the convolutional layer. The convolution equation is given as follows:

$$Y_i = f\left(\sum_{j} W_j\,X_{i+j} + b\right)$$

where Yi – ith output in the feature map; Xi+j – (i+j)th input; Wj – jth filter weight; b – bias; f – activation function.

To reduce the spatial dimensions, the PL of the CNN is used. In this work, max pooling is applied:

$$Y_i = \max\left(X_{i \cdot s},\, X_{i \cdot s + 1},\, \ldots,\, X_{i \cdot s + s - 1}\right)$$

where Yi – ith output in the pooled feature map; X(i*s), …, X(i*s+s−1) represent the input values within the pooling window of size s.

The rectified linear unit (ReLU) is a non-linear activation function that is typically applied after the convolutional layer. It is defined as:

$$f(x) = \max(0, x)$$

The model becomes more computationally effective by retaining the most significant features and reducing the spatial dimensions of the feature maps through the use of PL.

In a max PL, the operation takes the maximum value in each region of the feature map. Let’s denote the pooling operation as:

$$P^{(l)}_i = \max_{0 \le j < s} Y^{(l)}_{i \cdot s + j}$$

where s is the stride (step size) of the pooling operation, Y(l) is the feature map of layer l, and P(l) is the pooled output.

To provide input to the fully connected layers, the final feature maps generated by the convolutional and pooling layers are flattened into a 1D vector (F):

$$F = \operatorname{flatten}\left(P^{(l)}\right)$$
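The convolution, ReLU, max-pooling, and flattening steps described above can be traced on a toy input. The sketch below is a plain-Python illustration, assuming a "valid" convolution without padding and a pooling window equal to the stride; the filter values and the eight-sample signal are made up for the example and are not taken from the trained OCNN.

```python
def relu(x):
    """Rectified linear unit: f(x) = max(0, x)."""
    return max(0.0, x)

def conv1d(signal, weights, bias):
    """Valid 1D convolution with ReLU: Y_i = f(sum_j W_j * X_{i+j} + b)."""
    k = len(weights)
    return [relu(sum(weights[j] * signal[i + j] for j in range(k)) + bias)
            for i in range(len(signal) - k + 1)]

def max_pool1d(feature_map, s):
    """Non-overlapping max pooling with window size and stride s."""
    return [max(feature_map[i:i + s])
            for i in range(0, len(feature_map) - s + 1, s)]

def flatten(feature_maps):
    """Concatenate all pooled feature maps into one 1D vector F."""
    return [v for fmap in feature_maps for v in fmap]

# Toy input and two hand-picked filters (weights, bias); values are illustrative.
signal = [0.0, 1.0, 3.0, 2.0, 0.0, -1.0, 0.0, 2.0]
filters = [([1.0, -1.0], 0.0), ([0.5, 0.5], 0.0)]

pooled = [max_pool1d(conv1d(signal, w, b), s=2) for w, b in filters]
F = flatten(pooled)
print(F)  # [0.0, 2.0, 1.0, 2.0, 2.5, 0.0]
```

The first filter responds to falling edges and the second to the local average, so the flattened vector F combines both views of the signal before it reaches the fully connected layers.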

To obtain class probabilities, the softmax activation function is applied to the output of the fully connected layer:

$$\hat{y}_c = \frac{e^{z_c}}{\sum_{c'=1}^{C} e^{z_{c'}}}$$

where $\hat{y}_c$ represents the predicted probability of class c, $z_c$ is the output of the fully connected layer for class c, and C is the total number of classes (for example, 5 classes for arrhythmia types).

For classification tasks, the cross-entropy loss function is typically used to measure the difference between the true labels y and the predicted probabilities $\hat{y}$:

$$L = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} y_{i,c}\,\log \hat{y}_{i,c}$$

where N – number of samples; $y_{i,c}$ – true label for sample i and class c; $\hat{y}_{i,c}$ – predicted probability for sample i and class c.
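The softmax and cross-entropy computations can be checked numerically with a short sketch. It assumes one-hot true labels and a loss averaged over the N samples; the logits below are illustrative values, not outputs of the trained network.

```python
import math

def softmax(logits):
    """Convert raw class scores into probabilities that sum to 1."""
    m = max(logits)                       # subtract the max for numerical stability
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]

def cross_entropy(y_true, y_prob, eps=1e-12):
    """Mean cross-entropy over N samples; rows of y_true are one-hot."""
    n = len(y_true)
    loss = 0.0
    for t_row, p_row in zip(y_true, y_prob):
        loss -= sum(t * math.log(p + eps) for t, p in zip(t_row, p_row))
    return loss / n

# Five classes (N, L, R, A, V); both samples truly belong to class index 0.
one_hot = [1.0, 0.0, 0.0, 0.0, 0.0]
uniform = softmax([0.0, 0.0, 0.0, 0.0, 0.0])       # an uninformed prediction
confident = softmax([4.0, 0.0, 0.0, 0.0, 0.0])     # a confident, correct one

loss_uniform = cross_entropy([one_hot], [uniform])
loss_confident = cross_entropy([one_hot], [confident])
print(loss_uniform, loss_confident)
```

An uninformed five-class prediction gives a loss of ln 5, and the loss falls as the probability assigned to the correct class rises, which is the quantity the optimizer drives down during training.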

To optimize CNN’s performance, hyperparameters such as the number of filters, filter sizes, and learning rate are fine-tuned using an optimization algorithm, such as PSO or GA [29]. The OCNN architecture is shown in Fig. 2. The goal of the optimization is to minimize the loss function:

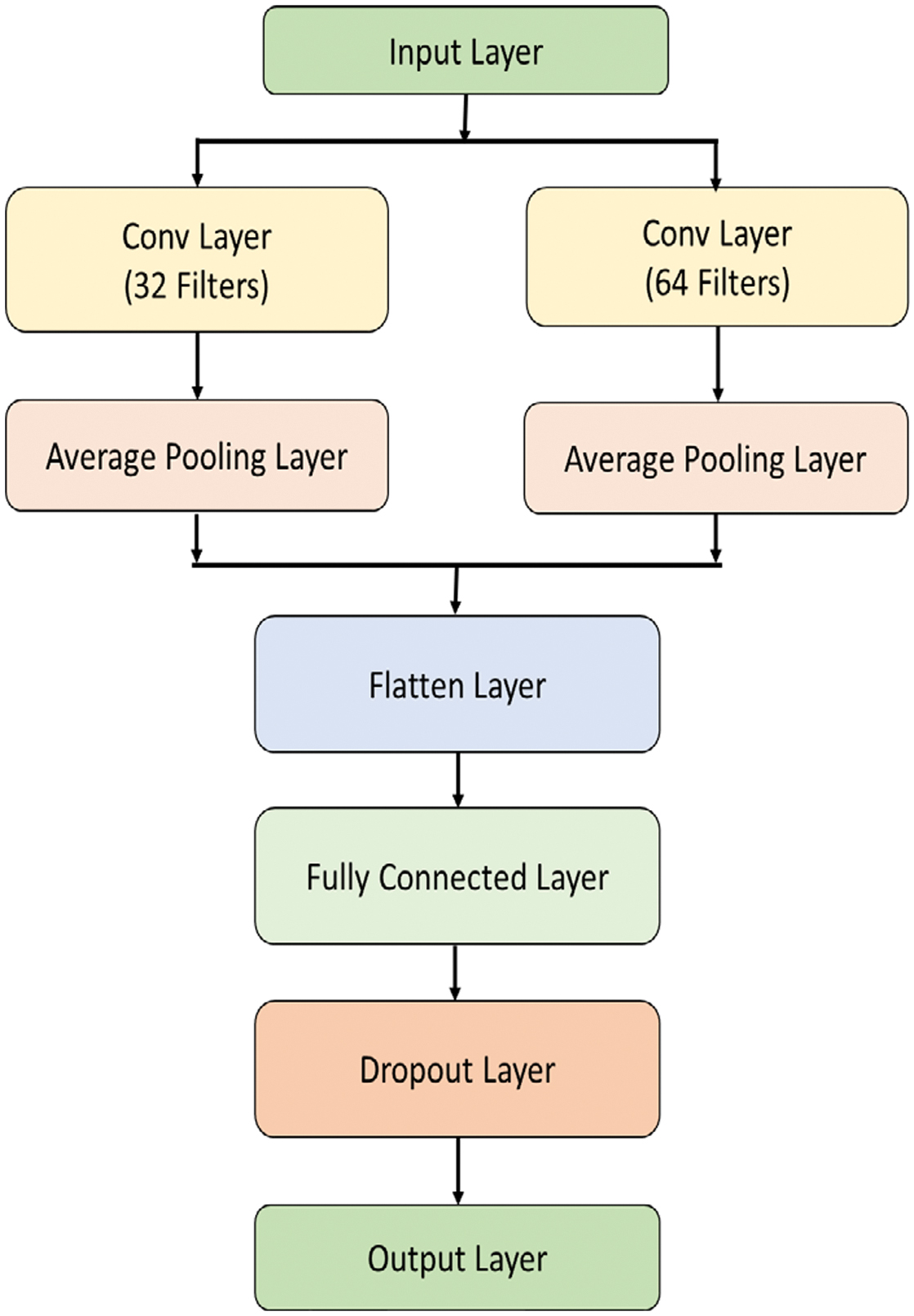

where θ represents the set of hyperparameters being optimized.3.OPTIMIZED RECURRENT NEURAL NETWORKS (ORNNS)

The proposed methodology leverages the capabilities of ORNN with LSTM units to model the temporal dependencies in ECG signals, aiding the accurate identification and classification of different types of heartbeats, especially focusing on detecting arrhythmia cases. The LSTM network is trained on a labeled dataset comprising various ECG signal segments corresponding to different heartbeats, ensuring robustness and generalizability of the model.

We train the ORNN LSTM network using a labeled dataset comprising normal and arrhythmia heartbeats. The network learns to distinguish distinctive types of arrhythmias based on the extracted features. The performance metrics used are accuracy, specificity, sensitivity, and F1-score. RNNs capture dependencies between time steps in sequential data such as ECG signals.
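The performance metrics named above follow directly from confusion-matrix counts. The sketch below assumes a one-vs-rest view, in which one arrhythmia class is treated as positive and all other classes as negative; the paper does not state how per-class values are averaged, and the counts used here are hypothetical.

```python
def binary_metrics(tp, fp, tn, fn):
    """Accuracy, sensitivity, specificity, and F1 from confusion counts."""
    accuracy = (tp + tn) / (tp + fp + tn + fn)
    sensitivity = tp / (tp + fn)          # recall / true-positive rate
    specificity = tn / (tn + fp)          # true-negative rate
    precision = tp / (tp + fp)
    f1 = 2 * precision * sensitivity / (precision + sensitivity)
    return {"ACC": accuracy, "SEN": sensitivity, "SPE": specificity, "F1": f1}

# Hypothetical counts for one class treated as "positive".
metrics = binary_metrics(tp=90, fp=20, tn=180, fn=10)
print(metrics)
```

Sensitivity reflects how many true arrhythmia beats are caught, while specificity reflects how rarely other beats are mislabeled, so reporting both guards against a model that favors one kind of error.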

In ORNN, the model is optimized using optimization algorithms like PSO or GA. The objective is to find the optimal hyperparameters that reduce the loss function.

Let θ represent the set of parameters in the RNN. The aim is to minimize the loss function L(θ), which is typically the cross-entropy loss for classification tasks:

$$L(\theta) = -\frac{1}{N}\sum_{i=1}^{N}\sum_{c=1}^{C} y_{i,c}\,\log \hat{y}_{i,c}$$

where N – the number of samples; $y_{i,c}$ – true label; $\hat{y}_{i,c}$ – predicted probability for sample i and class c.

The optimization algorithm adjusts the model’s parameters θ to minimize this loss.

Backpropagation through time (BPTT) is employed to train the RNN, which is an extension of backpropagation for sequences. The gradients of the loss with respect to each parameter θ are computed by BPTT by unrolling the RNN and applying the chain rule through the time steps.

The weight updates for each parameter are computed as:

$$\theta \leftarrow \theta - \eta\,\frac{\partial L}{\partial \theta}$$

where η is the learning rate, and ∂L/∂θ represents the gradient of the loss function with respect to the parameter θ.

Each data center uses its pre-processed ECG data to train a local ML/DL model. The model is optimized through hyperparameter tuning and using an appropriate optimizer. The architecture of the optimized RNN is shown in Fig. 3.
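The parameter update used during training, in which each parameter moves against its gradient scaled by the learning rate, can be illustrated on a toy problem. The sketch assumes a simple quadratic loss whose gradient is known in closed form; in the actual ORNN the gradients would come from BPTT, and the helper name `gradient_step` is hypothetical.

```python
def gradient_step(theta, grad, lr):
    """One update: theta <- theta - lr * dL/dtheta, applied element-wise."""
    return [t - lr * g for t, g in zip(theta, grad)]

# Toy loss L(theta) = sum(theta_i ** 2), whose gradient is 2 * theta_i.
theta = [1.0, -2.0]
first = gradient_step(theta, [2.0 * t for t in theta], lr=0.1)

for _ in range(50):
    grad = [2.0 * t for t in theta]
    theta = gradient_step(theta, grad, lr=0.1)

print(first, theta)
```

Each step shrinks the parameters by a constant factor here, so they approach the minimizer at zero. Too large a learning rate would make the same loop diverge, which is one reason the learning rate is among the tuned hyperparameters.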

4.MODEL WEIGHTS AGGREGATION

FL is an innovative method for training models across multiple parties without sharing their raw data. This is especially important in sensitive domains like healthcare, where data privacy and security are paramount [30]. This paper presents an FL framework applied to ECG data preprocessing and model optimization, ensuring enhanced model performance while maintaining data privacy across multiple data centers.

In this methodology, the models are trained, and the optimized weights (parameters) are extracted. These weights encapsulate the learned features and patterns from the ECG data. The optimized model weights from each data center are securely transmitted to the central server. A central server acts as the aggregation point for the model weights from all data centers. It does not have access to raw data but collects the trained weights from each center.

The central server averages the collected model weights using federated averaging (FedAvg) to generate a global model. Each data center's contribution is weighted by its share of the samples, so that the information learned by each local model is synthesized into a global model.

If wi are the weights from data center i and ni is the number of samples in data center i, the aggregated weights wglobal are given by

$$w_{\text{global}} = \sum_{i=1}^{K} \frac{n_i}{N}\, w_i$$

where N is the total number of samples across all data centers, and K is the number of data centers. The aggregated weights form the final global model. This model benefits from the diversity of data across all participating centers, leading to improved generalization and performance.

The results obtained using these methods are compared in the results and discussions section, which highlights the best-performing neural structure for use with FedAvg.
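The aggregation rule can be sketched in a few lines. The example below assumes each data center's model is represented as a flat list of weights of equal length; the weight values are made up, and the sample counts of 10000 per center are taken from the balanced totals in Table I.

```python
def fedavg(client_weights, client_sizes):
    """Sample-weighted average of client weight vectors (FedAvg)."""
    total = sum(client_sizes)                       # N in the formula
    n_params = len(client_weights[0])
    return [sum(n * w[p] for w, n in zip(client_weights, client_sizes)) / total
            for p in range(n_params)]

# Four data centers with equal sample counts: reduces to a plain mean.
weights = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
sizes = [10000, 10000, 10000, 10000]
w_global = fedavg(weights, sizes)
print(w_global)  # [4.0, 5.0]

# Unequal sample counts: the larger center pulls the average toward itself.
w_unequal = fedavg([[0.0], [4.0]], [1, 3])
print(w_unequal)  # [3.0]
```

Because every data center holds the same number of balanced samples in this work, the weighting factor is 1/4 for each center. With unequal centers, the same function lets larger centers influence the global model proportionally more.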

IV.RESULTS AND DISCUSSIONS

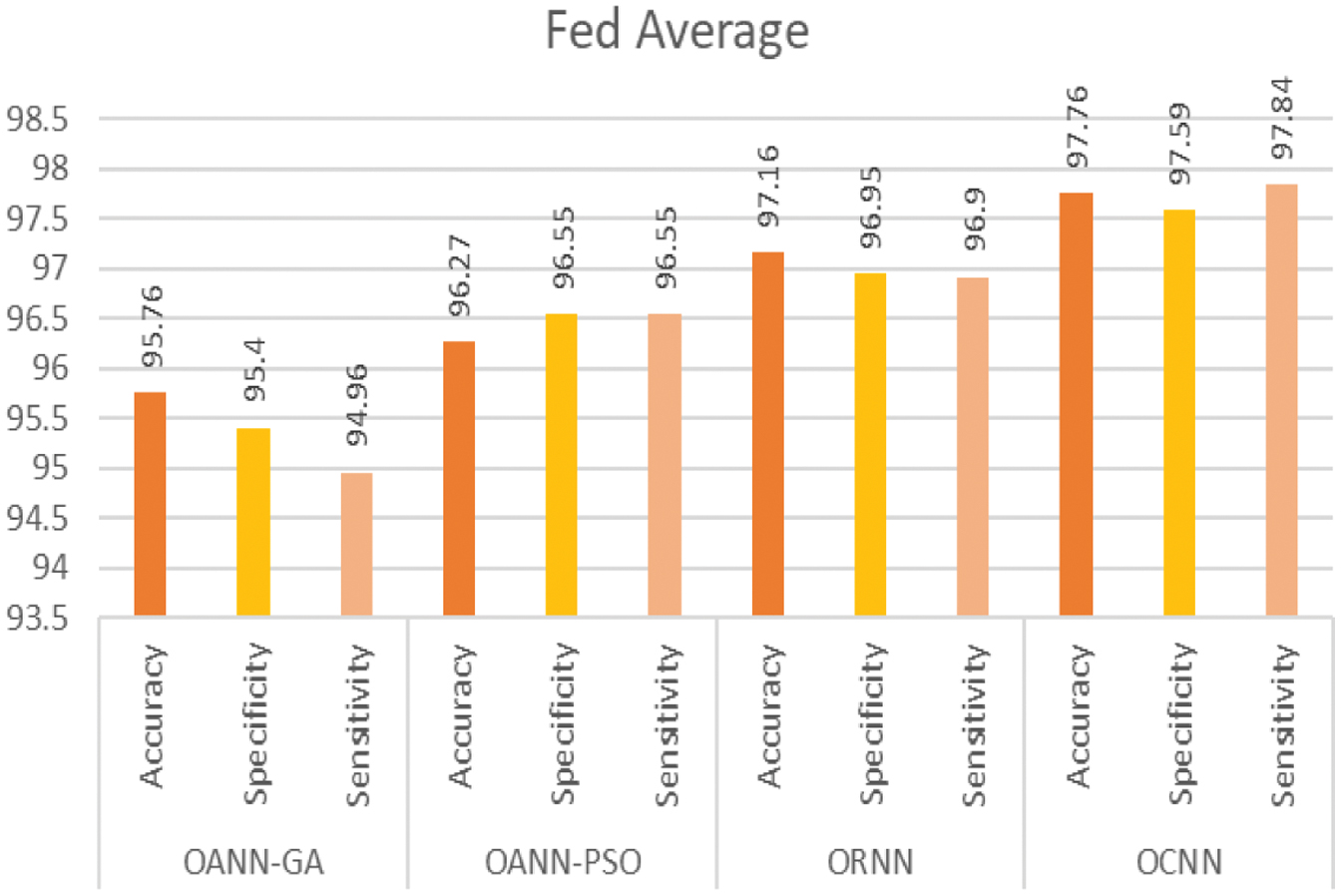

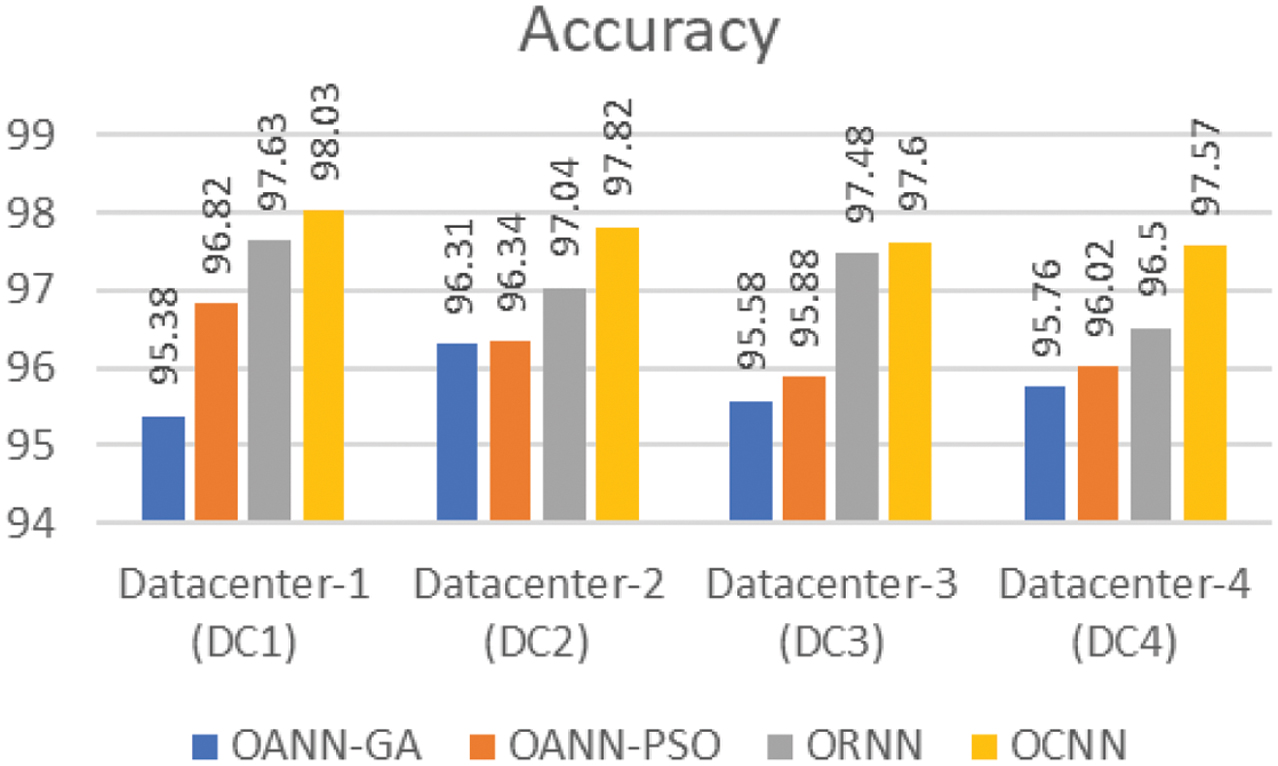

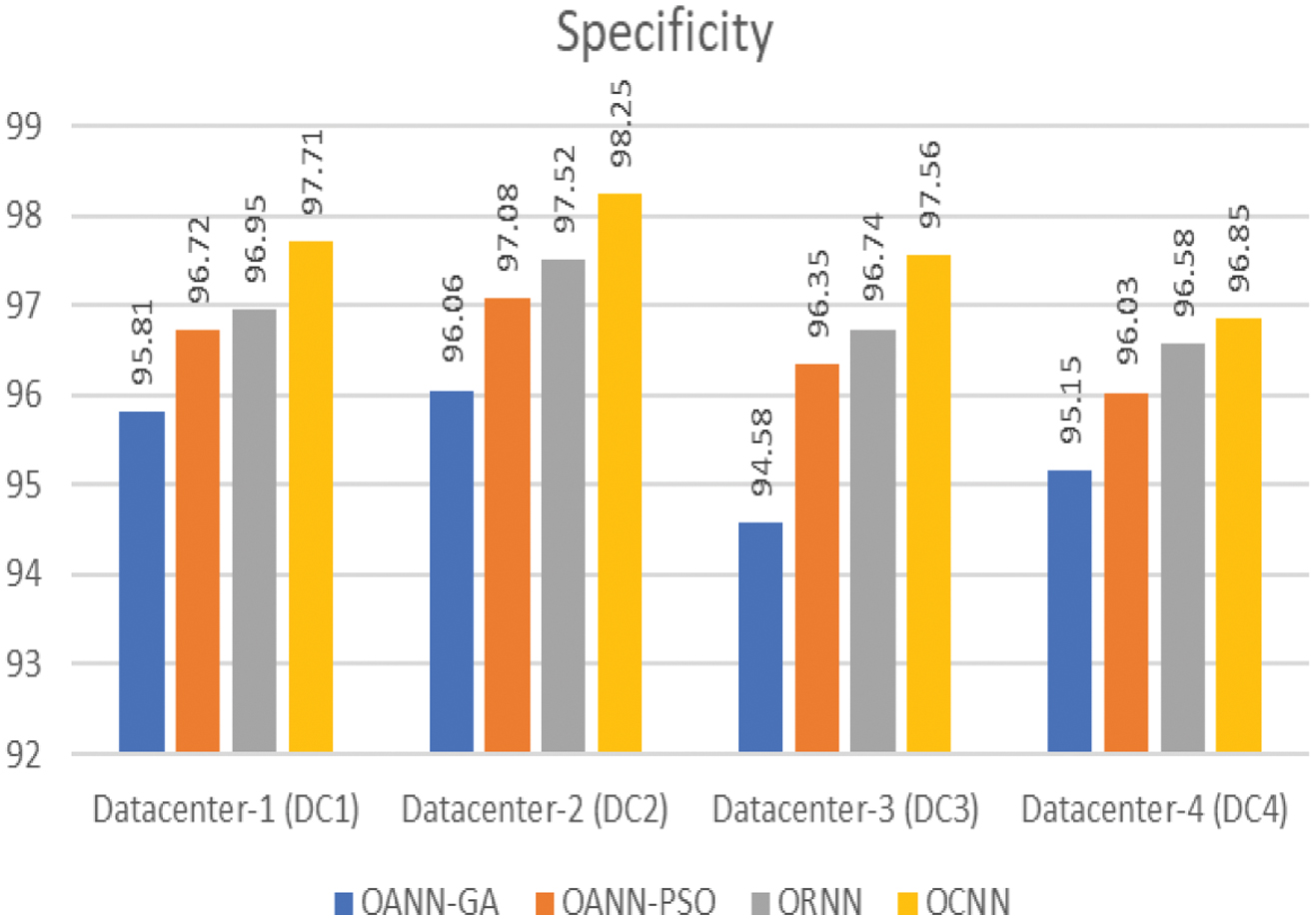

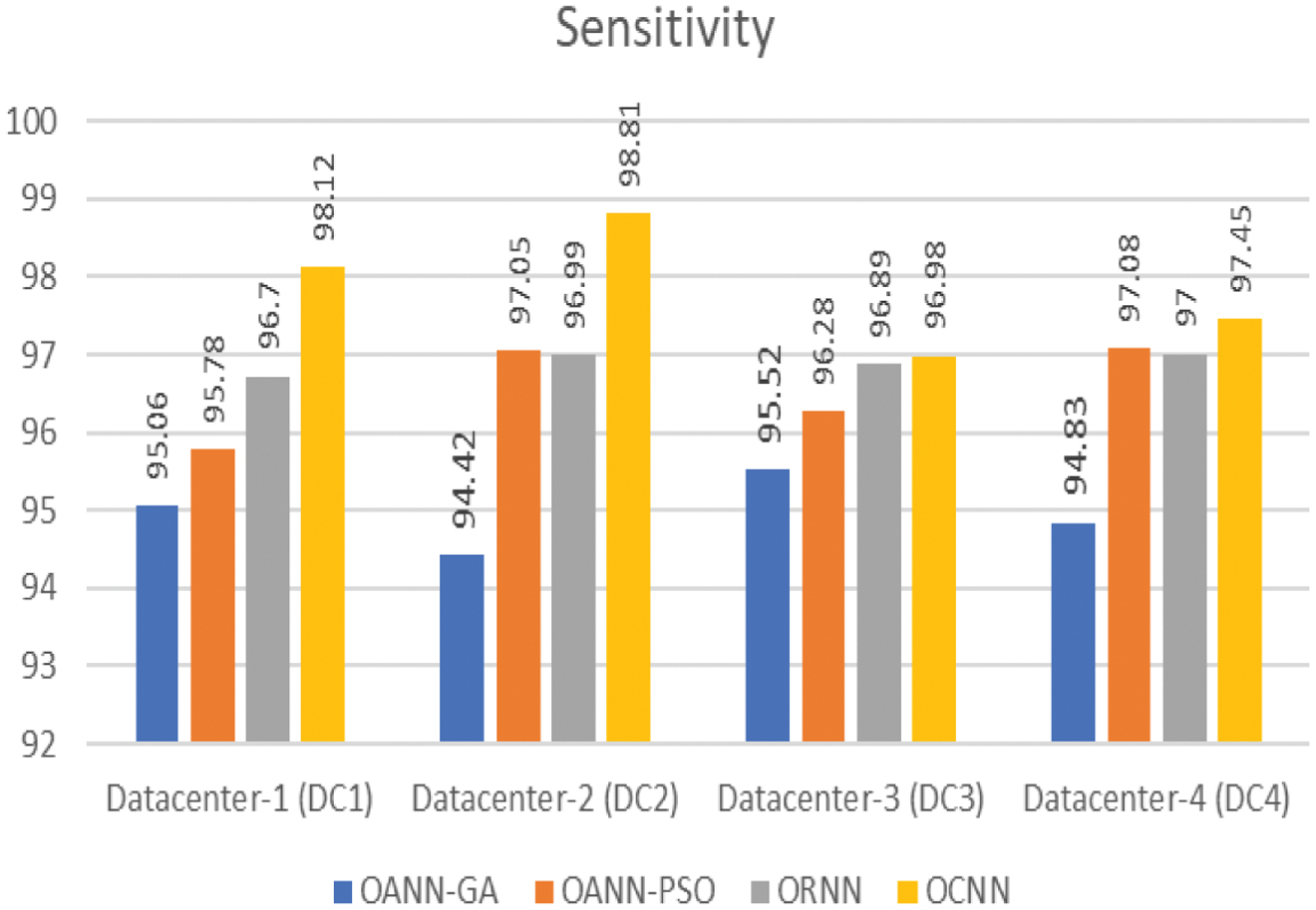

The FL approach involves four data centers, each with its own database. The process included preprocessing the data, training the different types of models (OANNGA, OANNPSO, OCNN, and ORNN) with the same model type used at all data centers in a given run, iterative model averaging (FedAvg), and a final model comparison to identify the most accurate model. Table II shows the performance of the proposed models at each data center.

Table II. The classifier performance of the different models

| Data Center | OANN-GA ACC | OANN-GA SPE | OANN-GA SEN | OANN-PSO ACC | OANN-PSO SPE | OANN-PSO SEN | ORNN ACC | ORNN SPE | ORNN SEN | OCNN ACC | OCNN SPE | OCNN SEN |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| DC1 | 95.38 | 95.81 | 95.06 | 96.82 | 96.72 | 95.78 | 97.63 | 96.95 | 96.7 | 98.03 | 97.71 | 98.12 |
| DC2 | 96.31 | 96.06 | 94.42 | 96.34 | 97.08 | 97.05 | 97.04 | 97.52 | 96.99 | 97.82 | 98.25 | 98.81 |
| DC3 | 95.58 | 94.58 | 95.52 | 95.88 | 96.35 | 96.28 | 97.48 | 96.74 | 96.89 | 97.6 | 97.56 | 96.98 |
| DC4 | 95.76 | 95.15 | 94.83 | 96.02 | 96.03 | 97.08 | 96.5 | 96.58 | 97.00 | 97.57 | 96.85 | 97.45 |
| Fed Average | 95.76 | 95.40 | 94.96 | 96.27 | 96.55 | 96.55 | 97.16 | 96.95 | 96.90 | 97.76 | 97.59 | 97.84 |
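As a quick arithmetic check on Table II, the "Fed Average" row appears to match the unweighted mean of the four data-center rows to two decimal places. The sketch below verifies this for the OANN-GA and OCNN columns using the values copied from the table; the tolerance of 0.006 is an assumption that allows for rounding of the reported figures.

```python
# Per-data-center values (DC1..DC4) and the reported Fed Average from Table II.
table = {
    ("OANN-GA", "ACC"): ([95.38, 96.31, 95.58, 95.76], 95.76),
    ("OANN-GA", "SPE"): ([95.81, 96.06, 94.58, 95.15], 95.40),
    ("OANN-GA", "SEN"): ([95.06, 94.42, 95.52, 94.83], 94.96),
    ("OCNN", "ACC"): ([98.03, 97.82, 97.60, 97.57], 97.76),
    ("OCNN", "SPE"): ([97.71, 98.25, 97.56, 96.85], 97.59),
    ("OCNN", "SEN"): ([98.12, 98.81, 96.98, 97.45], 97.84),
}

TOLERANCE = 0.006  # assumed allowance for two-decimal rounding

checks = {}
for key, (per_center, reported) in table.items():
    mean = sum(per_center) / len(per_center)
    checks[key] = abs(mean - reported) <= TOLERANCE
    print(key, round(mean, 4), reported, checks[key])
```

All six columns agree within rounding, which is consistent with every data center contributing equally to the reported average.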

All the proposed models outperformed the conventional methods. OCNN exhibited the best performance in the comprehensive classification of micro-classes within the dataset, achieving an average accuracy of 97.76%, a sensitivity of 97.84%, and a specificity of 97.59%. Furthermore, these optimized neural structures not only improved the efficacy of the system but also preserved the privacy of the data at each data center through federated modeling. The following figures show the performance metrics of each optimized neural model. Figure 4 shows the classification accuracy of the proposed models at each data center. Table III presents the accuracy of various existing methods and the proposed method.

Fig. 4. Accuracy of classification.


Table III. Accuracy of the various existing methods and the proposed method

| Author | Methodology | Year | Accuracy |
|---|---|---|---|
| [ | Genetic, CFL FedAvg | 2024 | 73% |
| [ | RNN and Long Short-Term Memory | 2024 | 97% |
| [ | BiLSTM, Federated Learning | 2024 | 93% |
| [ | ResNet, Federated Learning | 2023 | 94.8% |
| [ | 1D-CNN, FedCluster | 2022 | 89.38% |
| Proposed method | OCNN, FedAvg | – | 97.76% |

Figure 5 shows the classification specificity of the proposed models at each data center.

Fig. 5. Specificity of classification.


Figure 6 shows the classification sensitivity of the proposed models at each data center.

Fig. 6. Sensitivity of classification.


Figure 7 shows the performance of the FedAverage proposed in the work.

V.CONCLUSION

Cardiovascular disease is a critical global health issue, requiring accurate and privacy-preserving diagnostic solutions. This study presents an FL-based OCNN for ECG classification, ensuring both data security and high predictive performance. Unlike conventional approaches, our method dynamically selects the best-performing optimized model at each institution before federated aggregation, enhancing learning efficiency and generalization. The proposed OCNN achieved an average accuracy of 97.76%, a sensitivity of 97.84%, and a specificity of 97.59%, demonstrating its effectiveness in detecting arrhythmias with high precision. Our approach addresses non-IID data challenges, reduces communication overhead, and improves computational efficiency, making it scalable for real-world deployment. Future work will focus on multi-modal data integration and optimizing communication efficiency to further improve scalability and resource utilization.